
cv::align not optimized (windows 10, C++) #2376

Comments

@dorodnic Visually, it seems better than v2.15.0. If rs2::align did not change between 2.15 and 2.16, the improvement might come from setting openmp = TRUE.

[Realsense Customer Engineering Team Comment]

I also get pretty bad FPS running this example, maybe about 10 FPS.

Hello @HippoEug,

I have tried it, and the FPS is indeed better now.

[Realsense Customer Engineering Team Comment]

@RealSense-Customer-Engineering Will do once I've tried it. If the new release uses CUDA, I'm sure it will be better than the previous OpenMP one.

Issue Description

CPU: i5-8400

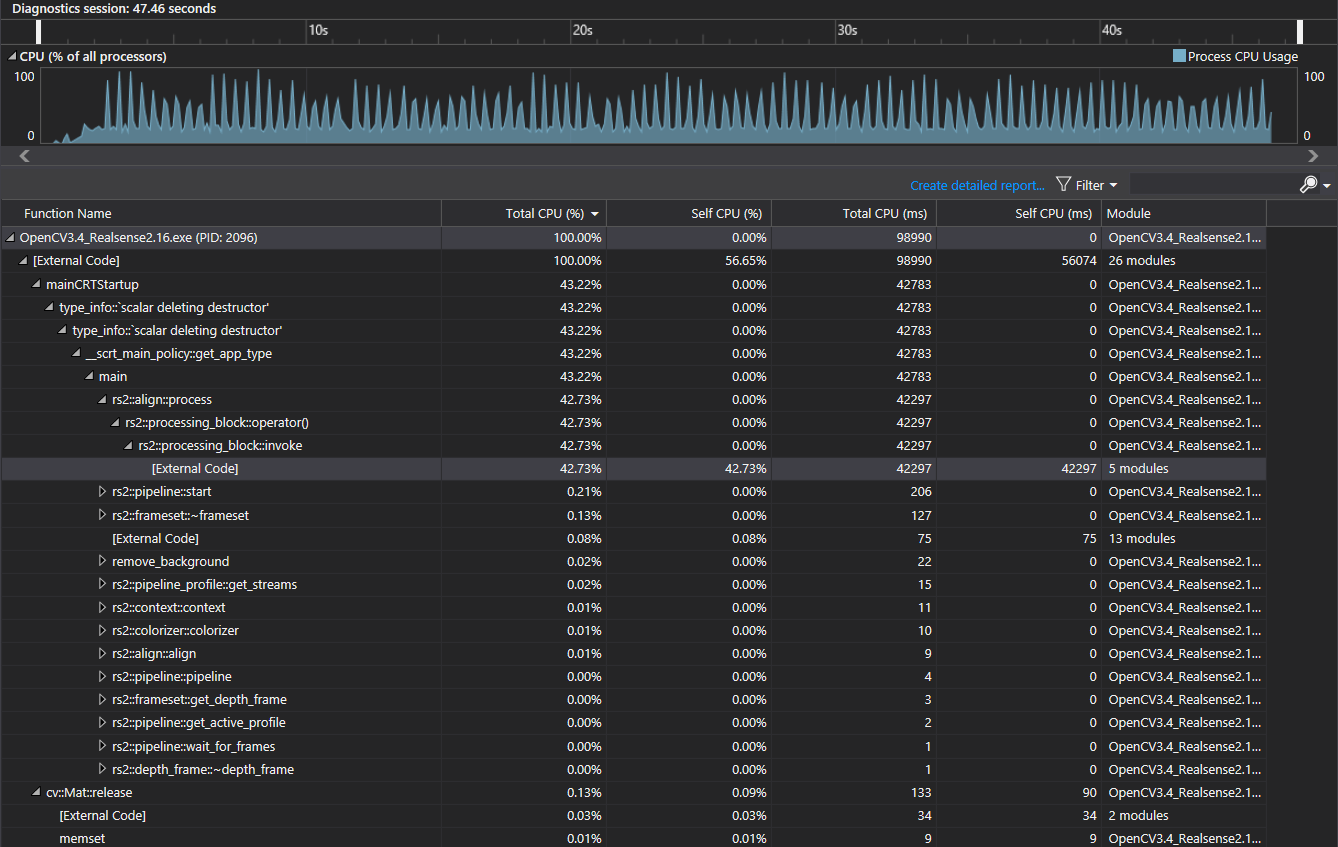

As described in #2321, the align function slows down overall performance. I checked the 2.16.0 pre-release notes and haven't found any update regarding the align function yet.

(Don't worry about the project name; I'm using v2.15.0.)

I modified a few lines of the example code to use cv::imshow to show the result; the rest is basically the same. Code shown below:
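The frame rates reported in this thread (for example, about 10 FPS) come from running a capture, align and display loop like the one in the example. The following is a hedged sketch, not the reporter's code, assuming C++11. It shows one way to measure that rate with a hypothetical `FpsCounter` helper, which is not part of librealsense or OpenCV: call `tick()` once per loop iteration, for instance right after `align.process(frames)` and `cv::imshow(...)`, and read `fps()` periodically.

```cpp
#include <chrono>
#include <cstddef>

// Hypothetical helper (not part of librealsense or OpenCV): counts frames and
// reports the average frame rate since construction or the last reset().
class FpsCounter {
public:
    using Clock = std::chrono::steady_clock;

    FpsCounter() : start_(Clock::now()), frames_(0) {}

    // Call once per processed frame, e.g. after align.process() and cv::imshow().
    void tick() { ++frames_; }

    // Average frames per second for a frame count over an elapsed duration;
    // returns 0 when no time has elapsed, to avoid dividing by zero.
    static constexpr double rate(std::size_t frames,
                                 std::chrono::duration<double> elapsed) {
        return elapsed.count() > 0.0
                   ? static_cast<double>(frames) / elapsed.count()
                   : 0.0;
    }

    // Average FPS measured by this counter so far.
    double fps() const { return rate(frames_, Clock::now() - start_); }

    // Start a new measurement window (e.g. each time the FPS is printed).
    void reset() {
        start_ = Clock::now();
        frames_ = 0;
    }

private:
    Clock::time_point start_;
    std::size_t frames_;
};
```

In the loop, one could print `counter.fps()` every 30 frames and then call `counter.reset()`. Running the same loop with and without the align step would isolate the cost of alignment from the cost of display.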
