DualRefine: Self-Supervised Depth and Pose Estimation Through Iterative Epipolar Sampling and Refinement Toward Equilibrium

This is the official PyTorch implementation of the paper:

DualRefine: Self-Supervised Depth and Pose Estimation Through Iterative Epipolar Sampling and Refinement Toward Equilibrium

Authors: Antyanta Bangunharcana¹, Ahmed Magd Aly², and KyungSoo Kim¹

¹MSC Lab, ²VDC Lab, Korea Advanced Institute of Science and Technology (KAIST)

To be presented at IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

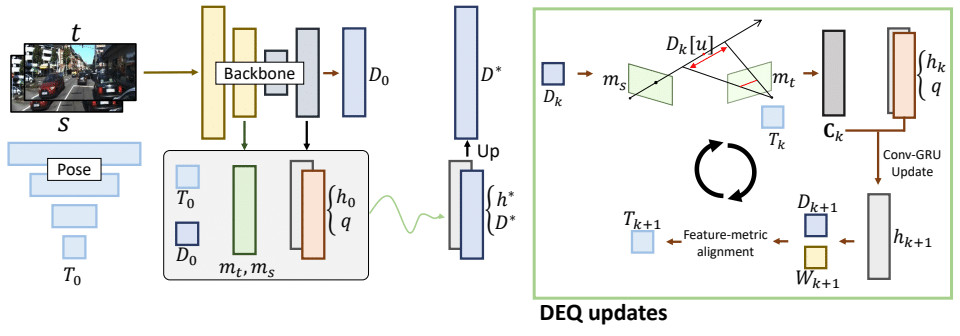

Overview: This repository contains the code for DualRefine, a self-supervised depth and pose estimation model. It refines depth and pose estimates by computing matching costs based on epipolar geometry and a deep equilibrium (DEQ) model.

If you find this work useful in your research, please consider citing:

@inproceedings{bangunharcana2023dualrefine,

title={DualRefine: Self-Supervised Depth and Pose Estimation Through Iterative Epipolar Sampling and Refinement Toward Equilibrium},

author={Bangunharcana, Antyanta and Aly, Ahmed Magd and Kim, KyungSoo},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2023},

organization={IEEE}

}

- Clone this repository:

git clone https://github.com/antabangun/DualRefine.git

cd DualRefine

- Create an Anaconda environment and activate it (optional, but recommended):

conda env create -f environment.yml

conda activate dualrefine

The following table shows the performance of the models on the KITTI dataset. You can download the pretrained models from the links below and place them in the `weights` folder.

| Model | Download | Resolution | Abs Rel ↓ | Sq Rel ↓ | RMSE ↓ | RMSE log ↓ | a1 ↑ | a2 ↑ | a3 ↑ |
|---|---|---|---|---|---|---|---|---|---|
| Monodepth2 | | 192x640 | 0.115 | 0.903 | 4.863 | 0.193 | 0.877 | 0.958 | 0.980 |
| Manydepth | | 192x640 | 0.098 | 0.770 | 4.459 | 0.176 | 0.900 | 0.965 | 0.983 |
| DualRefine_MR | ckpt | 192x640 | 0.087 | 0.698 | 4.234 | 0.170 | 0.914 | 0.967 | 0.983 |
| Manydepth (HR ResNet50) | | 320x1024 | 0.091 | 0.694 | 4.245 | 0.171 | 0.911 | 0.968 | 0.983 |
| DualRefine_HR | ckpt | 288x960 | 0.087 | 0.674 | 4.130 | 0.167 | 0.915 | 0.969 | 0.984 |
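For readers unfamiliar with the column names, the sketch below shows how these error and accuracy metrics are conventionally defined in the monocular depth literature (a1, a2, a3 are the fractions of pixels whose depth ratio falls below 1.25, 1.25², and 1.25³). The function name `depth_metrics` and the list-based inputs are illustrative assumptions, not part of this repository; the actual evaluation script may additionally apply cropping, depth capping, or scale alignment that is not shown here.

```python
import math


def depth_metrics(gt, pred):
    """Standard depth metrics over paired, strictly positive depth values.

    `gt` and `pred` are flat sequences of ground-truth and predicted depths
    (hypothetical inputs used only for illustration).
    """
    assert len(gt) == len(pred) and len(gt) > 0
    n = len(gt)
    pairs = list(zip(gt, pred))

    # Accuracy under threshold: share of pixels with max(gt/pred, pred/gt) < t.
    ratios = [max(g / p, p / g) for g, p in pairs]
    a1 = sum(r < 1.25 for r in ratios) / n
    a2 = sum(r < 1.25 ** 2 for r in ratios) / n
    a3 = sum(r < 1.25 ** 3 for r in ratios) / n

    # Relative errors are normalized by the ground-truth depth.
    abs_rel = sum(abs(g - p) / g for g, p in pairs) / n
    sq_rel = sum((g - p) ** 2 / g for g, p in pairs) / n

    # Root-mean-square errors in linear and log depth.
    rmse = math.sqrt(sum((g - p) ** 2 for g, p in pairs) / n)
    rmse_log = math.sqrt(
        sum((math.log(g) - math.log(p)) ** 2 for g, p in pairs) / n
    )

    return {
        "abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse,
        "rmse_log": rmse_log, "a1": a1, "a2": a2, "a3": a3,
    }


# Toy example: one pixel is off by a factor of two, the other is exact.
metrics = depth_metrics([2.0, 4.0], [1.0, 4.0])
print(metrics)
```

Lower is better for the four error columns, and higher is better for a1 to a3, which matches the arrows in the table header.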

Please follow the instructions in the Monodepth2 repository to download and prepare the KITTI dataset. In our experiments, we use JPEG images for training and evaluation.

After downloading the dataset and the pretrained models, you can evaluate a model on the KITTI dataset by following the bash script `eval.sh`.

At inference, the DualRefine model outputs a tuple of depth and relative poses, including both initial and refined estimates.

- Initial depth can be obtained from the depth dictionary using the key `('disp', 0, 0)`
- Refined depth can be obtained from the depth dictionary using the key `('disp', 0, 1)`
- The `poses` output contains a list of initial and refined poses
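The key layout described above can be sketched as follows. The helper `split_estimates` and the stand-in values are hypothetical and exist only to show the lookup; in practice the dictionary and the pose list would come from the model's forward pass, whose exact call signature and pose ordering are not shown here and should be checked in the repository code.

```python
def split_estimates(depth_outputs, poses):
    """Pick the initial and refined disparity out of the depth dictionary.

    `depth_outputs` is assumed to be keyed by tuples as described above;
    `poses` is passed through unchanged.
    """
    initial_disp = depth_outputs[("disp", 0, 0)]  # estimate before refinement
    refined_disp = depth_outputs[("disp", 0, 1)]  # estimate after refinement
    return initial_disp, refined_disp, poses


# Stand-in values (nested lists instead of tensors) so the sketch runs
# without the model or PyTorch installed.
fake_depth_outputs = {
    ("disp", 0, 0): [[0.50, 0.25]],
    ("disp", 0, 1): [[0.48, 0.26]],
}
fake_poses = ["initial_pose_placeholder", "refined_pose_placeholder"]

initial, refined, poses = split_estimates(fake_depth_outputs, fake_poses)
print(initial, refined, len(poses))
```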

To run the demo, you can run the following command after downloading the pretrained model and placing it in the weights folder:

python demo.py --load_weights_folder weights/DualRefine_MR

To retrain the model as was done in the paper, you can follow the bash script `train.sh`, which trains the model on the KITTI dataset. This assumes that you have downloaded and prepared the KITTI dataset as described above and placed it in the `./kitti_data` folder. The training script saves the checkpoints in the `weights` folder.

Command line arguments that might be useful:

- `--batch_size`: default 12. You may need to reduce the batch size if your GPU does not have enough memory. We used an NVIDIA RTX 3090 GPU for the medium resolution model.
- `--mixed_precision`: recommended, as in the `./train.sh` script. Based on our experiments, it slightly reduces memory usage without any loss in accuracy.

Other ablation arguments can be found in the `dualrefine/options.py` file.
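As a rough illustration of how these two flags behave, here is a minimal `argparse` sketch. It is not the contents of `dualrefine/options.py`: the parser below is a hypothetical stand-in, and treating `--mixed_precision` as a boolean switch is an assumption. Only the flag names and the batch size default of 12 come from the text above.

```python
import argparse


def build_parser():
    """Hypothetical subset of the training options, for illustration only."""
    parser = argparse.ArgumentParser(description="Illustrative training options")
    parser.add_argument(
        "--batch_size", type=int, default=12,
        help="reduce this if the GPU runs out of memory",
    )
    parser.add_argument(
        "--mixed_precision", action="store_true",
        help="assumed to be an on/off switch for mixed-precision training",
    )
    return parser


# Simulate a command line that halves the batch size and enables mixed precision.
args = build_parser().parse_args(["--batch_size", "6", "--mixed_precision"])
print(args.batch_size, args.mixed_precision)
```

Halving the batch size is usually the first thing to try when training runs out of GPU memory, with mixed precision giving a smaller additional saving.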

While our DualRefine model demonstrates improved overall depth accuracy, we have observed some limitations in its performance. In particular, the model produces noisier results around dynamic objects and non-Lambertian surfaces.

We are currently researching and developing enhancements to address these challenges. As we make progress, we plan to update the model and share our findings in the future. By acknowledging these limitations, we hope to foster a more comprehensive understanding of the model's performance and encourage further research and collaboration within the community.

Please stay tuned for updates and feel free to contribute your insights and suggestions to help improve the model.

- Investigate other ablations for DEQ (Deep Equilibrium) implementations: Explore potential improvements by trying different DEQ implementations in the model.

- Address limitations in depth estimation for dynamic objects and non-Lambertian surfaces: Work on improving the model's performance for dynamic objects and non-Lambertian surfaces to enhance overall depth estimation accuracy.

This repository is built upon the following repositories:

We thank the authors for their excellent work.

Contacts:

If you have questions, please send an email to Antyanta Bangunharcana ([email protected])