Airflow DAG run marked success, but tasks not yet started or scheduled. #15559

Comments

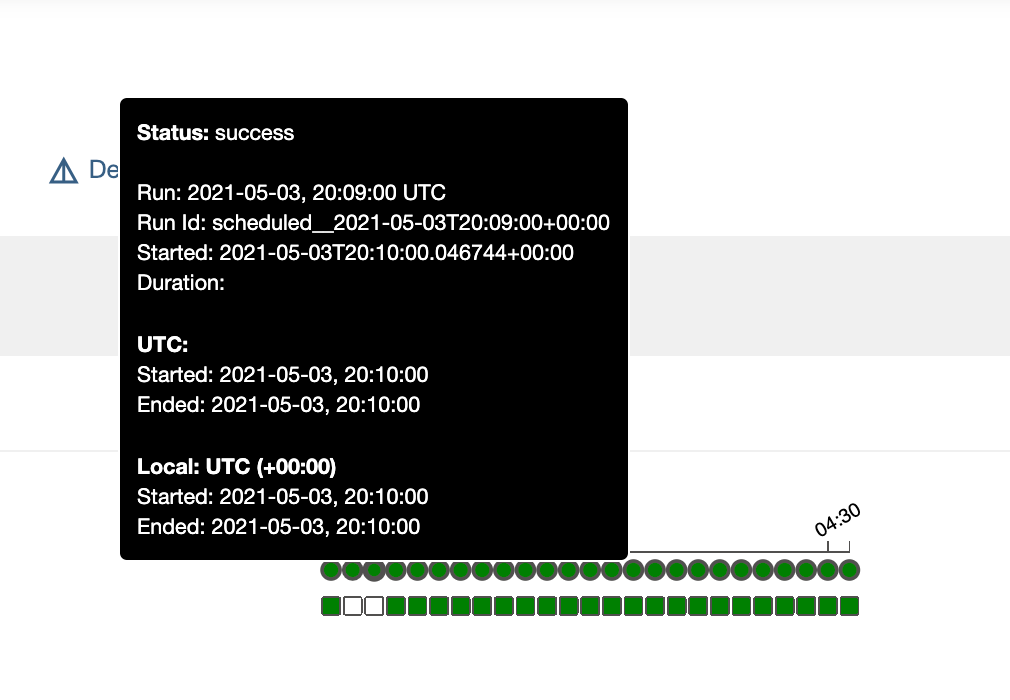

We've been experiencing the same issues (Airflow 2.0.2, 2x schedulers, MySQL 8). Furthermore, when a task has

I'm currently trying to recreate this by running some high-frequency DAGs with and without multiple schedulers. I'll update here with my findings.

Update: I was able to get this to happen by running 3 schedulers with a DAG running every 1 minute:

From the scheduler logs:

So it looks like the scheduler was running DagRun.update_state() before any task instances had been created, which would cause this DAG run to be marked as successful. Do you think this could be either:

Update 2: I did some more investigating today and found that while the DagRun and TaskInstances are created by one scheduler, they are soon after marked as successful by a different scheduler. Here are some selected logs from one occurrence of this issue to demonstrate this (I added debug log entries to the TaskInstance and DagRun constructors so that I could see when and where they were being created):

airflow-scheduler-1:

airflow-scheduler-2:

So within ~30ms of the DagRun being created, another scheduler instance marked it as complete based on having no tasks.
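To illustrate why a run with no visible task instances can end up "successful", here is a minimal sketch of a state decision in the style described above. It is not the Airflow implementation: `decide_run_state` and the state sets are hypothetical names made up for this example. The point is only that a check of the form "no unfinished tasks and all leaf tasks succeeded" is vacuously true for an empty list.

```python
# Hypothetical, simplified stand-in for a DagRun state decision.
# These names are assumptions for illustration, not Airflow's API.
SUCCESS_STATES = {"success", "skipped"}
FAILED_STATES = {"failed", "upstream_failed"}
FINISHED_STATES = SUCCESS_STATES | FAILED_STATES


def decide_run_state(ti_states):
    """Return the run state implied by the task instance states that were read."""
    unfinished = [s for s in ti_states if s not in FINISHED_STATES]
    if unfinished:
        return "running"
    if any(s in FAILED_STATES for s in ti_states):
        return "failed"
    # all() over an empty iterable is True, so an empty list lands here.
    if all(s in SUCCESS_STATES for s in ti_states):
        return "success"
    return "running"


# A scheduler that reads zero task instances for a fresh run:
empty_result = decide_run_state([])
# A scheduler that can see the newly created, not yet started task:
visible_result = decide_run_state(["queued"])
```

With these definitions `empty_result` is "success" and `visible_result` is "running", which matches the symptom in the logs: the outcome depends entirely on whether the reading scheduler can see the task instance rows.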

Okay, so we dug into this and here's what we found (TL;DR: we think we're getting bitten by MySQL's default isolation level of REPEATABLE_READ):

Here we execute a query to get the recent DagRuns for

Next we wait ~5 mins, and we run this query again but with

So, there are some new DagRuns, great. When the scheduler goes to get the related task instances (https://github.com/apache/airflow/blob/master/airflow/models/dagrun.py#L307-L310) it will execute the query, but in the original snapshot:

As we can see in ^ This query can only see the TIs up until 13:37:00, so it finds 0 tasks for the recent runs, which means this https://github.com/apache/airflow/blob/master/airflow/models/dagrun.py#L444 will mark the DAG run as successful.
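The snapshot effect described above can be sketched with a toy in-memory model. This is not MySQL or Airflow code: `ToyDatabase`, `Transaction`, and `run_scenario` are hypothetical names, and the model makes one loud assumption, namely that the DagRun query behaves like an InnoDB locking read (which returns the latest committed rows) while the task instance query is a plain consistent read served from the transaction's snapshot under REPEATABLE READ.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDatabase:
    """Append-only store; every row remembers the commit number that wrote it."""
    commit_seq: int = 0
    dag_runs: list = field(default_factory=list)        # (commit_seq, run_id)
    task_instances: list = field(default_factory=list)  # (commit_seq, run_id, task_id)

    def commit_dag_run(self, run_id, task_ids):
        # One commit creates the run and its task instances together.
        self.commit_seq += 1
        self.dag_runs.append((self.commit_seq, run_id))
        for task_id in task_ids:
            self.task_instances.append((self.commit_seq, run_id, task_id))


class Transaction:
    """Toy long-lived transaction with one of two isolation levels."""

    def __init__(self, db, isolation):
        self.db = db
        self.isolation = isolation
        self.snapshot = None  # fixed at the first consistent read (REPEATABLE READ only)

    def _visible_up_to(self):
        if self.isolation == "READ COMMITTED":
            return self.db.commit_seq  # fresh view on every statement
        if self.snapshot is None:
            self.snapshot = self.db.commit_seq
        return self.snapshot

    def latest_dag_runs(self):
        # Assumption: modelled as a locking read, so it sees all committed runs.
        return [run_id for _, run_id in self.db.dag_runs]

    def task_instances_for(self, run_id):
        limit = self._visible_up_to()
        return [t for seq, r, t in self.db.task_instances if r == run_id and seq <= limit]


def run_scenario(isolation):
    db = ToyDatabase()
    tx = Transaction(db, isolation)
    db.commit_dag_run("run_1", ["task_a"])
    tx.task_instances_for("run_1")           # first read fixes the snapshot
    db.commit_dag_run("run_2", ["task_a"])   # committed by another scheduler
    return tx.latest_dag_runs(), tx.task_instances_for("run_2")
```

Calling `run_scenario("REPEATABLE READ")` yields both runs but an empty task instance list for `run_2`, while `run_scenario("READ COMMITTED")` returns `["task_a"]` for the same run. That is the mismatch that lets a run with real tasks look empty to a second scheduler.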

And confirmed: with READ_COMMITTED, we get the expected results:

wait a few minutes

Just following up to confirm that switching our database's isolation level from

It's worth noting that the default for Postgres is

@trollhe, could you confirm the following?

Fixing this Issue

I think there are a few possible ways to "fix" this issue.

Any thoughts on the preferred fix? Feel free to assign this to me and I can implement one of these fixes this week.

Hi team,

How can this be fixed?
