diff --git a/docs/source/en/basics/booster_plugins.md b/docs/source/en/basics/booster_plugins.md

index fa360a4b9213..55f1b4f53721 100644

--- a/docs/source/en/basics/booster_plugins.md

+++ b/docs/source/en/basics/booster_plugins.md

@@ -58,7 +58,11 @@ This plugin implements Zero-3 with chunk-based and heterogeneous memory manageme

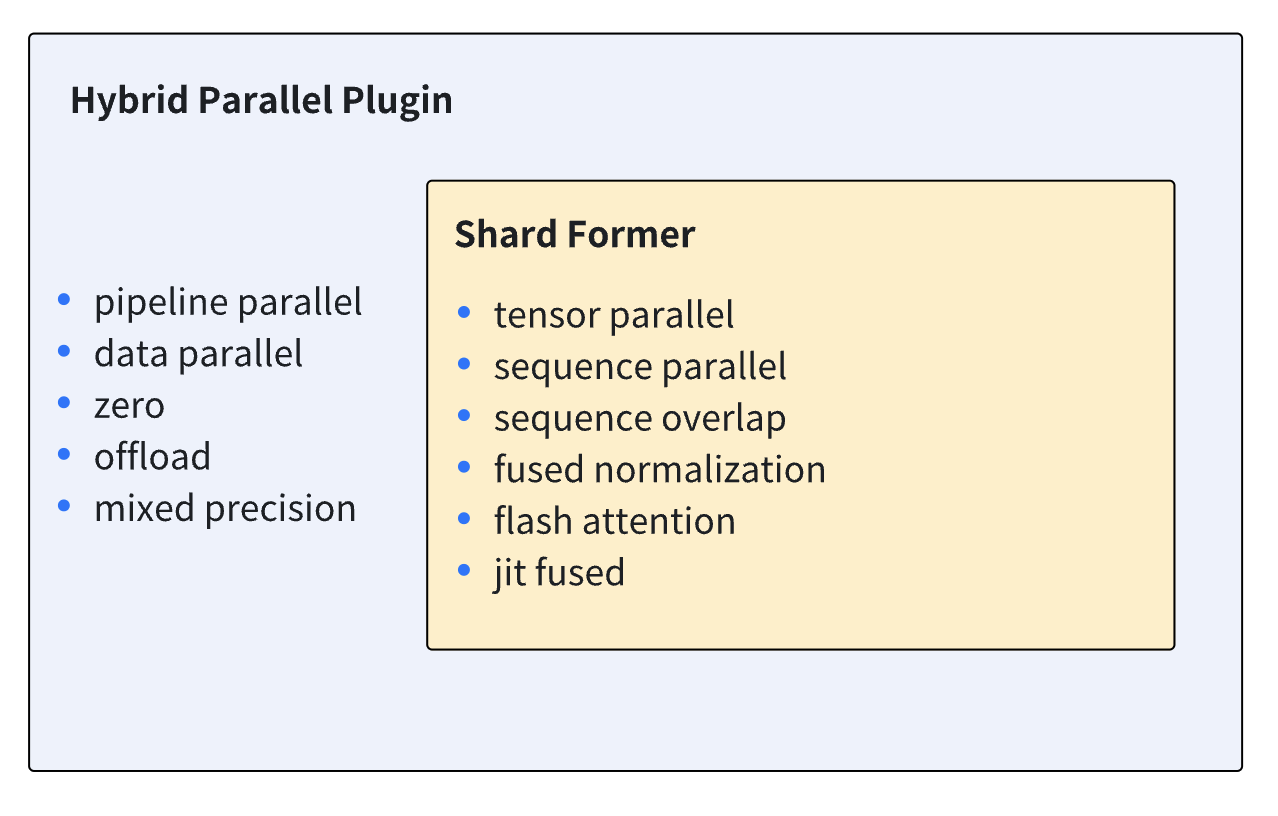

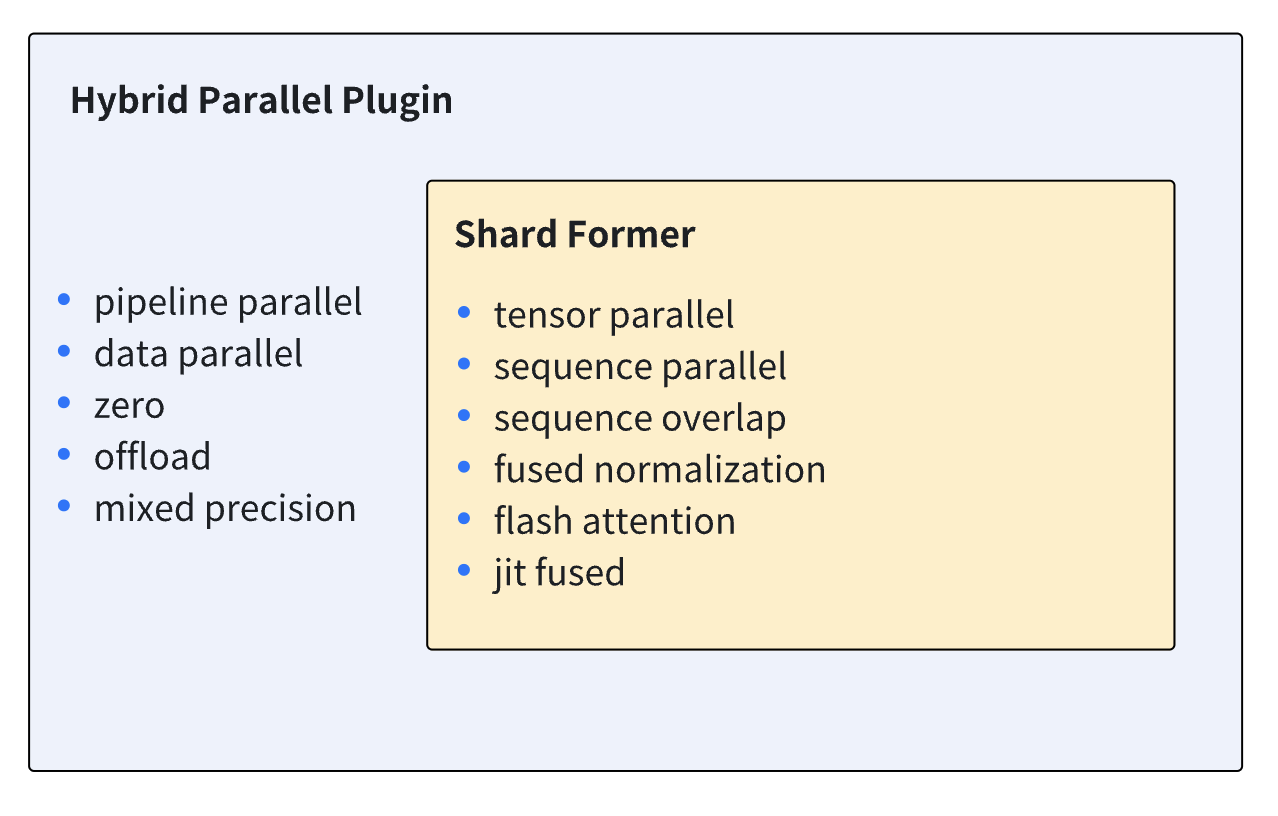

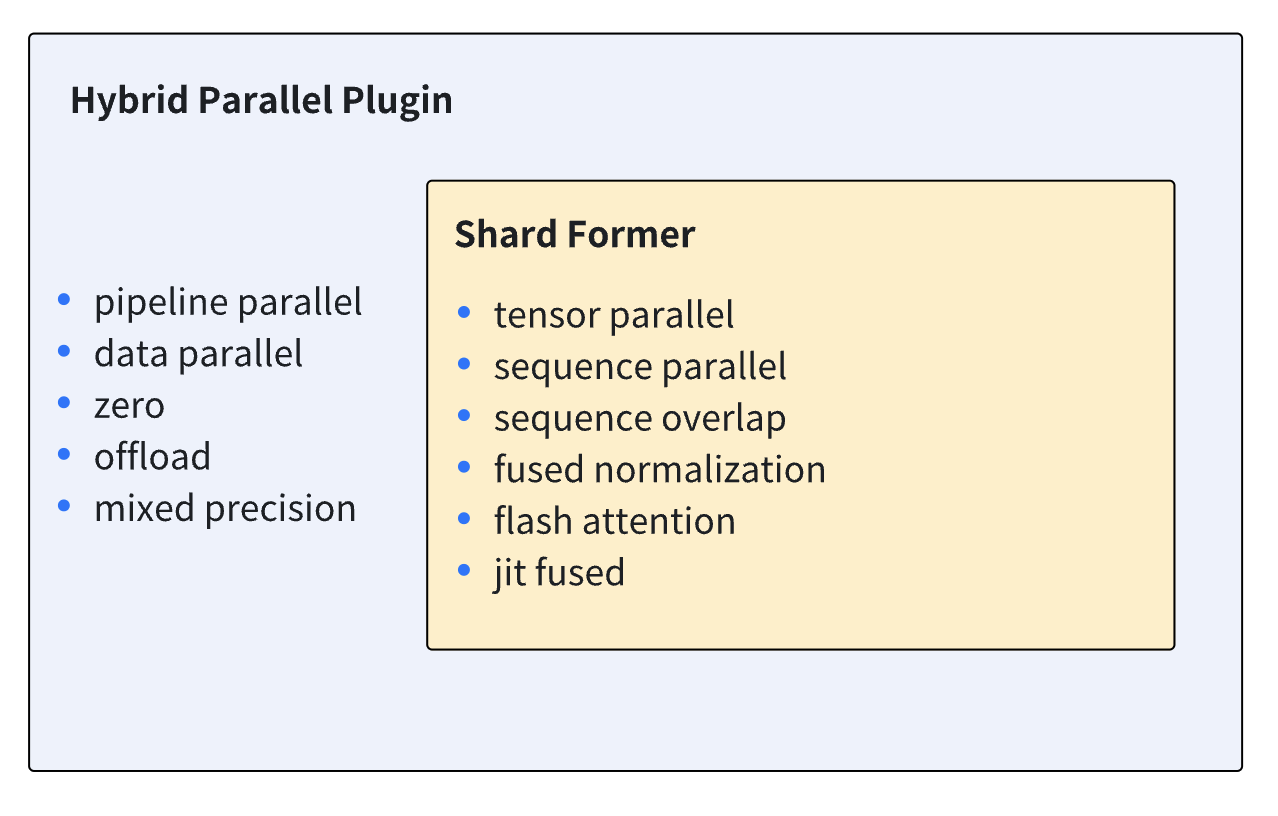

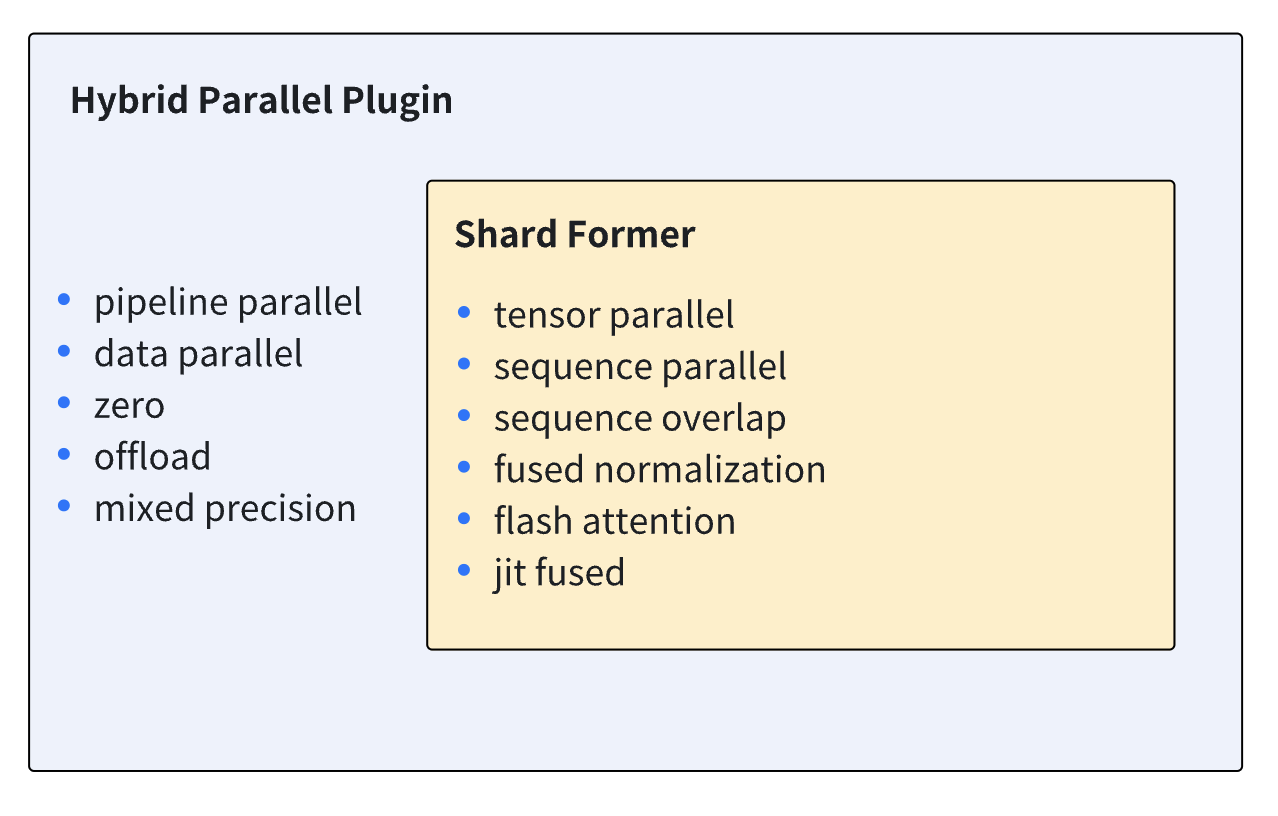

This plugin implements the combination of various parallel training strategies and optimization tools. The features of HybridParallelPlugin can be generally divided into four parts:

-1. Shardformer: This plugin provides an entrance to Shardformer, which controls model sharding under tensor parallel and pipeline parallel setting. Shardformer also overloads the logic of model's forward/backward process to ensure the smooth working of tp/pp. Also, optimization tools including fused normalization, flash attention (xformers), JIT and sequence parallel are injected into the overloaded forward/backward method by Shardformer. More details can be found in chapter [Shardformer Doc](../features/shardformer.md).

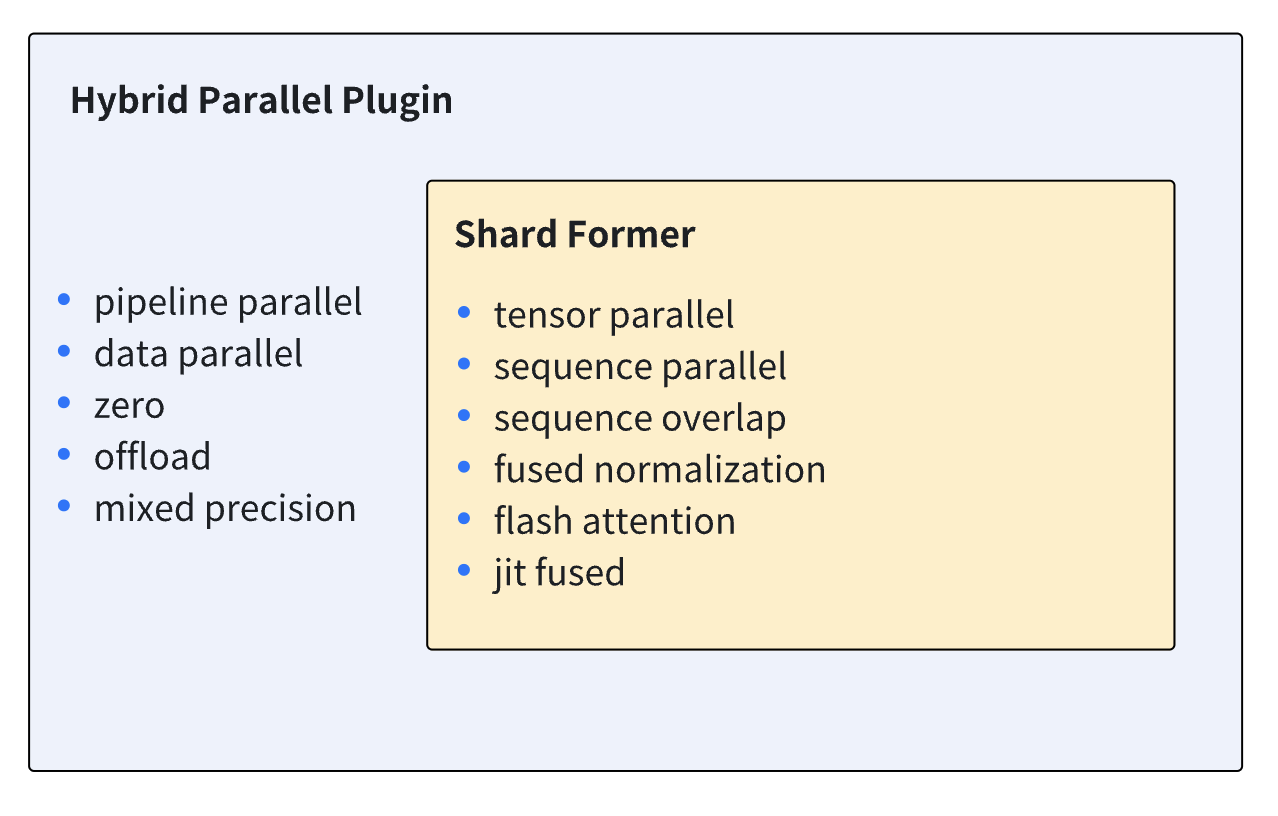

+1. Shardformer: This plugin provides an entrance to Shardformer, which controls model sharding under tensor parallel and pipeline parallel setting. Shardformer also overloads the logic of model's forward/backward process to ensure the smooth working of tp/pp. Also, optimization tools including fused normalization, flash attention (xformers), JIT and sequence parallel are injected into the overloaded forward/backward method by Shardformer. More details can be found in chapter [Shardformer Doc](../features/shardformer.md). The diagram below shows the features supported by shardformer together with hybrid parallel plugin.

+

+

+

+

+

+

+

+ +

+ +

+