diff --git a/.github/workflows/doc_test_on_pr.yml b/.github/workflows/doc_test_on_pr.yml

index 8afc46b87aa2..27f7e76af4fe 100644

--- a/.github/workflows/doc_test_on_pr.yml

+++ b/.github/workflows/doc_test_on_pr.yml

@@ -56,7 +56,7 @@ jobs:

needs: detect-changed-doc

runs-on: [self-hosted, gpu]

container:

- image: hpcaitech/pytorch-cuda:2.0.0-11.7.0

+ image: hpcaitech/pytorch-cuda:2.1.0-12.1.0

options: --gpus all --rm

timeout-minutes: 20

defaults:

diff --git a/.github/workflows/release_docker_after_publish.yml b/.github/workflows/release_docker_after_publish.yml

index 6c8df9730b0d..0792544bf403 100644

--- a/.github/workflows/release_docker_after_publish.yml

+++ b/.github/workflows/release_docker_after_publish.yml

@@ -24,7 +24,7 @@ jobs:

version=$(cat version.txt)

tag=hpcaitech/colossalai:$version

latest=hpcaitech/colossalai:latest

- docker build --build-arg http_proxy=http://172.17.0.1:7890 --build-arg https_proxy=http://172.17.0.1:7890 --build-arg VERSION=v${version} -t $tag ./docker

+ docker build --build-arg VERSION=v${version} -t $tag ./docker

docker tag $tag $latest

echo "tag=${tag}" >> $GITHUB_OUTPUT

echo "latest=${latest}" >> $GITHUB_OUTPUT

diff --git a/LICENSE b/LICENSE

index 47197afe6644..f0b2ffa97953 100644

--- a/LICENSE

+++ b/LICENSE

@@ -552,3 +552,18 @@ Copyright 2021- HPC-AI Technology Inc. All rights reserved.

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

THE SOFTWARE.

+ ---------------- LICENSE FOR Hugging Face accelerate ----------------

+

+ Copyright 2021 The HuggingFace Team

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/README.md b/README.md

index c1e2da0d406f..9e215df63196 100644

--- a/README.md

+++ b/README.md

@@ -25,6 +25,8 @@

## Latest News

+* [2024/04] [Open-Sora Unveils Major Upgrade: Embracing Open Source with Single-Shot 16-Second Video Generation and 720p Resolution](https://hpc-ai.com/blog/open-soras-comprehensive-upgrade-unveiled-embracing-16-second-video-generation-and-720p-resolution-in-open-source)

+* [2024/04] [Most cost-effective solutions for inference, fine-tuning and pretraining, tailored to LLaMA3 series](https://hpc-ai.com/blog/most-cost-effective-solutions-for-inference-fine-tuning-and-pretraining-tailored-to-llama3-series)

* [2024/03] [314 Billion Parameter Grok-1 Inference Accelerated by 3.8x, Efficient and Easy-to-Use PyTorch+HuggingFace version is Here](https://hpc-ai.com/blog/314-billion-parameter-grok-1-inference-accelerated-by-3.8x-efficient-and-easy-to-use-pytorchhuggingface-version-is-here)

* [2024/03] [Open-Sora: Revealing Complete Model Parameters, Training Details, and Everything for Sora-like Video Generation Models](https://hpc-ai.com/blog/open-sora-v1.0)

* [2024/03] [Open-Sora:Sora Replication Solution with 46% Cost Reduction, Sequence Expansion to Nearly a Million](https://hpc-ai.com/blog/open-sora)

@@ -131,7 +133,7 @@ distributed training and inference in a few lines.

[Open-Sora](https://github.com/hpcaitech/Open-Sora):Revealing Complete Model Parameters, Training Details, and Everything for Sora-like Video Generation Models

[[code]](https://github.com/hpcaitech/Open-Sora)

-[[blog]](https://hpc-ai.com/blog/open-sora-v1.0)

+[[blog]](https://hpc-ai.com/blog/open-soras-comprehensive-upgrade-unveiled-embracing-16-second-video-generation-and-720p-resolution-in-open-source)

[[HuggingFace model weights]](https://huggingface.co/hpcai-tech/Open-Sora)

[[Demo]](https://github.com/hpcaitech/Open-Sora?tab=readme-ov-file#-latest-demo)

diff --git a/applications/Colossal-LLaMA/colossal_llama/dataset/loader.py b/applications/Colossal-LLaMA/colossal_llama/dataset/loader.py

index 327651f4e645..abe0fd51a4af 100644

--- a/applications/Colossal-LLaMA/colossal_llama/dataset/loader.py

+++ b/applications/Colossal-LLaMA/colossal_llama/dataset/loader.py

@@ -80,15 +80,19 @@ def __call__(self, instances: Sequence[Dict[str, List[int]]]) -> Dict[str, torch

# `List[torch.Tensor]`

batch_input_ids = [

- torch.LongTensor(instance["input_ids"][: self.max_length])

- if len(instance["input_ids"]) > self.max_length

- else torch.LongTensor(instance["input_ids"])

+ (

+ torch.LongTensor(instance["input_ids"][: self.max_length])

+ if len(instance["input_ids"]) > self.max_length

+ else torch.LongTensor(instance["input_ids"])

+ )

for instance in instances

]

batch_labels = [

- torch.LongTensor(instance["labels"][: self.max_length])

- if len(instance["labels"]) > self.max_length

- else torch.LongTensor(instance["labels"])

+ (

+ torch.LongTensor(instance["labels"][: self.max_length])

+ if len(instance["labels"]) > self.max_length

+ else torch.LongTensor(instance["labels"])

+ )

for instance in instances

]

diff --git a/applications/Colossal-LLaMA/train.py b/applications/Colossal-LLaMA/train.py

index dcd7be9f4e4c..43a360a9a49c 100644

--- a/applications/Colossal-LLaMA/train.py

+++ b/applications/Colossal-LLaMA/train.py

@@ -136,7 +136,7 @@ def main() -> None:

# ==============================

# Initialize Distributed Training

# ==============================

- colossalai.launch_from_torch({})

+ colossalai.launch_from_torch()

accelerator = get_accelerator()

coordinator = DistCoordinator()

@@ -253,9 +253,11 @@ def main() -> None:

coordinator.print_on_master(f"Model params: {format_numel_str(model_numel)}")

optimizer = HybridAdam(

- model_params=filter(lambda p: p.requires_grad, model.parameters())

- if args.freeze_non_embeds_params

- else model.parameters(),

+ model_params=(

+ filter(lambda p: p.requires_grad, model.parameters())

+ if args.freeze_non_embeds_params

+ else model.parameters()

+ ),

lr=args.lr,

betas=(0.9, 0.95),

weight_decay=args.weight_decay,

diff --git a/applications/ColossalChat/benchmarks/benchmark_ppo.py b/applications/ColossalChat/benchmarks/benchmark_ppo.py

index e1b7a313f981..00edf053410f 100644

--- a/applications/ColossalChat/benchmarks/benchmark_ppo.py

+++ b/applications/ColossalChat/benchmarks/benchmark_ppo.py

@@ -66,7 +66,7 @@ def benchmark_train(args):

# ==============================

# Initialize Distributed Training

# ==============================

- colossalai.launch_from_torch({})

+ colossalai.launch_from_torch()

coordinator = DistCoordinator()

# ======================================================

diff --git a/applications/ColossalChat/examples/training_scripts/train_dpo.py b/applications/ColossalChat/examples/training_scripts/train_dpo.py

index b9287eb1a407..f06c23a9f704 100755

--- a/applications/ColossalChat/examples/training_scripts/train_dpo.py

+++ b/applications/ColossalChat/examples/training_scripts/train_dpo.py

@@ -37,7 +37,7 @@ def train(args):

# ==============================

# Initialize Distributed Training

# ==============================

- colossalai.launch_from_torch({})

+ colossalai.launch_from_torch()

coordinator = DistCoordinator()

# ==============================

diff --git a/applications/ColossalChat/examples/training_scripts/train_ppo.py b/applications/ColossalChat/examples/training_scripts/train_ppo.py

index 7c91fa347847..727cff7ca564 100755

--- a/applications/ColossalChat/examples/training_scripts/train_ppo.py

+++ b/applications/ColossalChat/examples/training_scripts/train_ppo.py

@@ -39,7 +39,7 @@ def train(args):

# ==============================

# Initialize Distributed Training

# ==============================

- colossalai.launch_from_torch({})

+ colossalai.launch_from_torch()

coordinator = DistCoordinator()

# ======================================================

diff --git a/applications/ColossalChat/examples/training_scripts/train_rm.py b/applications/ColossalChat/examples/training_scripts/train_rm.py

index a0c710f2bb7f..364198c1d78b 100755

--- a/applications/ColossalChat/examples/training_scripts/train_rm.py

+++ b/applications/ColossalChat/examples/training_scripts/train_rm.py

@@ -34,7 +34,7 @@ def train(args):

# ==============================

# Initialize Distributed Training

# ==============================

- colossalai.launch_from_torch({})

+ colossalai.launch_from_torch()

coordinator = DistCoordinator()

# ======================================================

diff --git a/applications/ColossalChat/examples/training_scripts/train_sft.py b/applications/ColossalChat/examples/training_scripts/train_sft.py

index fcd1a429cc5f..ae20f2abcb5f 100755

--- a/applications/ColossalChat/examples/training_scripts/train_sft.py

+++ b/applications/ColossalChat/examples/training_scripts/train_sft.py

@@ -29,7 +29,7 @@ def train(args):

# ==============================

# Initialize Distributed Training

# ==============================

- colossalai.launch_from_torch({})

+ colossalai.launch_from_torch()

coordinator = DistCoordinator()

# ==============================

diff --git a/applications/ColossalEval/examples/dataset_evaluation/inference.py b/applications/ColossalEval/examples/dataset_evaluation/inference.py

index 13bbb12b6990..a7307635d333 100644

--- a/applications/ColossalEval/examples/dataset_evaluation/inference.py

+++ b/applications/ColossalEval/examples/dataset_evaluation/inference.py

@@ -81,7 +81,7 @@ def rm_and_merge(

def main(args):

- colossalai.launch_from_torch(config={}, seed=42)

+ colossalai.launch_from_torch(seed=42)

accelerator = get_accelerator()

world_size = dist.get_world_size()

diff --git a/applications/ColossalEval/examples/gpt_evaluation/inference.py b/applications/ColossalEval/examples/gpt_evaluation/inference.py

index 5b09f9de8da6..408ba3e7b084 100644

--- a/applications/ColossalEval/examples/gpt_evaluation/inference.py

+++ b/applications/ColossalEval/examples/gpt_evaluation/inference.py

@@ -81,7 +81,7 @@ def rm_and_merge(

def main(args):

- colossalai.launch_from_torch(config={}, seed=42)

+ colossalai.launch_from_torch(seed=42)

world_size = dist.get_world_size()

rank = dist.get_rank()

diff --git a/applications/ColossalMoE/infer.py b/applications/ColossalMoE/infer.py

index c175fe9e3f3f..543c434d2a99 100644

--- a/applications/ColossalMoE/infer.py

+++ b/applications/ColossalMoE/infer.py

@@ -57,7 +57,7 @@ def main():

args = parse_args()

# Launch ColossalAI

- colossalai.launch_from_torch(config={}, seed=args.seed)

+ colossalai.launch_from_torch(seed=args.seed)

coordinator = DistCoordinator()

config = MixtralConfig.from_pretrained(args.model_name)

@@ -96,7 +96,11 @@ def main():

if coordinator.rank == 0:

text = ["Hello my name is"]

else:

- text = ["What's the largest country in the world?", "How many people live in China?", "帮我续写这首诗:离离原上草"]

+ text = [

+ "What's the largest country in the world?",

+ "How many people live in China?",

+ "帮我续写这首诗:离离原上草",

+ ]

tokenizer.pad_token = tokenizer.unk_token

inputs = tokenizer(text, return_tensors="pt", padding=True).to(torch.cuda.current_device())

diff --git a/applications/ColossalMoE/tests/test_mixtral_layer.py b/applications/ColossalMoE/tests/test_mixtral_layer.py

index 57589ab20d22..cbb70f195258 100644

--- a/applications/ColossalMoE/tests/test_mixtral_layer.py

+++ b/applications/ColossalMoE/tests/test_mixtral_layer.py

@@ -50,7 +50,7 @@ def check_mixtral_moe_layer():

def run_dist(rank: int, world_size: int, port: int):

- colossalai.launch({}, rank, world_size, "localhost", port)

+ colossalai.launch(rank, world_size, "localhost", port)

check_mixtral_moe_layer()

diff --git a/applications/ColossalMoE/tests/test_moe_checkpoint.py b/applications/ColossalMoE/tests/test_moe_checkpoint.py

index 822e7410f016..074dbf835fa6 100644

--- a/applications/ColossalMoE/tests/test_moe_checkpoint.py

+++ b/applications/ColossalMoE/tests/test_moe_checkpoint.py

@@ -133,7 +133,7 @@ def check_mixtral_moe_layer():

def run_dist(rank: int, world_size: int, port: int):

- colossalai.launch({}, rank, world_size, "localhost", port)

+ colossalai.launch(rank, world_size, "localhost", port)

check_mixtral_moe_layer()

diff --git a/applications/ColossalMoE/train.py b/applications/ColossalMoE/train.py

index 850236726a27..d2789d644ca5 100644

--- a/applications/ColossalMoE/train.py

+++ b/applications/ColossalMoE/train.py

@@ -145,7 +145,7 @@ def main():

args = parse_args()

# Launch ColossalAI

- colossalai.launch_from_torch(config={}, seed=args.seed)

+ colossalai.launch_from_torch(seed=args.seed)

coordinator = DistCoordinator()

# Set plugin

@@ -195,9 +195,9 @@ def main():

lr_scheduler = CosineAnnealingWarmupLR(

optimizer=optimizer,

total_steps=args.num_epochs * len(dataloader),

- warmup_steps=args.warmup_steps

- if args.warmup_steps is not None

- else int(args.num_epochs * len(dataloader) * 0.025),

+ warmup_steps=(

+ args.warmup_steps if args.warmup_steps is not None else int(args.num_epochs * len(dataloader) * 0.025)

+ ),

eta_min=0.1 * args.lr,

)

diff --git a/colossalai/auto_parallel/offload/amp_optimizer.py b/colossalai/auto_parallel/offload/amp_optimizer.py

index fe8439269f48..ab02de7ce109 100644

--- a/colossalai/auto_parallel/offload/amp_optimizer.py

+++ b/colossalai/auto_parallel/offload/amp_optimizer.py

@@ -126,7 +126,7 @@ def loss_scale(self):

return self.grad_scaler.scale.item()

def zero_grad(self, *args, **kwargs):

- self.module.overflow_counter = torch.cuda.IntTensor([0])

+ self.module.overflow_counter = torch.tensor([0], dtype=torch.int, device=get_accelerator().get_current_device())

return self.optim.zero_grad(set_to_none=True)

def step(self, *args, **kwargs):

diff --git a/colossalai/auto_parallel/offload/base_offload_module.py b/colossalai/auto_parallel/offload/base_offload_module.py

index 60de7743a52e..8afd29e436d7 100644

--- a/colossalai/auto_parallel/offload/base_offload_module.py

+++ b/colossalai/auto_parallel/offload/base_offload_module.py

@@ -4,7 +4,7 @@

import torch

import torch.nn as nn

-from colossalai.utils import _cast_float

+from colossalai.utils import _cast_float, get_current_device

from colossalai.utils.common import free_storage

from .region_manager import RegionManager

@@ -25,7 +25,7 @@ def __init__(self, model: nn.Module, region_manager: RegionManager, is_sync=True

self.model = model

self.region_manager = region_manager

self.grad_hook_list = []

- self.overflow_counter = torch.cuda.IntTensor([0])

+ self.overflow_counter = torch.tensor([0], dtype=torch.int, device=get_current_device())

self.grad_offload_stream = torch.cuda.current_stream() if is_sync else GlobalRuntimeInfo.d2h_stream

diff --git a/colossalai/booster/booster.py b/colossalai/booster/booster.py

index d73bc5babd80..56d8a0935f10 100644

--- a/colossalai/booster/booster.py

+++ b/colossalai/booster/booster.py

@@ -8,9 +8,18 @@

from torch.optim.lr_scheduler import _LRScheduler as LRScheduler

from torch.utils.data import DataLoader

+SUPPORT_PEFT = False

+try:

+ import peft

+

+ SUPPORT_PEFT = True

+except ImportError:

+ pass

+

import colossalai.interface.pretrained as pretrained_utils

from colossalai.checkpoint_io import GeneralCheckpointIO

from colossalai.interface import ModelWrapper, OptimizerWrapper

+from colossalai.quantization import BnbQuantizationConfig

from .accelerator import Accelerator

from .mixed_precision import MixedPrecision, mixed_precision_factory

@@ -221,6 +230,56 @@ def no_sync(self, model: nn.Module = None, optimizer: OptimizerWrapper = None) -

assert self.plugin.support_no_sync(), f"The plugin {self.plugin.__class__.__name__} does not support no_sync."

return self.plugin.no_sync(model, optimizer)

+ def enable_lora(

+ self,

+ model: nn.Module,

+ pretrained_dir: Optional[str] = None,

+ lora_config: "peft.LoraConfig" = None,

+ bnb_quantization_config: Optional[BnbQuantizationConfig] = None,

+ quantize=False,

+ ) -> nn.Module:

+ """

+ Wrap the passed in model with LoRA modules for training. If pretrained directory is provided, lora configs and weights are loaded from that directory.

+ Lora in ColossalAI is implemented using Huggingface peft library, so the arguments for Lora configuration are same as those of peft.

+

+ Args:

+ model (nn.Module): The model to be appended with LoRA modules.

+ pretrained_dir(str, optional): The path to the pretrained directory, can be a local directory

+ or model_id of a PEFT configuration hosted inside a model repo on the Hugging Face Hub.

+ When set to None, create new lora configs and weights for the model using the passed in lora_config. Defaults to None.

+ lora_config: (peft.LoraConfig, optional): Passed in LoraConfig for peft. Defaults to None.

+ """

+ if not SUPPORT_PEFT:

+ raise ImportError("Please install Huggingface Peft library to enable lora features in ColossalAI!")

+

+ assert self.plugin is not None, f"Lora can only be enabled when a plugin is provided."

+ assert self.plugin.support_lora(), f"The plugin {self.plugin.__class__.__name__} does not support lora."

+ if pretrained_dir is None:

+ assert (

+ lora_config is not None

+ ), "Please provide configuration for Lora when pretrained directory path isn't passed in."

+ assert isinstance(

+ lora_config, peft.LoraConfig

+ ), "The passed in configuration should be an instance of peft.LoraConfig."

+ if lora_config is None:

+ assert (

+ pretrained_dir is not None

+ ), "Please provide pretrained directory path if not passing in lora configuration."

+ if quantize is True:

+ if bnb_quantization_config is not None:

+ warnings.warn(

+ "User defined BnbQuantizationConfig is not fully tested in ColossalAI. Use it at your own risk."

+ )

+ else:

+ bnb_quantization_config = BnbQuantizationConfig(

+ load_in_4bit=True,

+ bnb_4bit_compute_dtype=torch.bfloat16,

+ bnb_4bit_use_double_quant=True,

+ bnb_4bit_quant_type="nf4",

+ )

+

+ return self.plugin.enable_lora(model, pretrained_dir, lora_config, bnb_quantization_config)

+

def load_model(self, model: Union[nn.Module, ModelWrapper], checkpoint: str, strict: bool = True) -> None:

"""Load model from checkpoint.

@@ -323,3 +382,20 @@ def load_lr_scheduler(self, lr_scheduler: LRScheduler, checkpoint: str) -> None:

checkpoint (str): Path to the checkpoint. It must be a local file path.

"""

self.checkpoint_io.load_lr_scheduler(lr_scheduler, checkpoint)

+

+ def save_lora_as_pretrained(

+ self, model: Union[nn.Module, ModelWrapper], checkpoint: str, use_safetensors: bool = False

+ ) -> None:

+ """

+ Save the lora adapters and adapter configuration file to a pretrained checkpoint directory.

+

+ Args:

+ model (Union[nn.Module, ModelWrapper]): A model boosted by Booster.

+ checkpoint (str): Path to the checkpoint directory. It must be a local path.

+ use_safetensors (bool, optional): Whether to use safe tensors when saving. Defaults to False.

+ """

+ if not SUPPORT_PEFT:

+ raise ImportError("Please install Huggingface Peft library to enable lora features in ColossalAI!")

+ assert self.plugin is not None, f"Lora can only be enabled when a plugin is provided."

+ assert self.plugin.support_lora(), f"The plugin {self.plugin.__class__.__name__} does not support lora."

+ self.checkpoint_io.save_lora_as_pretrained(model, checkpoint, use_safetensors)

diff --git a/colossalai/booster/plugin/gemini_plugin.py b/colossalai/booster/plugin/gemini_plugin.py

index a4861c1b6f35..d67fa3a24070 100644

--- a/colossalai/booster/plugin/gemini_plugin.py

+++ b/colossalai/booster/plugin/gemini_plugin.py

@@ -4,7 +4,7 @@

import random

from pathlib import Path

from types import MethodType

-from typing import Callable, Iterator, List, Optional, Tuple

+from typing import Callable, Dict, Iterator, List, Optional, Tuple

import numpy as np

import torch

@@ -446,6 +446,9 @@ def __del__(self):

def support_no_sync(self) -> bool:

return False

+ def support_lora(self) -> bool:

+ return False

+

def control_precision(self) -> bool:

return True

@@ -576,3 +579,8 @@ def get_checkpoint_io(self) -> CheckpointIO:

def no_sync(self, model: nn.Module, optimizer: OptimizerWrapper) -> Iterator[None]:

raise NotImplementedError

+

+ def enable_lora(

+ self, model: nn.Module, pretrained_dir: Optional[str] = None, lora_config: Optional[Dict] = None

+ ) -> nn.Module:

+ raise NotImplementedError

diff --git a/colossalai/booster/plugin/hybrid_parallel_plugin.py b/colossalai/booster/plugin/hybrid_parallel_plugin.py

index 5237734f0212..97057481e380 100644

--- a/colossalai/booster/plugin/hybrid_parallel_plugin.py

+++ b/colossalai/booster/plugin/hybrid_parallel_plugin.py

@@ -4,7 +4,7 @@

from contextlib import contextmanager

from functools import partial

from types import MethodType

-from typing import Any, Callable, Iterator, List, Optional, OrderedDict, Tuple, Union

+from typing import Any, Callable, Dict, Iterator, List, Optional, OrderedDict, Tuple, Union

import numpy as np

import torch

@@ -1156,6 +1156,9 @@ def control_precision(self) -> bool:

def support_no_sync(self) -> bool:

return True

+ def support_lora(self) -> bool:

+ return False

+

def control_checkpoint_io(self) -> bool:

return True

@@ -1356,3 +1359,8 @@ def no_sync(self, model: Module, optimizer: OptimizerWrapper) -> Iterator[None]:

self.zero_stage != 2

), "ZERO2 is not compatible with no_sync function, please run gradient accumulation with gradient synchronization allowed."

return optimizer.no_sync() if isinstance(optimizer, HybridParallelZeroOptimizer) else model.no_sync()

+

+ def enable_lora(

+ self, model: Module, pretrained_dir: Optional[str] = None, lora_config: Optional[Dict] = None

+ ) -> Module:

+ raise NotImplementedError

diff --git a/colossalai/booster/plugin/low_level_zero_plugin.py b/colossalai/booster/plugin/low_level_zero_plugin.py

index 650cedf65a52..68127db5b121 100644

--- a/colossalai/booster/plugin/low_level_zero_plugin.py

+++ b/colossalai/booster/plugin/low_level_zero_plugin.py

@@ -1,12 +1,15 @@

+import enum

import logging

import os

+import warnings

from functools import partial

from pathlib import Path

from types import MethodType

-from typing import Callable, Iterator, List, Optional, Tuple

+from typing import Callable, Dict, Iterator, List, Optional, Tuple

import torch

import torch.nn as nn

+from torch.nn import Parameter

from torch.optim import Optimizer

from torch.optim.lr_scheduler import _LRScheduler as LRScheduler

from torch.utils._pytree import tree_map

@@ -25,6 +28,7 @@

sharded_optimizer_loading_epilogue,

)

from colossalai.interface import AMPModelMixin, ModelWrapper, OptimizerWrapper

+from colossalai.quantization import BnbQuantizationConfig, quantize_model

from colossalai.zero import LowLevelZeroOptimizer

from .dp_plugin_base import DPPluginBase

@@ -42,6 +46,12 @@ def _convert_floating_point(x, dtype: torch.dtype = torch.float16):

SUPPORTED_PRECISION = ["fp16", "bf16", "fp32"]

+class OptimizerParamCheckState(enum.Enum):

+ ORIGIN_PARAM_FINDED = 0

+ ORIGIN_PARAM_NOT_FIND = -1

+ LORA_PARM_EXISTED = -2

+

+

class LowLevelZeroModel(ModelWrapper, AMPModelMixin):

def __init__(self, module: nn.Module, precision: str) -> None:

super().__init__(module)

@@ -209,6 +219,19 @@ def load_sharded_model(

super().load_sharded_model(model, checkpoint_index_file, strict, use_safetensors, load_sub_module)

model.update_master_params()

+ def save_lora_as_pretrained(self, model, checkpoint, use_safetensors):

+ if os.path.isfile(checkpoint):

+ logging.error(f"Provided path ({checkpoint}) should be a directory, not a file")

+ return

+ from peft import PeftModel

+

+ assert isinstance(model, ModelWrapper), "Please boost the model before saving!"

+ peft_model = model.unwrap()

+ assert isinstance(

+ peft_model, PeftModel

+ ), "The model doesn't have lora adapters, please enable lora before saving."

+ return peft_model.save_pretrained(checkpoint, safe_serialization=use_safetensors)

+

class LowLevelZeroPlugin(DPPluginBase):

"""

@@ -288,6 +311,7 @@ def __init__(

cpu_offload=cpu_offload,

master_weights=master_weights,

)

+ self.lora_enabled = False

self.verbose = verbose

# set class name with stage, for better error message

@@ -299,6 +323,9 @@ def __del__(self):

def support_no_sync(self) -> bool:

return self.stage == 1

+ def support_lora(self) -> bool:

+ return False

+

def control_precision(self) -> bool:

return True

@@ -311,6 +338,79 @@ def control_device(self) -> bool:

def supported_devices(self) -> List[str]:

return ["cuda", "npu"]

+ def support_lora(self) -> bool:

+ return True

+

+ def enable_lora(

+ self,

+ model: nn.Module,

+ pretrained_dir: Optional[str] = None,

+ lora_config: Optional[Dict] = None,

+ bnb_quantization_config: Optional[BnbQuantizationConfig] = None,

+ ) -> nn.Module:

+ from peft import PeftModel, get_peft_model

+

+ assert not isinstance(model, LowLevelZeroModel), "Lora should be enabled before boosting the model."

+ self.lora_enabled = True

+ warnings.warn("You have enabled LoRa training. Please check the hyperparameters such as lr")

+

+ if bnb_quantization_config is not None:

+ model = quantize_model(model, bnb_quantization_config)

+

+ if pretrained_dir is None:

+ peft_model = get_peft_model(model, lora_config)

+ else:

+ peft_model = PeftModel.from_pretrained(model, pretrained_dir, is_trainable=True)

+ return peft_model

+

+ def get_param_group_id(self, optimizer: Optimizer, origin_param: Parameter):

+ origin_param_id = id(origin_param)

+ for group_id, param_group in enumerate(optimizer.param_groups):

+ for p in param_group["params"]:

+ if id(p) == origin_param_id:

+ return group_id

+ return -1

+

+ def get_param_group_id(self, optimizer: Optimizer, origin_param: Parameter, lora_param: Parameter):

+ origin_param_id = id(origin_param)

+ lora_param_id = id(lora_param)

+ target_group_id = None

+ for group_id, param_group in enumerate(optimizer.param_groups):

+ for p in param_group["params"]:

+ if id(p) == lora_param_id:

+ # check if the lora parameter exists.

+ return target_group_id, OptimizerParamCheckState.LORA_PARM_EXISTED

+ if id(p) == origin_param_id:

+ target_group_id = group_id

+ if target_group_id is not None:

+ return target_group_id, OptimizerParamCheckState.ORIGIN_PARAM_FINDED

+ else:

+ return target_group_id, OptimizerParamCheckState.ORIGIN_PARAM_NOT_FIND

+

+ def add_lora_params_to_optimizer(self, model, optimizer):

+ """add lora parameters to optimizer"""

+ name2param = {}

+ for name, param in model.named_parameters():

+ name2param[name] = param

+

+ for name, param in name2param.items():

+ if "lora_A" in name or "lora_B" in name:

+ origin_key = name.replace("lora_A.", "")

+ origin_key = origin_key.replace("lora_B.", "")

+ origin_key = origin_key.replace(f"{model.active_adapter}", "base_layer")

+ origin_param = name2param[origin_key]

+ group_id, check_state = self.get_param_group_id(optimizer, origin_param, param)

+ if check_state == OptimizerParamCheckState.ORIGIN_PARAM_NOT_FIND:

+ warnings.warn(

+ "Origin parameter {origin_key} related to {name} doesn't exist in optimizer param_groups."

+ )

+ elif (

+ check_state == OptimizerParamCheckState.ORIGIN_PARAM_FINDED

+ and group_id is not None

+ and group_id >= 0

+ ):

+ optimizer.param_groups[group_id]["params"].append(param)

+

def configure(

self,

model: nn.Module,

@@ -319,6 +419,15 @@ def configure(

dataloader: Optional[DataLoader] = None,

lr_scheduler: Optional[LRScheduler] = None,

) -> Tuple[nn.Module, OptimizerWrapper, Callable, DataLoader, LRScheduler]:

+ if self.lora_enabled:

+ from peft import PeftModel

+

+ assert isinstance(

+ model, PeftModel

+ ), "The model should have been wrapped as a PeftModel when self.lora_enabled is True"

+ if optimizer is not None:

+ self.add_lora_params_to_optimizer(model, optimizer)

+

if not isinstance(model, ModelWrapper):

model = LowLevelZeroModel(model, self.precision)

diff --git a/colossalai/booster/plugin/plugin_base.py b/colossalai/booster/plugin/plugin_base.py

index 4e570cbe8abc..6dc0c560d06d 100644

--- a/colossalai/booster/plugin/plugin_base.py

+++ b/colossalai/booster/plugin/plugin_base.py

@@ -1,5 +1,5 @@

from abc import ABC, abstractmethod

-from typing import Callable, Iterator, List, Optional, Tuple

+from typing import Callable, Dict, Iterator, List, Optional, Tuple

import torch.nn as nn

from torch.optim import Optimizer

@@ -33,6 +33,10 @@ def control_device(self) -> bool:

def support_no_sync(self) -> bool:

pass

+ @abstractmethod

+ def support_lora(self) -> bool:

+ pass

+

@abstractmethod

def configure(

self,

@@ -63,6 +67,12 @@ def no_sync(self, model: nn.Module, optimizer: OptimizerWrapper) -> Iterator[Non

Context manager to disable gradient synchronization.

"""

+ @abstractmethod

+ def enable_lora(self, model: nn.Module, pretrained_dir: str, lora_config: Dict) -> nn.Module:

+ """

+ Add LoRA modules to the model passed in. Should only be called in booster.enable_lora().

+ """

+

@abstractmethod

def prepare_dataloader(

self,

diff --git a/colossalai/booster/plugin/torch_ddp_plugin.py b/colossalai/booster/plugin/torch_ddp_plugin.py

index 738634473dbc..5116446a4295 100644

--- a/colossalai/booster/plugin/torch_ddp_plugin.py

+++ b/colossalai/booster/plugin/torch_ddp_plugin.py

@@ -1,4 +1,4 @@

-from typing import Callable, Iterator, List, Optional, Tuple

+from typing import Callable, Dict, Iterator, List, Optional, Tuple, Union

import torch.nn as nn

from torch.nn.parallel import DistributedDataParallel as DDP

@@ -9,6 +9,8 @@

from colossalai.checkpoint_io import CheckpointIO, GeneralCheckpointIO

from colossalai.cluster import DistCoordinator

from colossalai.interface import ModelWrapper, OptimizerWrapper

+from colossalai.quantization import BnbQuantizationConfig, quantize_model

+from colossalai.utils import get_current_device

from .dp_plugin_base import DPPluginBase

@@ -116,6 +118,22 @@ def load_sharded_optimizer(

assert isinstance(optimizer, OptimizerWrapper), "Please boost the optimizer before loading!"

super().load_sharded_optimizer(optimizer.unwrap(), index_file_path, prefix)

+ def save_lora_as_pretrained(

+ self, model: Union[nn.Module, ModelWrapper], checkpoint: str, use_safetensors: bool = False

+ ) -> None:

+ """

+ Save the lora adapters and adapter configuration file to checkpoint directory.

+ """

+ from peft import PeftModel

+

+ assert isinstance(model, ModelWrapper), "Please boost the model before saving!"

+ if self.coordinator.is_master():

+ peft_model = model.unwrap()

+ assert isinstance(

+ peft_model, PeftModel

+ ), "The model doesn't have lora adapters, please enable lora before saving."

+ peft_model.save_pretrained(save_directory=checkpoint, safe_serialization=use_safetensors)

+

class TorchDDPModel(ModelWrapper):

def __init__(self, module: nn.Module, *args, **kwargs) -> None:

@@ -173,6 +191,9 @@ def __init__(

def support_no_sync(self) -> bool:

return True

+ def support_lora(self) -> bool:

+ return True

+

def control_precision(self) -> bool:

return False

@@ -183,7 +204,7 @@ def control_device(self) -> bool:

return True

def supported_devices(self) -> List[str]:

- return ["cuda"]

+ return ["cuda", "npu"]

def configure(

self,

@@ -194,7 +215,7 @@ def configure(

lr_scheduler: Optional[LRScheduler] = None,

) -> Tuple[nn.Module, OptimizerWrapper, Callable, DataLoader, LRScheduler]:

# cast model to cuda

- model = model.cuda()

+ model = model.to(get_current_device())

# convert model to sync bn

model = nn.SyncBatchNorm.convert_sync_batchnorm(model, None)

@@ -216,3 +237,21 @@ def get_checkpoint_io(self) -> CheckpointIO:

def no_sync(self, model: nn.Module, optimizer: OptimizerWrapper) -> Iterator[None]:

assert isinstance(model, TorchDDPModel), "Model is not boosted by TorchDDPPlugin."

return model.module.no_sync()

+

+ def enable_lora(

+ self,

+ model: nn.Module,

+ pretrained_dir: Optional[str] = None,

+ lora_config: Optional[Dict] = None,

+ bnb_quantization_config: Optional[BnbQuantizationConfig] = None,

+ ) -> nn.Module:

+ from peft import PeftModel, get_peft_model

+

+ if bnb_quantization_config is not None:

+ model = quantize_model(model, bnb_quantization_config)

+

+ assert not isinstance(model, TorchDDPModel), "Lora should be enabled before boosting the model."

+ if pretrained_dir is None:

+ return get_peft_model(model, lora_config)

+ else:

+ return PeftModel.from_pretrained(model, pretrained_dir, is_trainable=True)

diff --git a/colossalai/booster/plugin/torch_fsdp_plugin.py b/colossalai/booster/plugin/torch_fsdp_plugin.py

index 0aa0caa9aafe..cd2f9e84018a 100644

--- a/colossalai/booster/plugin/torch_fsdp_plugin.py

+++ b/colossalai/booster/plugin/torch_fsdp_plugin.py

@@ -2,7 +2,7 @@

import os

import warnings

from pathlib import Path

-from typing import Callable, Iterable, Iterator, List, Optional, Tuple

+from typing import Callable, Dict, Iterable, Iterator, List, Optional, Tuple

import torch

import torch.nn as nn

@@ -318,6 +318,9 @@ def __init__(

def support_no_sync(self) -> bool:

return False

+ def support_lora(self) -> bool:

+ return False

+

def no_sync(self, model: nn.Module, optimizer: OptimizerWrapper) -> Iterator[None]:

raise NotImplementedError("Torch fsdp no_sync func not supported yet.")

@@ -361,3 +364,8 @@ def control_checkpoint_io(self) -> bool:

def get_checkpoint_io(self) -> CheckpointIO:

return TorchFSDPCheckpointIO()

+

+ def enable_lora(

+ self, model: nn.Module, pretrained_dir: Optional[str] = None, lora_config: Optional[Dict] = None

+ ) -> nn.Module:

+ raise NotImplementedError

diff --git a/colossalai/checkpoint_io/checkpoint_io_base.py b/colossalai/checkpoint_io/checkpoint_io_base.py

index 71232421586d..949ba4d44e24 100644

--- a/colossalai/checkpoint_io/checkpoint_io_base.py

+++ b/colossalai/checkpoint_io/checkpoint_io_base.py

@@ -335,3 +335,20 @@ def load_lr_scheduler(self, lr_scheduler: LRScheduler, checkpoint: str):

"""

state_dict = torch.load(checkpoint)

lr_scheduler.load_state_dict(state_dict)

+

+ # ================================================================================

+ # Abstract method for lora saving implementation.

+ # ================================================================================

+

+ @abstractmethod

+ def save_lora_as_pretrained(

+ self, model: Union[nn.Module, ModelWrapper], checkpoint: str, use_safetensors: bool = False

+ ) -> None:

+ """

+ Save the lora adapters and adapter configuration file to a pretrained checkpoint directory.

+

+ Args:

+ model (Union[nn.Module, ModelWrapper]): A model boosted by Booster.

+ checkpoint (str): Path to the checkpoint directory. It must be a local path.

+ use_safetensors (bool, optional): Whether to use safe tensors when saving. Defaults to False.

+ """

diff --git a/colossalai/checkpoint_io/general_checkpoint_io.py b/colossalai/checkpoint_io/general_checkpoint_io.py

index a652d9b4538e..b9253a56dcbb 100644

--- a/colossalai/checkpoint_io/general_checkpoint_io.py

+++ b/colossalai/checkpoint_io/general_checkpoint_io.py

@@ -228,3 +228,6 @@ def load_sharded_model(

self.__class__.__name__, "\n\t".join(error_msgs)

)

)

+

+ def save_lora_as_pretrained(self, model: nn.Module, checkpoint: str, use_safetensors: bool = False) -> None:

+ raise NotImplementedError

diff --git a/colossalai/inference/README.md b/colossalai/inference/README.md

index 287853a86383..0bdaf347d295 100644

--- a/colossalai/inference/README.md

+++ b/colossalai/inference/README.md

@@ -114,7 +114,7 @@ import colossalai

from transformers import LlamaForCausalLM, LlamaTokenizer

#launch distributed environment

-colossalai.launch_from_torch(config={})

+colossalai.launch_from_torch()

# load original model and tokenizer

model = LlamaForCausalLM.from_pretrained("/path/to/model")

@@ -165,7 +165,7 @@ Currently the stats below are calculated based on A100 (single GPU), and we calc

##### Llama

| batch_size | 8 | 16 | 32 |

-| :---------------------: | :----: | :----: | :----: |

+|:-----------------------:|:------:|:------:|:------:|

| hugging-face torch fp16 | 199.12 | 246.56 | 278.4 |

| colossal-inference | 326.4 | 582.72 | 816.64 |

@@ -174,7 +174,7 @@ Currently the stats below are calculated based on A100 (single GPU), and we calc

#### Bloom

| batch_size | 8 | 16 | 32 |

-| :---------------------: | :----: | :----: | :----: |

+|:-----------------------:|:------:|:------:|:------:|

| hugging-face torch fp16 | 189.68 | 226.66 | 249.61 |

| colossal-inference | 323.28 | 538.52 | 611.64 |

@@ -187,40 +187,40 @@ We conducted multiple benchmark tests to evaluate the performance. We compared t

#### A10 7b, fp16

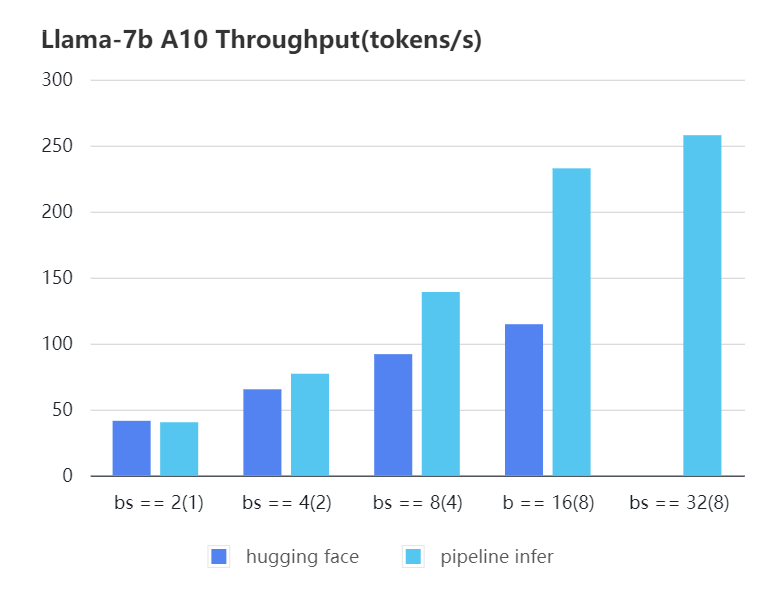

-| batch_size(micro_batch size)| 2(1) | 4(2) | 8(4) | 16(8) | 32(8) | 32(16)|

-| :-------------------------: | :---: | :---:| :---: | :---: | :---: | :---: |

-| Pipeline Inference | 40.35 | 77.10| 139.03| 232.70| 257.81| OOM |

-| Hugging Face | 41.43 | 65.30| 91.93 | 114.62| OOM | OOM |

+| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(8) | 32(16) |

+|:----------------------------:|:-----:|:-----:|:------:|:------:|:------:|:------:|

+| Pipeline Inference | 40.35 | 77.10 | 139.03 | 232.70 | 257.81 | OOM |

+| Hugging Face | 41.43 | 65.30 | 91.93 | 114.62 | OOM | OOM |

#### A10 13b, fp16

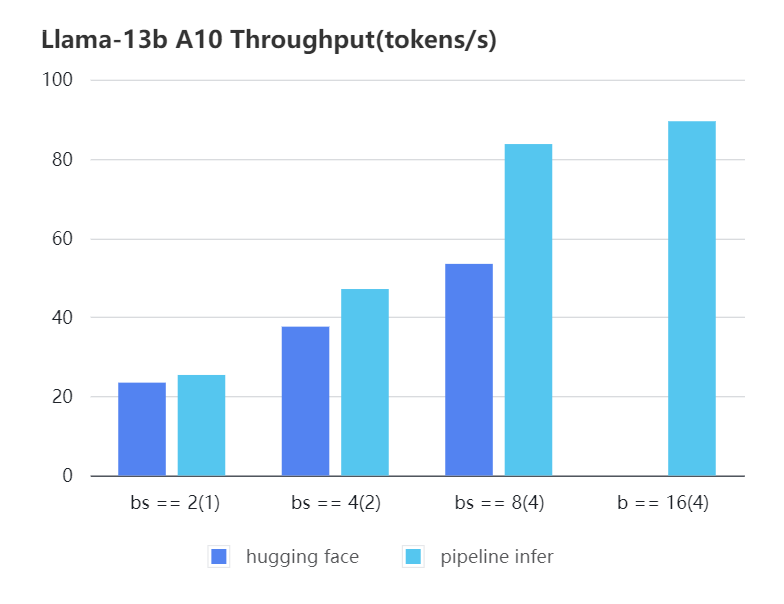

-| batch_size(micro_batch size)| 2(1) | 4(2) | 8(4) | 16(4) |

-| :---: | :---: | :---: | :---: | :---: |

-| Pipeline Inference | 25.39 | 47.09 | 83.7 | 89.46 |

-| Hugging Face | 23.48 | 37.59 | 53.44 | OOM |

+| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(4) |

+|:----------------------------:|:-----:|:-----:|:-----:|:-----:|

+| Pipeline Inference | 25.39 | 47.09 | 83.7 | 89.46 |

+| Hugging Face | 23.48 | 37.59 | 53.44 | OOM |

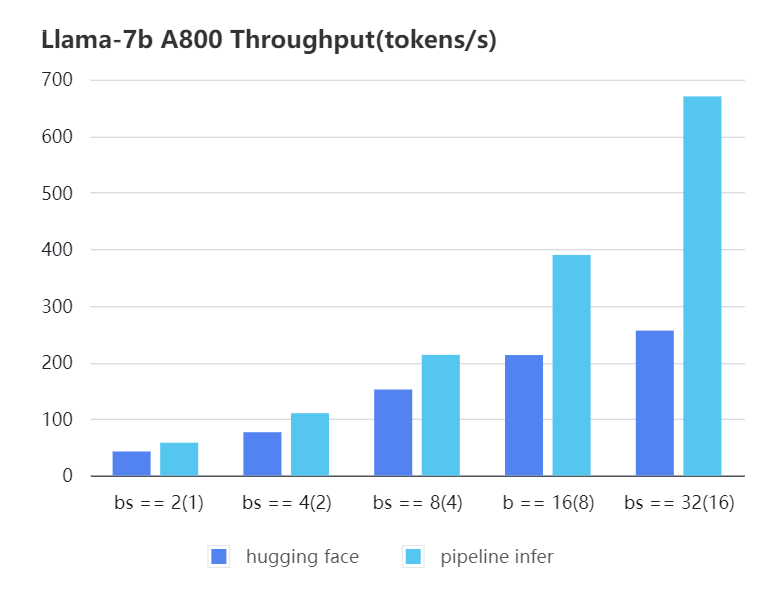

#### A800 7b, fp16

-| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(16) |

-| :---: | :---: | :---: | :---: | :---: | :---: |

-| Pipeline Inference| 57.97 | 110.13 | 213.33 | 389.86 | 670.12 |

-| Hugging Face | 42.44 | 76.5 | 151.97 | 212.88 | 256.13 |

+| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(16) |

+|:----------------------------:|:-----:|:------:|:------:|:------:|:------:|

+| Pipeline Inference | 57.97 | 110.13 | 213.33 | 389.86 | 670.12 |

+| Hugging Face | 42.44 | 76.5 | 151.97 | 212.88 | 256.13 |

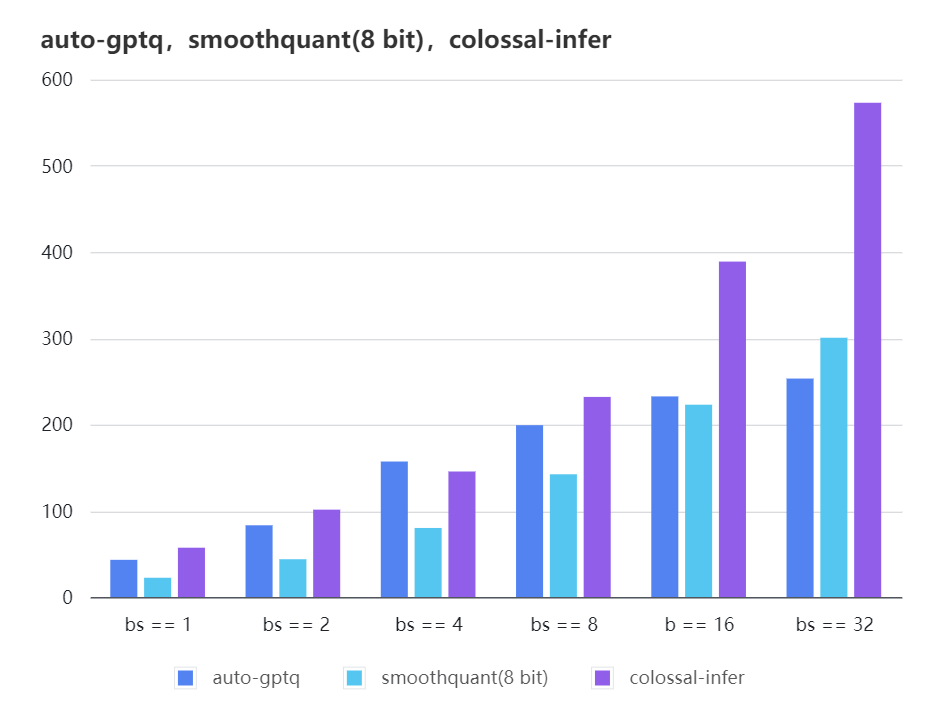

### Quantization LLama

-| batch_size | 8 | 16 | 32 |

-| :---------------------: | :----: | :----: | :----: |

-| auto-gptq | 199.20 | 232.56 | 253.26 |

-| smooth-quant | 142.28 | 222.96 | 300.59 |

-| colossal-gptq | 231.98 | 388.87 | 573.03 |

+| batch_size | 8 | 16 | 32 |

+|:-------------:|:------:|:------:|:------:|

+| auto-gptq | 199.20 | 232.56 | 253.26 |

+| smooth-quant | 142.28 | 222.96 | 300.59 |

+| colossal-gptq | 231.98 | 388.87 | 573.03 |

diff --git a/colossalai/initialize.py b/colossalai/initialize.py

index aaeaad3828f5..934555e193fc 100644

--- a/colossalai/initialize.py

+++ b/colossalai/initialize.py

@@ -2,20 +2,15 @@

# -*- encoding: utf-8 -*-

import os

-import warnings

-from pathlib import Path

-from typing import Dict, Union

import torch.distributed as dist

from colossalai.accelerator import get_accelerator

-from colossalai.context import Config

from colossalai.logging import get_dist_logger

from colossalai.utils import set_seed

def launch(

- config: Union[str, Path, Config, Dict],

rank: int,

world_size: int,

host: str,

@@ -44,8 +39,6 @@ def launch(

Raises:

Exception: Raise exception when config type is wrong

"""

- if rank == 0:

- warnings.warn("`config` is deprecated and will be removed soon.")

cur_accelerator = get_accelerator()

@@ -68,7 +61,6 @@ def launch(

def launch_from_slurm(

- config: Union[str, Path, Config, Dict],

host: str,

port: int,

backend: str = "nccl",

@@ -95,7 +87,6 @@ def launch_from_slurm(

)

launch(

- config=config,

rank=rank,

world_size=world_size,

host=host,

@@ -107,7 +98,6 @@ def launch_from_slurm(

def launch_from_openmpi(

- config: Union[str, Path, Config, Dict],

host: str,

port: int,

backend: str = "nccl",

@@ -135,7 +125,6 @@ def launch_from_openmpi(

)

launch(

- config=config,

local_rank=local_rank,

rank=rank,

world_size=world_size,

@@ -147,9 +136,7 @@ def launch_from_openmpi(

)

-def launch_from_torch(

- config: Union[str, Path, Config, Dict], backend: str = "nccl", seed: int = 1024, verbose: bool = True

-):

+def launch_from_torch(backend: str = "nccl", seed: int = 1024, verbose: bool = True):

"""A wrapper for colossalai.launch for torchrun or torch.distributed.launch by reading rank and world size

from the environment variables set by PyTorch

@@ -171,7 +158,6 @@ def launch_from_torch(

)

launch(

- config=config,

local_rank=local_rank,

rank=rank,

world_size=world_size,

diff --git a/colossalai/legacy/inference/dynamic_batching/ray_dist_init.py b/colossalai/legacy/inference/dynamic_batching/ray_dist_init.py

index 3e40bb0eeb9d..7a74fb949e8f 100644

--- a/colossalai/legacy/inference/dynamic_batching/ray_dist_init.py

+++ b/colossalai/legacy/inference/dynamic_batching/ray_dist_init.py

@@ -56,7 +56,7 @@ def setup(self, world_size, rank, port):

# initialize a ray collective group, otherwise colossalai distributed env won't be built successfully

collective.init_collective_group(world_size, rank, "nccl", "default")

# initialize and set distributed environment

- colossalai.launch(config={}, rank=rank, world_size=world_size, host="localhost", port=port, backend="nccl")

+ colossalai.launch(rank=rank, world_size=world_size, host="localhost", port=port, backend="nccl")

ray_serve_logger.info(f"Worker with rank {rank} (world size {world_size}) setting up..")

log_cuda_info("Worker.setup")

diff --git a/colossalai/legacy/inference/hybridengine/engine.py b/colossalai/legacy/inference/hybridengine/engine.py

index bc4e4fd199c0..019a678ceb02 100644

--- a/colossalai/legacy/inference/hybridengine/engine.py

+++ b/colossalai/legacy/inference/hybridengine/engine.py

@@ -42,7 +42,7 @@ class CaiInferEngine:

import colossalai

from transformers import LlamaForCausalLM, LlamaTokenizer

- colossalai.launch_from_torch(config={})

+ colossalai.launch_from_torch()

model = LlamaForCausalLM.from_pretrained("your_path_to_model")

tokenizer = LlamaTokenizer.from_pretrained("/home/lczyh/share/models/llama-7b-hf")

diff --git a/colossalai/legacy/inference/pipeline/README.md b/colossalai/legacy/inference/pipeline/README.md

index f9bb35cc4d4c..cbe96fff0404 100644

--- a/colossalai/legacy/inference/pipeline/README.md

+++ b/colossalai/legacy/inference/pipeline/README.md

@@ -36,7 +36,7 @@ from colossalai.inference.pipeline.policies import LlamaModelInferPolicy

import colossalai

from transformers import LlamaForCausalLM, LlamaTokenizer

-colossalai.launch_from_torch(config={})

+colossalai.launch_from_torch()

model = LlamaForCausalLM.from_pretrained("/path/to/model")

tokenizer = LlamaTokenizer.from_pretrained("/path/to/model")

@@ -57,27 +57,27 @@ We conducted multiple benchmark tests to evaluate the performance. We compared t

### Llama Throughput (tokens/s) | input length=1024, output length=128

#### A10 7b, fp16

-| batch_size(micro_batch size)| 2(1) | 4(2) | 8(4) | 16(8) | 32(8) | 32(16)|

-| :---: | :---: | :---: | :---: | :---: | :---: | :---:|

-| Pipeline Inference | 40.35 | 77.1 | 139.03 | 232.7 | 257.81 | OOM |

-| Hugging Face | 41.43 | 65.30 | 91.93 | 114.62 | OOM| OOM |

+| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(8) | 32(16) |

+|:----------------------------:|:-----:|:-----:|:------:|:------:|:------:|:------:|

+| Pipeline Inference | 40.35 | 77.1 | 139.03 | 232.7 | 257.81 | OOM |

+| Hugging Face | 41.43 | 65.30 | 91.93 | 114.62 | OOM | OOM |

#### A10 13b, fp16

-| batch_size(micro_batch size)| 2(1) | 4(2) | 8(4) | 16(4) |

-| :---: | :---: | :---: | :---: | :---: |

-| Pipeline Inference | 25.39 | 47.09 | 83.7 | 89.46 |

-| Hugging Face | 23.48 | 37.59 | 53.44 | OOM |

+| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(4) |

+|:----------------------------:|:-----:|:-----:|:-----:|:-----:|

+| Pipeline Inference | 25.39 | 47.09 | 83.7 | 89.46 |

+| Hugging Face | 23.48 | 37.59 | 53.44 | OOM |

#### A800 7b, fp16

-| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(16) |

-| :---: | :---: | :---: | :---: | :---: | :---: |

-| Pipeline Inference| 57.97 | 110.13 | 213.33 | 389.86 | 670.12 |

-| Hugging Face | 42.44 | 76.5 | 151.97 | 212.88 | 256.13 |

+| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(16) |

+|:----------------------------:|:-----:|:------:|:------:|:------:|:------:|

+| Pipeline Inference | 57.97 | 110.13 | 213.33 | 389.86 | 670.12 |

+| Hugging Face | 42.44 | 76.5 | 151.97 | 212.88 | 256.13 |

#### A800 13b, fp16

-| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(16) |

-| :---: | :---: | :---: | :---: | :---: | :---: |

-| Pipeline Inference | 41.78 | 94.18 | 172.67| 310.75| 470.15 |

-| Hugging Face | 36.57 | 68.4 | 105.81 | 139.51 | 166.34 |

+| batch_size(micro_batch size) | 2(1) | 4(2) | 8(4) | 16(8) | 32(16) |

+|:----------------------------:|:-----:|:-----:|:------:|:------:|:------:|

+| Pipeline Inference | 41.78 | 94.18 | 172.67 | 310.75 | 470.15 |

+| Hugging Face | 36.57 | 68.4 | 105.81 | 139.51 | 166.34 |

diff --git a/colossalai/legacy/inference/pipeline/benchmark/benchmark.py b/colossalai/legacy/inference/pipeline/benchmark/benchmark.py

index 8392d0a1e579..7bb89f4f44f8 100644

--- a/colossalai/legacy/inference/pipeline/benchmark/benchmark.py

+++ b/colossalai/legacy/inference/pipeline/benchmark/benchmark.py

@@ -12,7 +12,7 @@

GIGABYTE = 1024**3

MEGABYTE = 1024 * 1024

-colossalai.launch_from_torch(config={})

+colossalai.launch_from_torch()

def data_gen(batch_size: int = 4, seq_len: int = 512):

diff --git a/colossalai/legacy/inference/serving/ray_serve/Colossal_Inference_rayserve.py b/colossalai/legacy/inference/serving/ray_serve/Colossal_Inference_rayserve.py

index d758b467c730..37e7bae419e8 100644

--- a/colossalai/legacy/inference/serving/ray_serve/Colossal_Inference_rayserve.py

+++ b/colossalai/legacy/inference/serving/ray_serve/Colossal_Inference_rayserve.py

@@ -56,7 +56,7 @@ def setup(self, world_size, rank, port):

# initialize a ray collective group, otherwise colossalai distributed env won't be built successfully

collective.init_collective_group(world_size, rank, "nccl", "default")

# initialize and set distributed environment

- colossalai.launch(config={}, rank=rank, world_size=world_size, host="localhost", port=port, backend="nccl")

+ colossalai.launch(rank=rank, world_size=world_size, host="localhost", port=port, backend="nccl")

ray_serve_logger.info(f"Worker with rank {rank} (world size {world_size}) setting up..")

log_cuda_info("Worker.setup")

diff --git a/colossalai/legacy/inference/serving/torch_serve/Colossal_Inference_Handler.py b/colossalai/legacy/inference/serving/torch_serve/Colossal_Inference_Handler.py

index e07494b8a1a9..bcbdee951021 100644

--- a/colossalai/legacy/inference/serving/torch_serve/Colossal_Inference_Handler.py

+++ b/colossalai/legacy/inference/serving/torch_serve/Colossal_Inference_Handler.py

@@ -98,7 +98,7 @@ def initialize(self, ctx):

self.model.cuda()

self.model.eval()

- colossalai.launch(config={}, rank=rank, world_size=world_size, host=host, port=port, backend="nccl")

+ colossalai.launch(rank=rank, world_size=world_size, host=host, port=port, backend="nccl")

logger.info("Initializing TPInferEngine ...")

shard_config = ShardConfig(

enable_tensor_parallelism=True if self.tp_size > 1 else False, extra_kwargs={"inference_only": True}

diff --git a/colossalai/legacy/pipeline/rpc/utils.py b/colossalai/legacy/pipeline/rpc/utils.py

index 808de301a2a0..87060ab8a8ba 100644

--- a/colossalai/legacy/pipeline/rpc/utils.py

+++ b/colossalai/legacy/pipeline/rpc/utils.py

@@ -114,7 +114,7 @@ def run_worker(rank, args, master_func):

port = args.master_port

backend = "nccl" if device == "cuda" else "gloo"

- launch(dict(), rank, world_size, host, int(port), backend, verbose=False)

+ launch(rank, world_size, host, int(port), backend, verbose=False)

ppg.set_global_info(

rank=rank,

world_size=world_size,

diff --git a/colossalai/nn/optimizer/fused_adam.py b/colossalai/nn/optimizer/fused_adam.py

index aeb5cc91bb9e..c12551657318 100644

--- a/colossalai/nn/optimizer/fused_adam.py

+++ b/colossalai/nn/optimizer/fused_adam.py

@@ -8,7 +8,7 @@

"""

import torch

-from colossalai.utils import multi_tensor_applier

+from colossalai.utils import get_current_device, multi_tensor_applier

class FusedAdam(torch.optim.Optimizer):

@@ -75,7 +75,7 @@ def __init__(

fused_optim = FusedOptimizerLoader().load()

# Skip buffer

- self._dummy_overflow_buf = torch.cuda.IntTensor([0])

+ self._dummy_overflow_buf = torch.tensor([0], dtype=torch.int, device=get_current_device())

self.multi_tensor_adam = fused_optim.multi_tensor_adam

else:

raise RuntimeError("FusedAdam requires cuda extensions")

diff --git a/colossalai/nn/optimizer/hybrid_adam.py b/colossalai/nn/optimizer/hybrid_adam.py

index c9c1f81bfc9a..417881a0b93f 100644

--- a/colossalai/nn/optimizer/hybrid_adam.py

+++ b/colossalai/nn/optimizer/hybrid_adam.py

@@ -3,7 +3,7 @@

import torch

from colossalai.kernel.kernel_loader import FusedOptimizerLoader

-from colossalai.utils import multi_tensor_applier

+from colossalai.utils import get_current_device, multi_tensor_applier

from .cpu_adam import CPUAdam

@@ -87,7 +87,7 @@ def __init__(

if torch.cuda.is_available():

fused_optim = FusedOptimizerLoader().load()

self.gpu_adam_op = fused_optim.multi_tensor_adam

- self._dummy_overflow_buf = torch.cuda.IntTensor([0])

+ self._dummy_overflow_buf = torch.tensor([0], dtype=torch.int, device=get_current_device())

@torch.no_grad()

def step(self, closure=None, div_scale: float = -1):

diff --git a/colossalai/pipeline/p2p.py b/colossalai/pipeline/p2p.py

index 5588aa5789a9..1b55b140c0ba 100644

--- a/colossalai/pipeline/p2p.py

+++ b/colossalai/pipeline/p2p.py

@@ -45,6 +45,18 @@ def _cuda_safe_tensor_to_object(tensor: torch.Tensor, tensor_size: torch.Size) -

return unpickle

+def check_for_nccl_backend(group):

+ pg = group or c10d._get_default_group()

+ # Gate PG wrapper check on Gloo availability.

+ if c10d._GLOO_AVAILABLE:

+ # It is not expected for PG to be wrapped many times, but support it just

+ # in case

+ while isinstance(pg, c10d._ProcessGroupWrapper):

+ pg = pg.wrapped_pg

+

+ return c10d.is_nccl_available() and pg.name() == c10d.Backend.NCCL

+

+

# NOTE: FIXME: NPU DOES NOT support isend nor irecv, so broadcast is kept for future use

def _broadcast_object_list(

object_list: List[Any], src: int, group: ProcessGroup, device: Optional[Union[torch.device, str, int]] = None

diff --git a/colossalai/quantization/__init__.py b/colossalai/quantization/__init__.py

new file mode 100644

index 000000000000..e9707b479691

--- /dev/null

+++ b/colossalai/quantization/__init__.py

@@ -0,0 +1,7 @@

+from .bnb import quantize_model

+from .bnb_config import BnbQuantizationConfig

+

+__all__ = [

+ "BnbQuantizationConfig",

+ "quantize_model",

+]

diff --git a/colossalai/quantization/bnb.py b/colossalai/quantization/bnb.py

new file mode 100644

index 000000000000..fa214116afd1

--- /dev/null

+++ b/colossalai/quantization/bnb.py

@@ -0,0 +1,321 @@

+# adapted from Hugging Face accelerate/utils/bnb.py accelerate/utils/modeling.py

+

+import logging

+

+import torch

+import torch.nn as nn

+

+from .bnb_config import BnbQuantizationConfig

+

+try:

+ import bitsandbytes as bnb

+

+ IS_4BIT_BNB_AVAILABLE = bnb.__version__ >= "0.39.0"

+ IS_8BIT_BNB_AVAILABLE = bnb.__version__ >= "0.37.2"

+except ImportError:

+ pass

+

+

+logger = logging.getLogger(__name__)

+

+

+def quantize_model(

+ model: torch.nn.Module,

+ bnb_quantization_config: BnbQuantizationConfig,

+):

+ """

+ This function will quantize the input loaded model with the associated config passed in `bnb_quantization_config`.

+ We will quantize the model and put the model on the GPU.

+

+ Args:

+ model (`torch.nn.Module`):

+ Input model. The model already loaded

+ bnb_quantization_config (`BnbQuantizationConfig`):

+ The bitsandbytes quantization parameters

+

+ Returns:

+ `torch.nn.Module`: The quantized model

+ """

+

+ load_in_4bit = bnb_quantization_config.load_in_4bit

+ load_in_8bit = bnb_quantization_config.load_in_8bit

+

+ if load_in_8bit and not IS_8BIT_BNB_AVAILABLE:

+ raise ImportError(

+ "You have a version of `bitsandbytes` that is not compatible with 8bit quantization,"

+ " make sure you have the latest version of `bitsandbytes` installed."

+ )

+ if load_in_4bit and not IS_4BIT_BNB_AVAILABLE:

+ raise ValueError(

+ "You have a version of `bitsandbytes` that is not compatible with 4bit quantization,"

+ "make sure you have the latest version of `bitsandbytes` installed."

+ )

+

+ # We keep some modules such as the lm_head in their original dtype for numerical stability reasons

+ if bnb_quantization_config.skip_modules is None:

+ bnb_quantization_config.skip_modules = get_keys_to_not_convert(model)

+

+ modules_to_not_convert = bnb_quantization_config.skip_modules

+

+ # We add the modules we want to keep in full precision

+ if bnb_quantization_config.keep_in_fp32_modules is None:

+ bnb_quantization_config.keep_in_fp32_modules = []

+ keep_in_fp32_modules = bnb_quantization_config.keep_in_fp32_modules

+

+ # compatibility with peft

+ model.is_loaded_in_4bit = load_in_4bit

+ model.is_loaded_in_8bit = load_in_8bit

+

+ # assert model_device is cuda

+ model_device = next(model.parameters()).device

+

+ model = replace_with_bnb_layers(model, bnb_quantization_config, modules_to_not_convert=modules_to_not_convert)

+

+ # convert param to the right dtype

+ dtype = bnb_quantization_config.torch_dtype

+ for name, param in model.state_dict().items():

+ if any(module_to_keep_in_fp32 in name for module_to_keep_in_fp32 in keep_in_fp32_modules):

+ param.to(torch.float32)

+ if param.dtype != torch.float32:

+ name = name.replace(".weight", "").replace(".bias", "")

+ param = getattr(model, name, None)

+ if param is not None:

+ param.to(torch.float32)

+ elif torch.is_floating_point(param):

+ param.to(dtype)

+ if model_device.type == "cuda":

+ # move everything to cpu in the first place because we can't do quantization if the weights are already on cuda

+ model.cuda(torch.cuda.current_device())

+ torch.cuda.empty_cache()

+ elif torch.cuda.is_available():

+ model.to(torch.cuda.current_device())

+ logger.info(

+ f"The model device type is {model_device.type}. However, cuda is needed for quantization."

+ "We move the model to cuda."

+ )

+ else:

+ raise RuntimeError("No GPU found. A GPU is needed for quantization.")

+ return model

+

+

+def replace_with_bnb_layers(model, bnb_quantization_config, modules_to_not_convert=None, current_key_name=None):

+ """

+ A helper function to replace all `torch.nn.Linear` modules by `bnb.nn.Linear8bit` modules or by `bnb.nn.Linear4bit`

+ modules from the `bitsandbytes`library. The function will be run recursively and replace `torch.nn.Linear` modules.

+

+ Parameters:

+ model (`torch.nn.Module`):

+ Input model or `torch.nn.Module` as the function is run recursively.

+ modules_to_not_convert (`List[str]`):

+ Names of the modules to not quantize convert. In practice we keep the `lm_head` in full precision for

+ numerical stability reasons.

+ current_key_name (`List[str]`, *optional*):

+ An array to track the current key of the recursion. This is used to check whether the current key (part of

+ it) is not in the list of modules to not convert.

+ """

+

+ if modules_to_not_convert is None:

+ modules_to_not_convert = []

+

+ model, has_been_replaced = _replace_with_bnb_layers(

+ model, bnb_quantization_config, modules_to_not_convert, current_key_name

+ )

+ if not has_been_replaced:

+ logger.warning(

+ "You are loading your model in 8bit or 4bit but no linear modules were found in your model."

+ " this can happen for some architectures such as gpt2 that uses Conv1D instead of Linear layers."

+ " Please double check your model architecture, or submit an issue on github if you think this is"

+ " a bug."

+ )

+ return model

+

+

+def _replace_with_bnb_layers(

+ model,

+ bnb_quantization_config,

+ modules_to_not_convert=None,

+ current_key_name=None,

+):

+ """

+ Private method that wraps the recursion for module replacement.

+

+ Returns the converted model and a boolean that indicates if the conversion has been successfull or not.

+ """

+ # bitsandbytes will initialize CUDA on import, so it needs to be imported lazily

+

+ has_been_replaced = False

+ for name, module in model.named_children():

+ if current_key_name is None:

+ current_key_name = []

+ current_key_name.append(name)

+ if isinstance(module, nn.Linear) and name not in modules_to_not_convert:

+ # Check if the current key is not in the `modules_to_not_convert`

+ current_key_name_str = ".".join(current_key_name)

+ proceed = True

+ for key in modules_to_not_convert:

+ if (

+ (key in current_key_name_str) and (key + "." in current_key_name_str)

+ ) or key == current_key_name_str:

+ proceed = False

+ break

+ if proceed:

+ # Load bnb module with empty weight and replace ``nn.Linear` module

+ if bnb_quantization_config.load_in_8bit:

+ bnb_module = bnb.nn.Linear8bitLt(

+ module.in_features,

+ module.out_features,

+ module.bias is not None,

+ has_fp16_weights=False,

+ threshold=bnb_quantization_config.llm_int8_threshold,

+ )

+ elif bnb_quantization_config.load_in_4bit:

+ bnb_module = bnb.nn.Linear4bit(

+ module.in_features,

+ module.out_features,

+ module.bias is not None,

+ bnb_quantization_config.bnb_4bit_compute_dtype,

+ compress_statistics=bnb_quantization_config.bnb_4bit_use_double_quant,

+ quant_type=bnb_quantization_config.bnb_4bit_quant_type,

+ )

+ else:

+ raise ValueError("load_in_8bit and load_in_4bit can't be both False")

+ bnb_module.weight.data = module.weight.data

+ bnb_module.weight.skip_zero_check = True

+ if module.bias is not None:

+ bnb_module.bias.data = module.bias.data

+ bnb_module.bias.skip_zero_check = True

+ bnb_module.requires_grad_(False)

+ setattr(model, name, bnb_module)

+ has_been_replaced = True

+ if len(list(module.children())) > 0:

+ _, _has_been_replaced = _replace_with_bnb_layers(

+ module, bnb_quantization_config, modules_to_not_convert, current_key_name

+ )

+ has_been_replaced = has_been_replaced | _has_been_replaced

+ # Remove the last key for recursion

+ current_key_name.pop(-1)

+ return model, has_been_replaced

+

+

+def get_keys_to_not_convert(model):

+ r"""

+ An utility function to get the key of the module to keep in full precision if any For example for CausalLM modules

+ we may want to keep the lm_head in full precision for numerical stability reasons. For other architectures, we want

+ to keep the tied weights of the model. The function will return a list of the keys of the modules to not convert in

+ int8.

+

+ Parameters:

+ model (`torch.nn.Module`):

+ Input model

+ """

+ # Create a copy of the model

+ # with init_empty_weights():

+ # tied_model = deepcopy(model) # this has 0 cost since it is done inside `init_empty_weights` context manager`

+ tied_model = model

+

+ tied_params = find_tied_parameters(tied_model)

+ # For compatibility with Accelerate < 0.18

+ if isinstance(tied_params, dict):

+ tied_keys = sum(list(tied_params.values()), []) + list(tied_params.keys())

+ else:

+ tied_keys = sum(tied_params, [])

+ has_tied_params = len(tied_keys) > 0

+

+ # Check if it is a base model

+ is_base_model = False

+ if hasattr(model, "base_model_prefix"):

+ is_base_model = not hasattr(model, model.base_model_prefix)

+

+ # Ignore this for base models (BertModel, GPT2Model, etc.)

+ if (not has_tied_params) and is_base_model:

+ return []

+

+ # otherwise they have an attached head

+ list_modules = list(model.named_children())

+ list_last_module = [list_modules[-1][0]]

+

+ # add last module together with tied weights

+ intersection = set(list_last_module) - set(tied_keys)

+ list_untouched = list(set(tied_keys)) + list(intersection)

+

+ # remove ".weight" from the keys

+ names_to_remove = [".weight", ".bias"]

+ filtered_module_names = []

+ for name in list_untouched:

+ for name_to_remove in names_to_remove:

+ if name_to_remove in name:

+ name = name.replace(name_to_remove, "")

+ filtered_module_names.append(name)

+

+ return filtered_module_names

+

+

+def find_tied_parameters(model: nn.Module, **kwargs):

+ """

+ Find the tied parameters in a given model.

+

+

+

+ The signature accepts keyword arguments, but they are for the recursive part of this function and you should ignore

+ them.

+

+

+

+ Args:

+ model (`torch.nn.Module`): The model to inspect.

+

+ Returns:

+ List[List[str]]: A list of lists of parameter names being all tied together.

+

+ Example:

+

+ ```py

+ >>> from collections import OrderedDict

+ >>> import torch.nn as nn

+

+ >>> model = nn.Sequential(OrderedDict([("linear1", nn.Linear(4, 4)), ("linear2", nn.Linear(4, 4))]))

+ >>> model.linear2.weight = model.linear1.weight

+ >>> find_tied_parameters(model)

+ [['linear1.weight', 'linear2.weight']]

+ ```

+ """

+ # Initialize result and named_parameters before recursing.

+ named_parameters = kwargs.get("named_parameters", None)

+ prefix = kwargs.get("prefix", "")

+ result = kwargs.get("result", {})

+

+ if named_parameters is None:

+ named_parameters = {n: p for n, p in model.named_parameters()}

+ else:

+ # A tied parameter will not be in the full `named_parameters` seen above but will be in the `named_parameters`

+ # of the submodule it belongs to. So while recursing we track the names that are not in the initial

+ # `named_parameters`.

+ for name, parameter in model.named_parameters():

+ full_name = name if prefix == "" else f"{prefix}.{name}"

+ if full_name not in named_parameters:

+ # When we find one, it has to be one of the existing parameters.

+ for new_name, new_param in named_parameters.items():

+ if new_param is parameter:

+ if new_name not in result:

+ result[new_name] = []

+ result[new_name].append(full_name)

+

+ # Once we have treated direct parameters, we move to the child modules.

+ for name, child in model.named_children():

+ child_name = name if prefix == "" else f"{prefix}.{name}"

+ find_tied_parameters(child, named_parameters=named_parameters, prefix=child_name, result=result)

+

+ return FindTiedParametersResult([sorted([weight] + list(set(tied))) for weight, tied in result.items()])

+

+

+class FindTiedParametersResult(list):

+ """

+ This is a subclass of a list to handle backward compatibility for Transformers. Do not rely on the fact this is not

+ a list or on the `values` method as in the future this will be removed.

+ """

+

+ def __init__(self, *args, **kwargs):

+ super().__init__(*args, **kwargs)

+

+ def values(self):

+ return sum([x[1:] for x in self], [])

diff --git a/colossalai/quantization/bnb_config.py b/colossalai/quantization/bnb_config.py

new file mode 100644

index 000000000000..98a30211b13d

--- /dev/null

+++ b/colossalai/quantization/bnb_config.py

@@ -0,0 +1,113 @@

+# adapted from Hugging Face accelerate/utils/dataclasses.py

+

+import warnings

+from dataclasses import dataclass, field

+from typing import List

+

+import torch

+

+

+@dataclass

+class BnbQuantizationConfig:

+ """

+ A plugin to enable BitsAndBytes 4bit and 8bit quantization

+ """

+

+ load_in_8bit: bool = field(default=False, metadata={"help": "enable 8bit quantization."})

+

+ llm_int8_threshold: float = field(

+ default=6.0, metadata={"help": "value of the outliner threshold. only relevant when load_in_8bit=True"}

+ )

+

+ load_in_4bit: bool = field(default=False, metadata={"help": "enable 4bit quantization."})

+

+ bnb_4bit_quant_type: str = field(

+ default="fp4",

+ metadata={

+ "help": "set the quantization data type in the `bnb.nn.Linear4Bit` layers. Options are {'fp4','np4'}."

+ },

+ )

+

+ bnb_4bit_use_double_quant: bool = field(

+ default=False,

+ metadata={

+ "help": "enable nested quantization where the quantization constants from the first quantization are quantized again."

+ },

+ )

+

+ bnb_4bit_compute_dtype: bool = field(

+ default="fp16",

+ metadata={

+ "help": "This sets the computational type which might be different than the input time. For example, inputs might be "

+ "fp32, but computation can be set to bf16 for speedups. Options are {'fp32','fp16','bf16'}."

+ },

+ )

+

+ torch_dtype: torch.dtype = field(

+ default=None,

+ metadata={

+ "help": "this sets the dtype of the remaining non quantized layers. `bitsandbytes` library suggests to set the value"

+ "to `torch.float16` for 8 bit model and use the same dtype as the compute dtype for 4 bit model "

+ },

+ )

+

+ skip_modules: List[str] = field(

+ default=None,

+ metadata={

+ "help": "an explicit list of the modules that we don't quantize. The dtype of these modules will be `torch_dtype`."

+ },

+ )

+

+ keep_in_fp32_modules: List[str] = field(

+ default=None,

+ metadata={"help": "an explicit list of the modules that we don't quantize. We keep them in `torch.float32`."},

+ )

+

+ def __post_init__(self):

+ if isinstance(self.bnb_4bit_compute_dtype, str):

+ if self.bnb_4bit_compute_dtype == "fp32":

+ self.bnb_4bit_compute_dtype = torch.float32

+ elif self.bnb_4bit_compute_dtype == "fp16":

+ self.bnb_4bit_compute_dtype = torch.float16

+ elif self.bnb_4bit_compute_dtype == "bf16":

+ self.bnb_4bit_compute_dtype = torch.bfloat16

+ else:

+ raise ValueError(

+ f"bnb_4bit_compute_dtype must be in ['fp32','fp16','bf16'] but found {self.bnb_4bit_compute_dtype}"

+ )

+ elif not isinstance(self.bnb_4bit_compute_dtype, torch.dtype):

+ raise ValueError("bnb_4bit_compute_dtype must be a string or a torch.dtype")

+

+ if self.skip_modules is not None and not isinstance(self.skip_modules, list):

+ raise ValueError("skip_modules must be a list of strings")

+

+ if self.keep_in_fp32_modules is not None and not isinstance(self.keep_in_fp32_modules, list):

+ raise ValueError("keep_in_fp_32_modules must be a list of strings")

+

+ if self.load_in_4bit:

+ self.target_dtype = "int4"

+

+ if self.load_in_8bit:

+ self.target_dtype = torch.int8

+

+ if self.load_in_4bit and self.llm_int8_threshold != 6.0:

+ warnings.warn("llm_int8_threshold can only be used for model loaded in 8bit")

+

+ if isinstance(self.torch_dtype, str):

+ if self.torch_dtype == "fp32":

+ self.torch_dtype = torch.float32

+ elif self.torch_dtype == "fp16":

+ self.torch_dtype = torch.float16

+ elif self.torch_dtype == "bf16":

+ self.torch_dtype = torch.bfloat16

+ else:

+ raise ValueError(f"torch_dtype must be in ['fp32','fp16','bf16'] but found {self.torch_dtype}")

+

+ if self.load_in_8bit and self.torch_dtype is None:

+ self.torch_dtype = torch.float16

+

+ if self.load_in_4bit and self.torch_dtype is None:

+ self.torch_dtype = self.bnb_4bit_compute_dtype

+

+ if not isinstance(self.torch_dtype, torch.dtype):

+ raise ValueError("torch_dtype must be a torch.dtype")

diff --git a/colossalai/shardformer/README.md b/colossalai/shardformer/README.md

index d45421868321..47ef98ccf7e8 100644

--- a/colossalai/shardformer/README.md

+++ b/colossalai/shardformer/README.md

@@ -38,7 +38,7 @@ from transformers import BertForMaskedLM

import colossalai

# launch colossalai

-colossalai.launch_from_torch(config={})

+colossalai.launch_from_torch()

# create model

config = BertConfig.from_pretrained('bert-base-uncased')

diff --git a/colossalai/shardformer/examples/convergence_benchmark.py b/colossalai/shardformer/examples/convergence_benchmark.py

index b03e6201dce8..4caf61eb4ec4 100644

--- a/colossalai/shardformer/examples/convergence_benchmark.py

+++ b/colossalai/shardformer/examples/convergence_benchmark.py

@@ -28,7 +28,7 @@ def _to(t: Any):

def train(args):

- colossalai.launch_from_torch(config={}, seed=42)

+ colossalai.launch_from_torch(seed=42)

coordinator = DistCoordinator()

# prepare for data and dataset

diff --git a/colossalai/shardformer/examples/performance_benchmark.py b/colossalai/shardformer/examples/performance_benchmark.py

index 81215dcdf5d4..cce8b6f3a40f 100644

--- a/colossalai/shardformer/examples/performance_benchmark.py

+++ b/colossalai/shardformer/examples/performance_benchmark.py

@@ -1,6 +1,7 @@

"""

Shardformer Benchmark

"""

+

import torch

import torch.distributed as dist

import transformers

@@ -84,5 +85,5 @@ def bench_shardformer(BATCH, N_CTX, provider, model_func, dtype=torch.float32, d

# start benchmark, command:

# torchrun --standalone --nproc_per_node=2 performance_benchmark.py

if __name__ == "__main__":

- colossalai.launch_from_torch({})

+ colossalai.launch_from_torch()

bench_shardformer.run(save_path=".", print_data=dist.get_rank() == 0)

diff --git a/colossalai/shardformer/modeling/bert.py b/colossalai/shardformer/modeling/bert.py

index 0838fcee682e..e7679f0ec846 100644

--- a/colossalai/shardformer/modeling/bert.py

+++ b/colossalai/shardformer/modeling/bert.py

@@ -1287,3 +1287,16 @@ def forward(

)

return forward

+

+

+def get_jit_fused_bert_intermediate_forward():

+ from transformers.models.bert.modeling_bert import BertIntermediate

+

+ from colossalai.kernel.jit.bias_gelu import GeLUFunction as JitGeLUFunction

+

+ def forward(self: BertIntermediate, hidden_states: torch.Tensor) -> torch.Tensor:

+ hidden_states, bias = self.dense(hidden_states)

+ hidden_states = JitGeLUFunction.apply(hidden_states, bias)

+ return hidden_states

+

+ return forward

diff --git a/colossalai/shardformer/modeling/blip2.py b/colossalai/shardformer/modeling/blip2.py

index bd84c87c667d..96e8a9d0c127 100644

--- a/colossalai/shardformer/modeling/blip2.py

+++ b/colossalai/shardformer/modeling/blip2.py

@@ -129,3 +129,17 @@ def forward(

return hidden_states

return forward

+

+

+def get_jit_fused_blip2_mlp_forward():

+ from transformers.models.blip_2.modeling_blip_2 import Blip2MLP

+

+ from colossalai.kernel.jit.bias_gelu import GeLUFunction as JitGeLUFunction

+

+ def forward(self: Blip2MLP, hidden_states: torch.Tensor) -> torch.Tensor:

+ hidden_states, bias = self.fc1(hidden_states)

+ hidden_states = JitGeLUFunction.apply(hidden_states, bias)

+ hidden_states = self.fc2(hidden_states)

+ return hidden_states

+

+ return forward

diff --git a/colossalai/shardformer/modeling/gpt2.py b/colossalai/shardformer/modeling/gpt2.py

index 17acdf7fcbba..bfa995645ef1 100644

--- a/colossalai/shardformer/modeling/gpt2.py

+++ b/colossalai/shardformer/modeling/gpt2.py

@@ -1310,3 +1310,18 @@ def forward(

)

return forward

+

+

+def get_jit_fused_gpt2_mlp_forward():

+ from transformers.models.gpt2.modeling_gpt2 import GPT2MLP

+