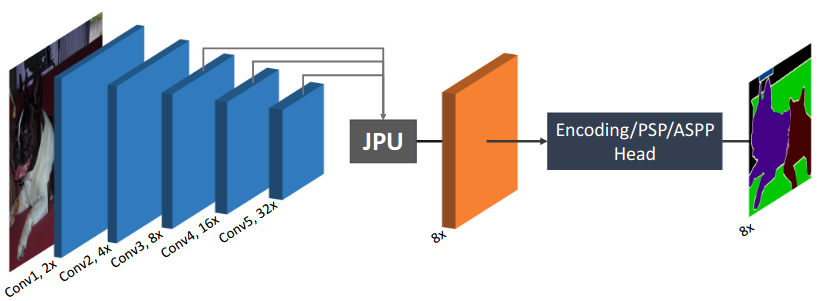

Modern approaches for semantic segmentation usually employ dilated convolutions in the backbone to extract high-resolution feature maps, which brings heavy computation complexity and memory footprint. To replace the time and memory consuming dilated convolutions, we propose a novel joint upsampling module named Joint Pyramid Upsampling (JPU) by formulating the task of extracting high-resolution feature maps into a joint upsampling problem. With the proposed JPU, our method reduces the computation complexity by more than three times without performance loss. Experiments show that JPU is superior to other upsampling modules, which can be plugged into many existing approaches to reduce computation complexity and improve performance. By replacing dilated convolutions with the proposed JPU module, our method achieves the state-of-the-art performance in Pascal Context dataset (mIoU of 53.13%) and ADE20K dataset (final score of 0.5584) while running 3 times faster.

FastFCN (ArXiv'2019)

@article{wu2019fastfcn,

title={Fastfcn: Rethinking dilated convolution in the backbone for semantic segmentation},

author={Wu, Huikai and Zhang, Junge and Huang, Kaiqi and Liang, Kongming and Yu, Yizhou},

journal={arXiv preprint arXiv:1903.11816},

year={2019}

}| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

|---|---|---|---|---|---|---|---|---|---|

| DeepLabV3 + JPU | R-50-D32 | 512x1024 | 80000 | 5.67 | 2.64 | 79.12 | 80.58 | config | model | log |

| DeepLabV3 + JPU (4x4) | R-50-D32 | 512x1024 | 80000 | 9.79 | - | 79.52 | 80.91 | config | model | log |

| PSPNet + JPU | R-50-D32 | 512x1024 | 80000 | 5.67 | 4.40 | 79.26 | 80.86 | config | model | log |

| PSPNet + JPU (4x4) | R-50-D32 | 512x1024 | 80000 | 9.94 | - | 78.76 | 80.03 | config | model | log |

| EncNet + JPU | R-50-D32 | 512x1024 | 80000 | 8.15 | 4.77 | 77.97 | 79.92 | config | model | log |

| EncNet + JPU (4x4) | R-50-D32 | 512x1024 | 80000 | 15.45 | - | 78.6 | 80.25 | config | model | log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

|---|---|---|---|---|---|---|---|---|---|

| DeepLabV3 + JPU | R-50-D32 | 512x1024 | 80000 | 8.46 | 12.06 | 41.88 | 42.91 | config | model | log |

| DeepLabV3 + JPU | R-50-D32 | 512x1024 | 160000 | - | - | 43.58 | 44.92 | config | model | log |

| PSPNet + JPU | R-50-D32 | 512x1024 | 80000 | 8.02 | 19.21 | 41.40 | 42.12 | config | model | log |

| PSPNet + JPU | R-50-D32 | 512x1024 | 160000 | - | - | 42.63 | 43.71 | config | model | log |

| EncNet + JPU | R-50-D32 | 512x1024 | 80000 | 9.67 | 17.23 | 40.88 | 42.36 | config | model | log |

| EncNet + JPU | R-50-D32 | 512x1024 | 160000 | - | - | 42.50 | 44.21 | config | model | log |

Note:

4x4means 4 GPUs with 4 samples per GPU in training, default setting is 4 GPUs with 2 samples per GPU in training.- Results of DeepLabV3 (mIoU: 79.32), PSPNet (mIoU: 78.55) and ENCNet (mIoU: 77.94) can be found in each original repository.