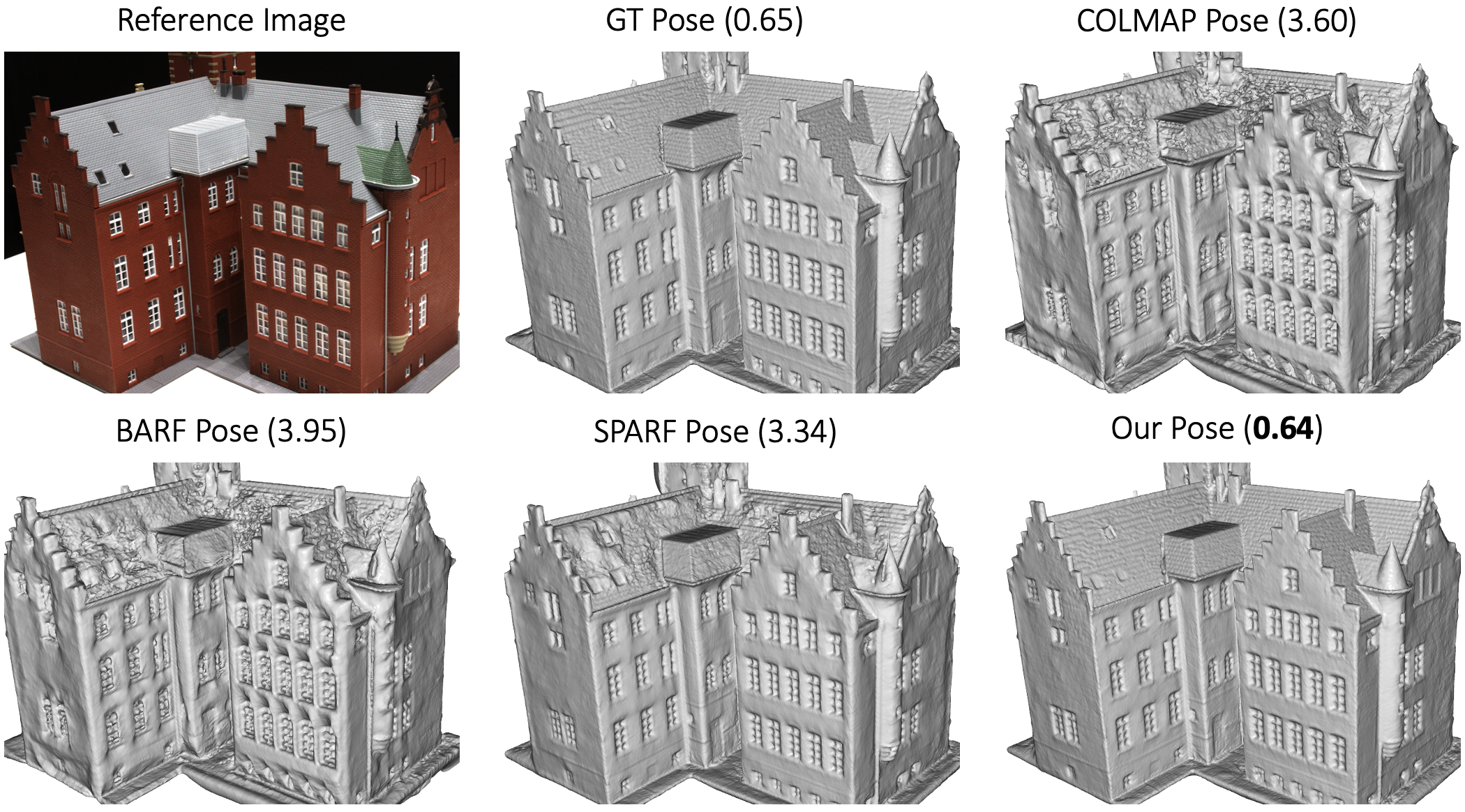

We present PoRF (Pose Residual Field) for the joint optimisation of neural surface reconstruction and camera poses. It uses an MLP to refine the camera poses of all images in the dataset, instead of optimising pose parameters for each image independently. The figure above shows that our method takes COLMAP poses as input and that the refined poses give 3D surface reconstruction comparable to that obtained with ground-truth (GT) poses. Reconstructions are compared by Chamfer distance (mm).
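To make the idea of one MLP shared across all images concrete, here is a minimal forward-pass sketch in NumPy. It is not the code in this repository: the class name `PoseResidualMLP`, the input layout (normalised frame index plus a 6-DoF initial pose), the hidden size, the small output scale, and the additive update in the 6-vector parameterisation are all assumptions made for illustration.

```python
import numpy as np

class PoseResidualMLP:
    """Hypothetical shared MLP: (frame index, initial 6-DoF pose) -> 6-DoF residual."""

    def __init__(self, hidden=64, scale=1e-2, seed=0):
        rng = np.random.default_rng(seed)
        # One set of weights is used for every image in the dataset.
        self.w1 = rng.normal(0.0, 0.1, size=(7, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 0.1, size=(hidden, 6))
        self.b2 = np.zeros(6)
        self.scale = scale  # assumed small factor that keeps residuals small

    def __call__(self, frame_ids, init_poses):
        # Concatenate the frame index with the initial pose: shape (N, 7).
        x = np.concatenate([frame_ids[:, None], init_poses], axis=1)
        h = np.maximum(x @ self.w1 + self.b1, 0.0)  # ReLU hidden layer
        return self.scale * (h @ self.w2 + self.b2)

num_images = 5
init_poses = np.zeros((num_images, 6))         # placeholder for e.g. COLMAP poses
frame_ids = np.linspace(0.0, 1.0, num_images)  # normalised frame indices

mlp = PoseResidualMLP()
# Simplification: the residual is added directly to the 6-vector pose.
refined_poses = init_poses + mlp(frame_ids, init_poses)
```

Because every image passes through the same weights, a gradient from any one view updates the parameters that produce the residuals of all views, in contrast to keeping an independent pose parameter per image.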

Project page | Paper | Data

This is the official repo for the implementation of PoRF: Pose Residual Field for Accurate Neural Surface Reconstruction.

The data is organized as follows:

<case_name>

    |-- cameras.npz            # GT camera parameters
    |-- cameras_colmap.npz     # COLMAP camera parameters
    |-- image
        |-- 000.png            # target image for each view
        |-- 001.png
        ...
    |-- colmap_matches
        |-- 000000.npz         # matches exported from COLMAP
        |-- 000001.npz
        ...

Here, cameras.npz follows the IDR data format, where world_mat_xx denotes the world-to-image projection matrix and scale_mat_xx denotes the normalization matrix.
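As a rough illustration of how these two matrices can be used together, the sketch below loads one view from a cameras.npz file and projects points to pixel coordinates. It assumes the keys carry an integer view index (for example `world_mat_0`), that both matrices are 4x4, and that multiplying `world_mat` by `scale_mat` maps points from the normalized space to the image, as is common in IDR-style loaders. The helper names `load_projection` and `project` are hypothetical and are not part of this repository.

```python
import numpy as np

def load_projection(npz_path, idx):
    """Return a 3x4 matrix mapping normalized-space points to pixels for view idx."""
    with np.load(npz_path) as cams:
        # Assumed key naming: world_mat_<idx> and scale_mat_<idx>, both 4x4.
        world_mat = cams[f"world_mat_{idx}"].astype(np.float64)
        scale_mat = cams[f"scale_mat_{idx}"].astype(np.float64)
    # Undo the normalization first, then apply the world-to-image projection.
    return (world_mat @ scale_mat)[:3, :4]

def project(P, points):
    """Project an (N, 3) array of points with a 3x4 matrix P to (N, 2) pixels."""
    ones = np.ones((len(points), 1))
    pts_h = np.concatenate([points, ones], axis=1)  # homogeneous coordinates
    uvw = pts_h @ P.T
    return uvw[:, :2] / uvw[:, 2:3]                 # perspective divide
```

A call such as `project(load_projection("cameras.npz", 0), points)` would then give the pixel locations of `points` in the first view, provided the points lie in front of the camera.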

Clone this repository and set up the environment:

git clone https://github.com/ActiveVisionLab/porf.git

cd porf

conda create -n porf python=3.9

conda activate porf

conda install pytorch==1.13.1 torchvision==0.14.1 pytorch-cuda=11.7 -c pytorch -c nvidia

pip install -r requirements.txt

- Example (you need to change the paths in the script)

bash scripts/train_sift_dtu.sh

- Training

python train.py --mode train --conf confs/dtu_sift_porf.conf --case <case_name>

After training, a mesh should be found in exp/<case_name>/<exp_name>/meshes/<iter_steps>.ply. Note that this mesh is intended only for debugging the first-stage pose optimisation. If you need a high-quality mesh as shown in the paper, export the refined camera poses and use them to train a Voxurf model.
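Since meshes are written under a per-iteration file name, a small helper can pick the most recent one for inspection. This is a sketch, not part of the repository: the function name `latest_mesh` is hypothetical, and it assumes each mesh file name is the iteration count written as digits, followed by `.ply`.

```python
from pathlib import Path

def latest_mesh(exp_dir):
    """Return the .ply file with the highest iteration number, or None if absent."""
    meshes_dir = Path(exp_dir) / "meshes"
    # Keep only files whose stem is purely numeric, e.g. 00050000.ply.
    candidates = [p for p in meshes_dir.glob("*.ply") if p.stem.isdigit()]
    if not candidates:
        return None
    return max(candidates, key=lambda p: int(p.stem))
```

Here `exp_dir` would be the experiment folder, i.e. the `exp/<case_name>/<exp_name>` directory mentioned above.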

- Export Refined Camera Pose (change the folder path)

python export_camera_file.py

Cite below if you find this repository helpful to your project:

    @inproceedings{bian2024porf,
      title={PoRF: Pose Residual Field for Accurate Neural Surface Reconstruction},
      author={Jia-Wang Bian and Wenjing Bian and Victor Adrian Prisacariu and Philip Torr},
      booktitle={ICLR},
      year={2024}
    }

Some code snippets are borrowed from NeuS. Thanks to its authors for this great project.