glibc is optimized for memory allocations microbenchmarks #42566

Comments

|

Edit: This comment is probably not worth your time to read.

I'm on a Mac (11.4), and for this comment I'm using the standard Julia REPL from 1.6.1 in a standard Mac terminal. I'm not sure what

#fresh session

#95 MB

#your function declarations

#116 MB

iterated(1)

#1.81 GB

GC.gc()

#631 MB

iterated(10)

#1.47 GB

GC.gc()

#648 MB

And in another way:

#Fresh julia session

#95 MB

function inner_df(Nrow,Ncol) # Create small dataframe

[rand(Nrow) for i in 1:Ncol]

end

function outer_df(N, Nrow, Ncol) #Stack small dataframes

df=[Vector{Float64}(undef, 0) for i in 1:Ncol]

for i = 1:N

df2 = inner_df(Nrow,Ncol)

for j in 1:Ncol

append!(df[j],df2[j])

end

end

return df

end

function iterated(Niter, N, Nrow, Ncol) # Create a large DataFrame many times.

for i =1:Niter

println(i)

@time pan = outer_df(N, Nrow, Ncol)

end

nothing

end

#128 MB

iterated(1,1,1,1); GC.gc()

#134 MB

iterated(10,100_000,76,11); GC.gc()

#621 MB

iterated(10,100_000,76,11); GC.gc()

#621 MB

And in another way:

#Fresh julia session

#95 MB

function inner_df(Nrow,Ncol) # Create small dataframe

[rand(Nrow) for i in 1:Ncol]

end

function outer_df(N, Nrow, Ncol) #Stack small dataframes

df=[Vector{Float64}(undef, 0) for i in 1:Ncol]

for i = 1:N

df2 = inner_df(Nrow,Ncol)

for j in 1:Ncol

append!(df[j],df2[j])

end

end

return df

end

#125 MB

outer_df(1_000, 67, 21); GC.gc()

#149 MB

outer_df(10_000, 67, 21); GC.gc()

#160 MB

outer_df(100_000, 67, 21); GC.gc()

#465 MB

outer_df(300_000, 67, 21); GC.gc()

#821 MB

outer_df(300_000, 67, 21); GC.gc()

#822 MB

1+1; GC.gc()

#822 MB

I conclude that I hope to look into this and try to simplify the MWE more later today. |

|

Well, at least it's great to see that I'm not the only one with this issue. Cross-referencing to the issue I created on DataFrames.jl (JuliaData/DataFrames.jl#2902). On my end I have checked using Julia 1.5.0, 1.5.3, and 1.6.1, on two different computers (one with Ubuntu 18.12 and one with a 20.04 Ubuntu clone, Pop!_OS). Edited to add: @LilithHafner the |

|

I've reproduced this issue. Here is a MWE:

julia> [zeros(10) for _ in 1:10^7]; GC.gc()

Or

julia> f(a,b) = [zeros(a) for _ in 1:b]

f (generic function with 1 method)

julia> f(10, 10^7); GC.gc()

Both of which leave Julia using 1.6 GB of memory after garbage collection. |
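To put numbers on an observation like this from inside the session, one option is to compare what the GC reports as live with what the OS reports as resident. The following is only a minimal sketch and was not part of the original comment: `rss_bytes` and `after_gc` are hypothetical helper names, `rss_bytes` reads `/proc/self/statm` and therefore only works on Linux (it returns `nothing` elsewhere), and `Base.gc_live_bytes` is an unexported Base function, so relying on it across Julia versions is an assumption.

```julia
# Hypothetical helper: current resident set size in bytes (Linux only).
# The second field of /proc/self/statm is the number of resident pages.
function rss_bytes()
    isfile("/proc/self/statm") || return nothing
    pages = parse(Int, split(read("/proc/self/statm", String))[2])
    return pages * Int(ccall(:getpagesize, Cint, ()))
end

# Hypothetical helper: run `f`, drop its result, force a full collection,
# then report the GC's view and the OS's view side by side.
function after_gc(f)
    f()
    GC.gc()
    return (live = Base.gc_live_bytes(), rss = rss_bytes())
end

# A smaller variant of the MWE (10^6 instead of 10^7 inner vectors).
report = after_gc(() -> [zeros(10) for _ in 1:10^6])
println("GC live bytes: ", report.live)
println("resident set:  ", something(report.rss, "unavailable on this OS"))
```

A large gap between the two numbers would be consistent with memory that Julia has freed internally but that has not been returned to the operating system.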

|

I think the problem happens when Julia needs many pointers at the same time:

function re_use(n)

x = Vector{Vector{Int}}(undef, n)

el = Int[]

for i in 1:n

x[i] = el

end

end

function many_pointers(n)

x = Vector{Vector{Int}}(undef, n)

el = Int[]

for i in 1:n

y = Int[]

x[i] = el

end

end

function many_pointers_same_time(n)

x = Vector{Vector{Int}}(undef, n)

for i in 1:n

x[i] = Int[]

end

end

@time re_use(10^7)

# 0.064684 seconds (3 allocations: 76.294 MiB, 8.75% gc time)

@time many_pointers(10^7)

# 0.614698 seconds (10.00 M allocations: 839.234 MiB, 29.12% gc time)

@time many_pointers_same_time(10^7)

# 1.559850 seconds (10.00 M allocations: 839.233 MiB, 39.60% gc time)

#Only now does GC.gc() fail to reduce memory use |

|

What I have been able to reproduce is not a leak per se, because repeated actions do not increase memory usage, but it is nonetheless large memory usage where I think there should be much less. Has anyone been able to reproduce a leak which involves a linear growth in unreleased memory with respect to iterations of a supposedly pure function? |
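One way to check the distinction drawn here (high but stable usage versus usage that grows with every call) is to sample memory after each iteration. The sketch below is an illustration under assumptions, not code from the thread: `probe_growth` and `workload` are hypothetical names, `Base.gc_live_bytes` is an unexported Base function, and `Sys.maxrss` reports the peak resident set size, so it can only show whether the peak keeps rising, not whether memory was later returned.

```julia
# Hypothetical helper: call `f` repeatedly, force a full GC after each call,
# and record the GC-tracked live bytes and the process's peak RSS.
function probe_growth(f, iterations::Integer)
    live = Int[]
    peak = Int[]
    for _ in 1:iterations
        f()
        GC.gc()
        push!(live, Int(Base.gc_live_bytes()))
        push!(peak, Int(Sys.maxrss()))
    end
    return (live = live, maxrss = peak)
end

# Stand-in workload: allocate many small vectors and discard them.
workload() = (x = [zeros(10) for _ in 1:10^5]; nothing)

samples = probe_growth(workload, 5)
println("live bytes after each iteration: ", samples.live)
println("peak RSS after each iteration:   ", samples.maxrss)
```

If the peak RSS levels off after the first iteration or two, that matches the "not a leak per se" description; a peak that climbs roughly linearly with the iteration count would point to a real leak.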

|

What happens if you use |

|

Most likely, this is not really a "leak" since, as you can see, we are still able to reuse the memory on future runs. It seems to be about when memory is released to the system. Might be similar to #30653, where we needed to call Line 3185 in 1389c2f

|

|

Yes! It does seem like a memory release to system problem.

Does not fix it. @JeffBezanson is it possible to call |

|

If Perhaps we are allocating many times from the heap, in a way that requires a lot of space (i.e. not In the example of, say |

|

FYI: I tested this on Windows 10 and there it's not a problem. |

|

Okay, sounds like this is a glibc issue then. |

|

Looks like this one, perhaps: https://sourceware.org/bugzilla/show_bug.cgi?id=27103 Perhaps it isn't actually accepted as a bug? I don't know how this community works: |

|

@vtjnash |

|

Because it is not a Julia bug, but seems to be an intentional feature of the linux/gnu OS, and it makes it harder to track real bugs. |

|

Duplicate of #128 |

|

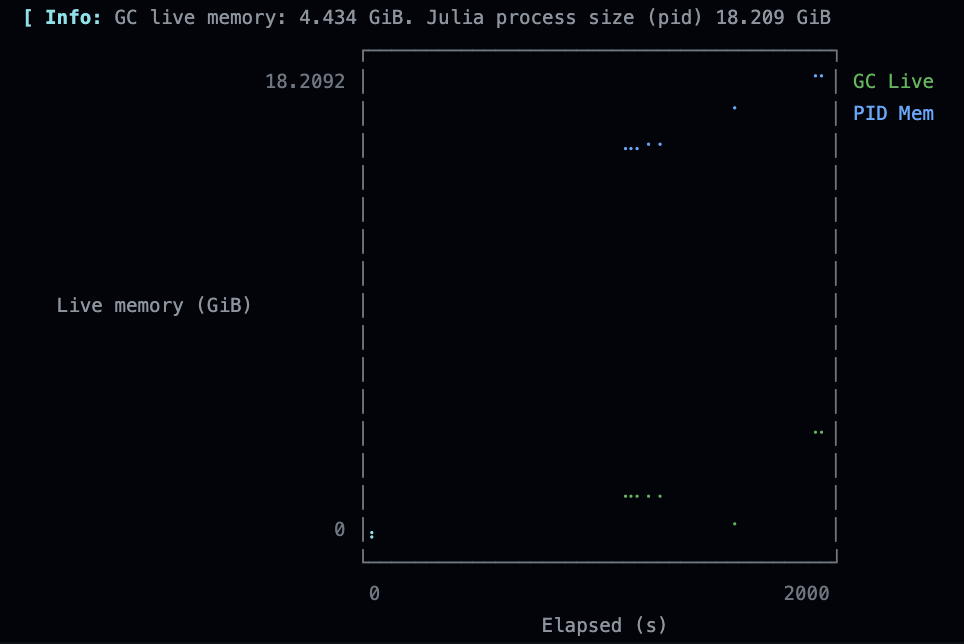

I've been seeing a long running ubuntu x86 CI run getting OOM killed and it appears to be related to this. All the tests are enclosed in Periodically monitoring I see the following profile over time (this is just before the run was killed) I tried the heap snapshot PR rebased on master, but it's currently segfaulting |

|

Ok, I got the heap snapshot to work, and this is with (ignore the row highlights)

The array is present in the Also, given |

In the #30653 (comment) they call it as |

|

I modified the source to call that for every GC.gc already. But it was just pointed out to me that macOS doesn't use glibc, so perhaps I should have done something similar but for macOS. |

|

There is no malloc_trim in the macOS libc, at least according to man and Google; apparently it's a GNU-specific extension. |
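For readers who want to try `malloc_trim` from a running session without rebuilding Julia, a guarded call through `ccall` is one possibility. This is a sketch under assumptions rather than anything posted in the thread: `try_malloc_trim` is a hypothetical helper, it assumes glibc is loadable under the soname `libc.so.6`, and it returns `nothing` wherever the symbol cannot be found. Other comments in this thread report that trimming did not help in their case, so treat it as an experiment, not a fix.

```julia
using Libdl

# Hypothetical helper: call glibc's `int malloc_trim(size_t pad)` with pad = 0.
# Returns the C return value (1 if memory was released to the system,
# 0 otherwise), or `nothing` if the symbol is not available, for example on
# macOS, Windows, or a Linux system with a non-glibc libc.
function try_malloc_trim()
    Sys.islinux() || return nothing
    # Assumption: glibc is installed under its usual soname.
    handle = Libdl.dlopen("libc.so.6"; throw_error = false)
    handle === nothing && return nothing
    fptr = Libdl.dlsym(handle, :malloc_trim; throw_error = false)
    fptr === nothing && return nothing
    return ccall(fptr, Cint, (Csize_t,), 0)
end

GC.gc()                      # let Julia free what it can first
result = try_malloc_trim()
println(result === nothing ? "malloc_trim not available here" :
                             "malloc_trim returned $result")
```

Looking the symbol up with `dlsym` instead of naming it directly in `ccall` keeps the snippet from erroring on platforms that lack the function.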

|

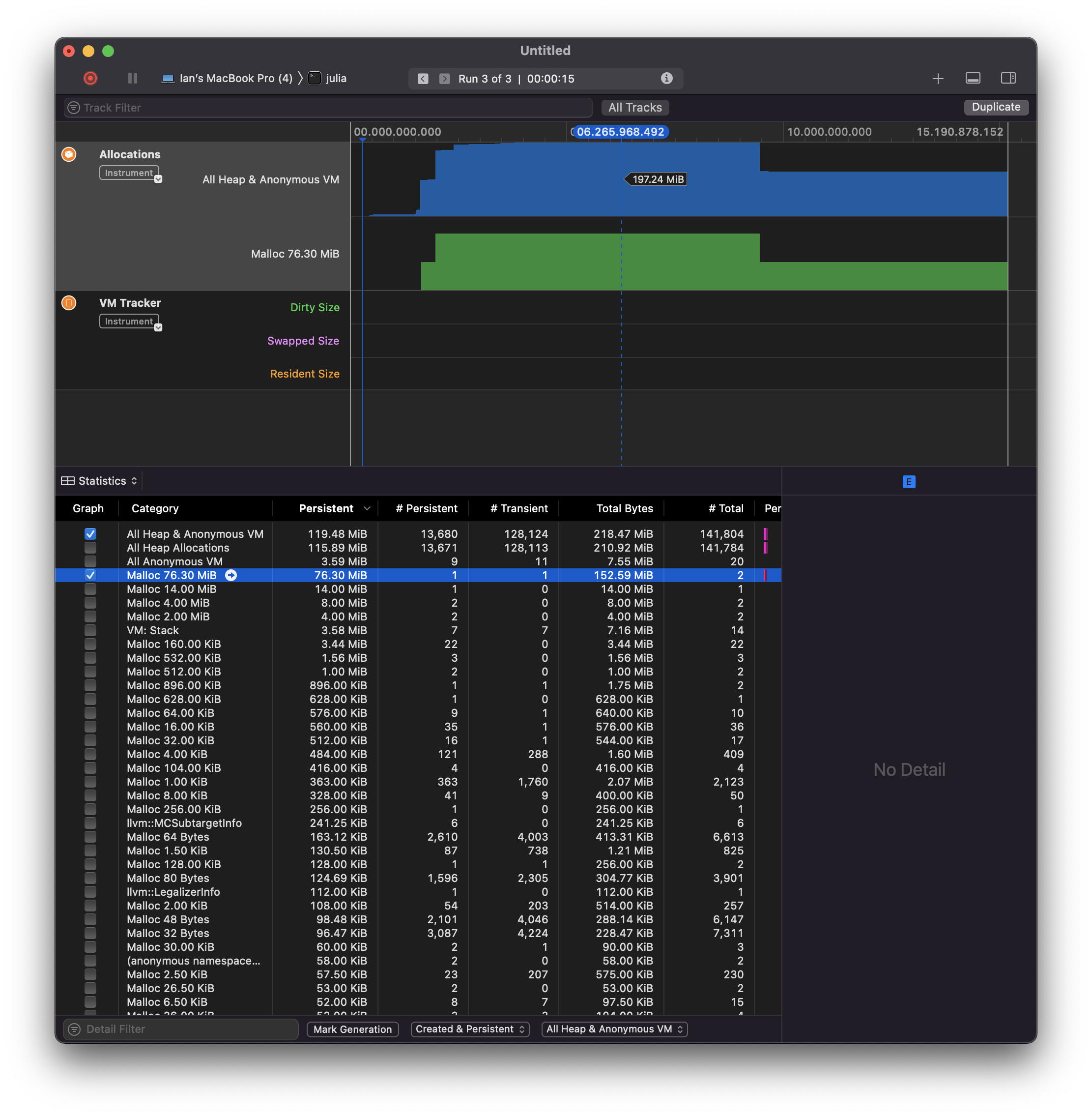

Another datapoint. This is with macOS's Instruments running It appears to only know about a few hundred MiB, but the process rises to 1.5 GB and stays there. Given macOS, this may either be a different issue, or the same issue but not glibc related. |

|

On Arch Linux, with 1.7.1 I get (based on your earlier examples): So it could be a macOS-specific thing, as I don't see the large process size at exit on Linux? |

|

#42566 (comment) was on ubuntu (just clarified in the comment) |

Okay, but I guess that was with much more extensive code than the simple array allocation above? And possibly multi-threaded? Seems good to reduce simple test cases, hence what I observed on Linux compared to your MacOS. |

|

I was doing some reading, and apparently macOS is very lazy about returning memory to the kernel: it does so if memory pressure increases, but only then. On an ARM Ubuntu server the original OP sometimes shows that the OS hasn't requested the memory back, while other times it does get it back. I imagine it's different behaviour between the libcs. |

|

Ok. Adding |

|

Just cross-referencing, in my hunt for freeing more memory I learned the |

|

This issue might be related to the behavior I reported on the discourse here: https://discourse.julialang.org/t/determining-size-of-dataframe-for-memory-management/85277/36 MWE is from reading in and sub-sampling some big CSV files available from the Census: At the end of this I have like 10GB of memory usage by the Julia process but varinfo() only knows about ~100MB of variables. Eventually systemd-oomd kills my session. |
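The gap described here, between what Julia's variables account for and what the process occupies, can be made concrete with a few Base calls. The sketch below is illustrative only and does not reproduce the Census workload: `data` is a hypothetical stand-in for the sub-sampled table, `Base.summarysize` and `Base.gc_live_bytes` are unexported Base functions, and `Sys.maxrss` reports the peak resident set size, not the current one.

```julia
# Hypothetical stand-in for the sub-sampled data kept at the end of a session.
data = [rand(100) for _ in 1:10^4]

reachable = Base.summarysize(data)   # bytes reachable from `data`
live      = Base.gc_live_bytes()     # bytes the GC currently tracks as live
peak_rss  = Sys.maxrss()             # peak resident set size of the process

to_mib(bytes) = round(bytes / 2^20; digits = 1)

println("reachable from data: ", to_mib(reachable), " MiB")
println("GC live bytes:       ", to_mib(live), " MiB")
println("peak process RSS:    ", to_mib(peak_rss), " MiB")
```

Even in a small session the resident size is far larger than the data itself, because it also covers the runtime and compiled code; the concern in this comment is when that difference grows to gigabytes after large temporary allocations.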

|

|

Upstream bug has been closed https://sourceware.org/bugzilla/show_bug.cgi?id=27103#c2

On my ubuntu, neither |

|

I haven't been following the discussion in detail, but have encountered it elsewhere. It seems to me that some correspondents miss the point that any allocation located above blocks of free memory will prevent Also in the case of multiple threaded allocations tl;dr |

This attitude is really flawed. In my example the systemd-oomd is killing Julia because it's taking 75% of the system RAM even though only 1% of that ram is actually in use by Julia. |

|

Yeah, this is a problem. At a minimum, it should be possible to deliberately ask Julia to compact its memory. I'm currently looking at an 8 GB process. All it does is load data and save it; it shouldn't retain any references. The memory might be needed again in a day or a week, but not immediately. |

|

@BioTurboNick Could you try #47062 in your workload? And maybe compare the performance (runtime and max memory)? |

@gbaraldi what would I need to do? |

|

Just build julia on my PR and try your workload ;) |

This problem was originally reported in the helpdesk Slack against DataFrames, but I believe I have replicated it just using vectors of vectors.

OP was using Julia 1.5.0 with DataFrames 1.2.2 on Linux

I used Julia 1.6.2 on WSL2 Ubuntu

Just using vectors, everything behaves as expected and Julia's allocated memory does not increase:

Using vectors of vectors, there seems to be a memory leak:

Please feel free to close if this is a vagary of Linux memory management.
