Object detection is a powerful tool in the hands of a computer vision engineer.

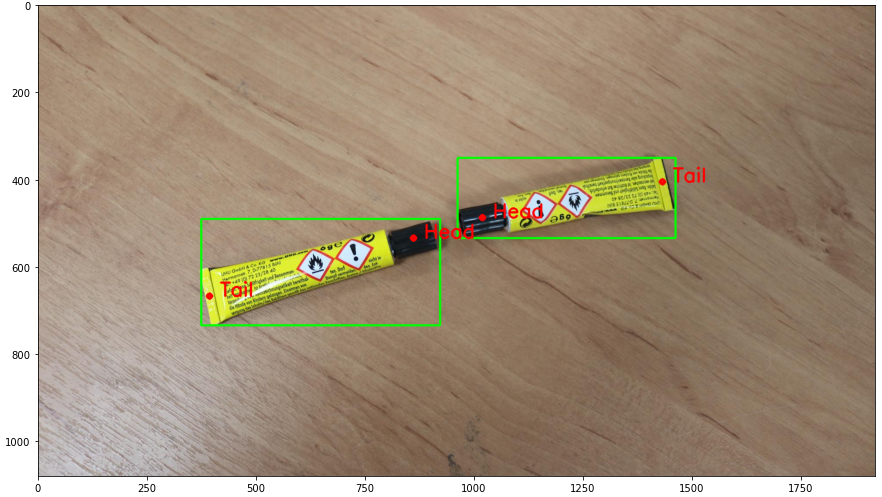

However, object detection models such as YOLOv5 only predict the coordinates of an object's bounding box. They don't provide any information about the object's orientation in space.

That information can be obtained from the coordinates of the object's keypoints.

I wanted to learn how to train a keypoint detection model, Keypoint R-CNN, on a custom dataset. For that, I needed a simple dataset with objects of a single class, where each object is annotated with no more than 2 keypoints, which is enough to identify its orientation in space.
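To illustrate why two keypoints are enough, here is a minimal sketch that computes an in-plane orientation angle from two keypoints. The function name `orientation_degrees` and the keypoint names `head` and `tail` are hypothetical and chosen only for this example; the repository's own scripts may do this differently.

```python
import math


def orientation_degrees(head, tail):
    """Return the angle of the tail -> head vector in degrees.

    Both points are (x, y) pixel coordinates. Image coordinates are
    assumed, so y grows downward and a positive angle means the
    vector is rotated toward the bottom of the image.
    The result lies in the range (-180, 180].
    """
    dx = head[0] - tail[0]
    dy = head[1] - tail[1]
    return math.degrees(math.atan2(dy, dx))


if __name__ == "__main__":
    # Hypothetical keypoints of one glue tube, in pixels.
    print(orientation_degrees(head=(320, 240), tail=(120, 240)))
```

A bounding box alone cannot distinguish a tube pointing left from one pointing right; the direction of the vector between the two keypoints can.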

A glue tube was an ideal candidate for such an object. So I took 134 photos and annotated them in Roboflow.

Roboflow doesn't provide keypoint annotation functionality, but the task is easy to work around by marking each keypoint with a small rectangle.

With the help of a custom script, we can convert these annotations

into these annotations

A tutorial on how to create rectangle annotations in Roboflow and convert them into keypoints is here:

https://medium.com/@alexppppp/how-to-annotate-keypoints-using-roboflow-9bc2aa8915cd

A tutorial on how to train a custom keypoint detection model is here

This repository contains two folders.

glue_tubes is a folder with the original dataset (images only). This dataset is meant to be labeled in Roboflow. To save time, you don't need to label it yourself: it is already labeled, and the result is in the Glue Tubes Keypoints Project.v1-version-1.yolov5pytorch folder.

Glue Tubes Keypoints Project.v1-version-1.yolov5pytorch is a folder with the Roboflow-labeled dataset (images and txt files with the coordinates of the rectangles). The rectangle labels in the txt files are ready to be converted into keypoints and bounding boxes.
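The conversion can be sketched roughly as follows. This is not the script from the tutorial, only an illustration under stated assumptions: each txt line follows the YOLO convention `class x_center y_center width height` with values normalized to the image size, class `0` is the object's bounding box, every other class is a keypoint rectangle, and there is one object per image. The class ids and the function name `convert_labels` are hypothetical, so check them against the class list in the exported dataset.

```python
def convert_labels(label_text, img_w, img_h, box_class=0):
    """Convert YOLO-style rectangle labels into bounding boxes and keypoints.

    Rectangles of class `box_class` become [x1, y1, x2, y2] boxes in pixels.
    Rectangles of any other class become keypoints located at the
    rectangle's center, ordered by class id.
    Assumes a single annotated object per image.
    """
    bboxes = []
    keypoints = []
    for line in label_text.splitlines():
        if not line.strip():
            continue  # skip empty lines
        cls, xc, yc, w, h = line.split()
        cls = int(cls)
        # Scale normalized values to pixel coordinates.
        xc, w = float(xc) * img_w, float(w) * img_w
        yc, h = float(yc) * img_h, float(h) * img_h
        if cls == box_class:
            bboxes.append([xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2])
        else:
            keypoints.append((cls, xc, yc))
    keypoints.sort()  # stable keypoint order: lowest class id first
    return bboxes, [[x, y] for _, x, y in keypoints]


if __name__ == "__main__":
    # Made-up label content for a 100x200 image, for illustration only.
    sample = "0 0.5 0.5 0.4 0.2\n1 0.4 0.5 0.02 0.01\n2 0.6 0.5 0.02 0.01"
    print(convert_labels(sample, img_w=100, img_h=200))
```

Using the rectangle's center as the keypoint means the exact size of the small rectangles drawn in Roboflow does not matter, only where they are placed.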