
[SPARK-23433][SPARK-25250] [CORE][BRANCH-2.3] Later created TaskSet should learn about the finished partitions #24006


… doesn't exist

## What changes were proposed in this pull request?

This PR proposes to follow up apache#15153 and complete SPARK-17599.

The `FileSystem` operation (`fs.getFileBlockLocations`) can still fail if the file path does not exist. For example, see the exception message below:

```
Error occurred while processing: File does not exist: /rel/00171151/input/PJ/part-00136-b6403bac-a240-44f8-a792-fc2e174682b7-c000.csv
...
java.io.FileNotFoundException: File does not exist: /rel/00171151/input/PJ/part-00136-b6403bac-a240-44f8-a792-fc2e174682b7-c000.csv
...
org.apache.hadoop.hdfs.DistributedFileSystem.getFileBlockLocations(DistributedFileSystem.java:249)
	at org.apache.hadoop.hdfs.DistributedFileSystem.getFileBlockLocations(DistributedFileSystem.java:229)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$$anonfun$org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$listLeafFiles$3.apply(InMemoryFileIndex.scala:314)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$$anonfun$org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$listLeafFiles$3.apply(InMemoryFileIndex.scala:297)
	at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
	at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
	at scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33)
	at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186)
	at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)
	at scala.collection.mutable.ArrayOps$ofRef.map(ArrayOps.scala:186)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$.org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$listLeafFiles(InMemoryFileIndex.scala:297)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$$anonfun$org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$bulkListLeafFiles$1.apply(InMemoryFileIndex.scala:174)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$$anonfun$org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$bulkListLeafFiles$1.apply(InMemoryFileIndex.scala:173)
	at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
	at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
	at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
	at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)
	at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)
	at scala.collection.AbstractTraversable.map(Traversable.scala:104)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex$.org$apache$spark$sql$execution$datasources$InMemoryFileIndex$$bulkListLeafFiles(InMemoryFileIndex.scala:173)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex.listLeafFiles(InMemoryFileIndex.scala:126)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex.refresh0(InMemoryFileIndex.scala:91)
	at org.apache.spark.sql.execution.datasources.InMemoryFileIndex.<init>(InMemoryFileIndex.scala:67)
	at org.apache.spark.sql.execution.datasources.DataSource.tempFileIndex$lzycompute$1(DataSource.scala:161)
	at org.apache.spark.sql.execution.datasources.DataSource.org$apache$spark$sql$execution$datasources$DataSource$$tempFileIndex$1(DataSource.scala:152)
	at org.apache.spark.sql.execution.datasources.DataSource.getOrInferFileFormatSchema(DataSource.scala:166)
	at org.apache.spark.sql.execution.datasources.DataSource.sourceSchema(DataSource.scala:261)
	at org.apache.spark.sql.execution.datasources.DataSource.sourceInfo$lzycompute(DataSource.scala:94)
	at org.apache.spark.sql.execution.datasources.DataSource.sourceInfo(DataSource.scala:94)
	at org.apache.spark.sql.execution.streaming.StreamingRelation$.apply(StreamingRelation.scala:33)
	at org.apache.spark.sql.streaming.DataStreamReader.load(DataStreamReader.scala:196)
	at org.apache.spark.sql.streaming.DataStreamReader.load(DataStreamReader.scala:206)
	at com.hwx.StreamTest$.main(StreamTest.scala:97)
	at com.hwx.StreamTest.main(StreamTest.scala)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)
	at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:906)
	at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:197)
	at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:227)
	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:136)
	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: org.apache.hadoop.ipc.RemoteException(java.io.FileNotFoundException): File does not exist: /rel/00171151/input/PJ/part-00136-b6403bac-a240-44f8-a792-fc2e174682b7-c000.csv
...
```

So this PR makes it log a warning instead of failing.

## How was this patch tested?

It's hard to write a test. Manually tested multiple times.

Author: hyukjinkwon <[email protected]>

Closes apache#21408 from HyukjinKwon/missing-files.

(cherry picked from commit 8a54582)

Signed-off-by: hyukjinkwon <[email protected]>

…y filters. ## What changes were proposed in this pull request? I missed this commit when preparing apache#21070. When Parquet is able to filter blocks with dictionary filtering, the expected total value count is too high in Spark, leading to an error when there are fewer row groups to process than expected. Spark should get the row groups from Parquet to pick up new filter schemes in Parquet like dictionary filtering. ## How was this patch tested? Using in production at Netflix. Added test case for dictionary-filtered blocks. Author: Ryan Blue <[email protected]> Closes apache#21295 from rdblue/SPARK-24230-fix-parquet-block-tracking. (cherry picked from commit 3469f5c) Signed-off-by: Wenchen Fan <[email protected]>

## What changes were proposed in this pull request? Fix `date_trunc` function incorrect examples. ## How was this patch tested? N/A Author: Yuming Wang <[email protected]> Closes apache#21423 from wangyum/SPARK-24378.

## What changes were proposed in this pull request? SPARK-17874 introduced a new configuration to set the port where SSL services bind to. However, we forgot to update the scaladoc and the `toString` method. This PR adds it in the missing places. ## How was this patch tested? Checked the `toString` output in the logs. Author: Marco Gaido <[email protected]> Closes apache#21429 from mgaido91/minor_ssl. (cherry picked from commit fd315f5) Signed-off-by: Marcelo Vanzin <[email protected]>

… to configuration. ## What changes were proposed in this pull request? Spark provides four codecs: `lz4`, `lzf`, `snappy`, and `zstd`. This PR adds the missing shortCompressionCodecNames to the configuration. ## How was this patch tested? Manually tested. Author: Yuming Wang <[email protected]> Closes apache#21431 from wangyum/SPARK-19112. (cherry picked from commit ed1a654) Signed-off-by: hyukjinkwon <[email protected]>

…shutdown of Arrow memory allocator

## What changes were proposed in this pull request?

There is a race condition of closing Arrow VectorSchemaRoot and Allocator in the writer thread of ArrowPythonRunner.

The race results in a memory leak exception when closing the allocator. This patch removes the closing routine from the TaskCompletionListener and makes the writer thread responsible for cleaning up the Arrow memory.

This issue can be reproduced by this test:

```
def test_memory_leak(self):
    from pyspark.sql.functions import pandas_udf, col, PandasUDFType, array, lit, explode

    # Have all data in a single executor thread so it can trigger the race condition easier
    with self.sql_conf({'spark.sql.shuffle.partitions': 1}):
        df = self.spark.range(0, 1000)
        df = df.withColumn('id', array([lit(i) for i in range(0, 300)])) \
               .withColumn('id', explode(col('id'))) \
               .withColumn('v', array([lit(i) for i in range(0, 1000)]))

        @pandas_udf(df.schema, PandasUDFType.GROUPED_MAP)
        def foo(pdf):
            xxx  # undefined name, so the UDF fails when it runs
            return pdf

        result = df.groupby('id').apply(foo)

        with QuietTest(self.sc):
            with self.assertRaises(py4j.protocol.Py4JJavaError) as context:
                result.count()
            self.assertTrue('Memory leaked' not in str(context.exception))
```

Note: Because of the race condition, the test case cannot reproduce the issue reliably so it's not added to test cases.

## How was this patch tested?

Because of the race condition, the bug cannot be unit tested easily. So far it has only happened on large amounts of data. This is currently tested manually.

Author: Li Jin <[email protected]>

Closes apache#21397 from icexelloss/SPARK-24334-arrow-memory-leak.

(cherry picked from commit 672209f)

Signed-off-by: hyukjinkwon <[email protected]>

The pandas_udf functionality was introduced in 2.3.0, but is not completely stable and still evolving. This adds a label to indicate it is still an experimental API. NA Author: Bryan Cutler <[email protected]> Closes apache#21435 from BryanCutler/arrow-pandas_udf-experimental-SPARK-24392. (cherry picked from commit fa2ae9d) Signed-off-by: hyukjinkwon <[email protected]>

…and KeyValueGroupedDataset's child When we create a `RelationalGroupedDataset` or a `KeyValueGroupedDataset` we set its child to the `logicalPlan` of the `DataFrame` we need to aggregate. Since the `logicalPlan` is already analyzed, we should not analyze it again. But this happens when the new plan of the aggregate is analyzed. In most cases the current behavior is likely harmless, but in other cases re-analyzing an analyzed plan can change it, since the analysis is not idempotent. This can cause issues like the one described in the JIRA (failing to find a cached plan). The PR adds an `AnalysisBarrier` to the `logicalPlan` which is used as child of `RelationalGroupedDataset` or a `KeyValueGroupedDataset`. Added UT. Author: Marco Gaido <[email protected]> Closes apache#21432 from mgaido91/SPARK-24373. (cherry picked from commit de01a8d) Signed-off-by: Wenchen Fan <[email protected]>

…amePrefix

## What changes were proposed in this pull request?

`Random.nextString` is good for generating random string data, but it is not suitable for a directory name prefix, as in `Utils.createDirectory(tempDir, Random.nextString(10))`. This PR uses a safer directory namePrefix.

```scala
scala> scala.util.Random.nextString(10)
res0: String = 馨쭔ᎰႻ穚䃈兩㻞藑並
```

```scala
StateStoreRDDSuite:
- versioning and immutability
- recovering from files
- usage with iterators
- only gets and only puts
- preferred locations using StateStoreCoordinator *** FAILED ***
  java.io.IOException: Failed to create a temp directory (under /.../spark/sql/core/target/tmp/StateStoreRDDSuite8712796397908632676) after 10 attempts!
  at org.apache.spark.util.Utils$.createDirectory(Utils.scala:295)
  at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13$$anonfun$apply$6.apply(StateStoreRDDSuite.scala:152)
  at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13$$anonfun$apply$6.apply(StateStoreRDDSuite.scala:149)
  at org.apache.spark.sql.catalyst.util.package$.quietly(package.scala:42)
  at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13.apply(StateStoreRDDSuite.scala:149)
  at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13.apply(StateStoreRDDSuite.scala:149)
  ...
- distributed test *** FAILED ***
  java.io.IOException: Failed to create a temp directory (under /.../spark/sql/core/target/tmp/StateStoreRDDSuite8712796397908632676) after 10 attempts!
  at org.apache.spark.util.Utils$.createDirectory(Utils.scala:295)
```

## How was this patch tested?

Pass the existing tests (StateStoreRDDSuite).

Author: Dongjoon Hyun <[email protected]>

Closes apache#21446 from dongjoon-hyun/SPARK-19613.

(cherry picked from commit b31b587)

Signed-off-by: hyukjinkwon <[email protected]>
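As a rough illustration of the idea (not the Scala code from this PR), the Python sketch below builds a directory prefix from ASCII letters and digits only, so the name is valid on any file system. The helper names `safe_prefix` and `create_directory` are hypothetical.

```python
import os
import random
import string
import tempfile

# Characters that are safe in directory names on common file systems.
ALPHANUMERIC = string.ascii_letters + string.digits


def safe_prefix(length=10, rng=random):
    """Return a random prefix made only of ASCII letters and digits."""
    return "".join(rng.choice(ALPHANUMERIC) for _ in range(length))


def create_directory(root, prefix):
    """Create a uniquely named directory under root whose name starts with prefix."""
    return tempfile.mkdtemp(prefix=prefix + "-", dir=root)


if __name__ == "__main__":
    root = tempfile.mkdtemp()
    print(os.path.basename(create_directory(root, safe_prefix())))
```

Restricting the alphabet trades a little entropy for names that never depend on the platform's encoding or file-name rules.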

…eBlocksToBatch When blocks are being allocated to a batch and the WAL write fails, the blocks are still removed from the received block queue. This causes data loss because the next allocation will not find those blocks in the queue. In this PR blocks are removed from the received queue only if the WAL write succeeded. Additional unit test. Author: Gabor Somogyi <[email protected]> Closes apache#21430 from gaborgsomogyi/SPARK-23991. Change-Id: I5ead84f0233f0c95e6d9f2854ac2ff6906f6b341 (cherry picked from commit aca65c6) Signed-off-by: jerryshao <[email protected]>
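The ordering described above (write to the log first, dequeue only on success) can be sketched in Python. This is a toy model under stated assumptions, not the `ReceivedBlockTracker` code: the class `ReceivedBlockQueue` and the `write_to_log` callback are hypothetical.

```python
from collections import deque


class ReceivedBlockQueue:
    """Toy stand-in for a received-block queue guarded by a write-ahead log."""

    def __init__(self, write_to_log):
        self._blocks = deque()
        # Hypothetical callback: returns True when the WAL write succeeded.
        self._write_to_log = write_to_log

    def add(self, block):
        self._blocks.append(block)

    def allocate_to_batch(self):
        # Take a snapshot first; the queue itself stays untouched.
        candidates = list(self._blocks)
        if not self._write_to_log(candidates):
            # WAL write failed: keep the blocks for the next allocation.
            return []
        # WAL write succeeded: only now remove the allocated blocks.
        for _ in candidates:
            self._blocks.popleft()
        return candidates

    def pending(self):
        return list(self._blocks)
```

With a failing log writer, `allocate_to_batch()` returns an empty batch and `pending()` still lists every block, so a later allocation can retry them.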

…s having the same argument set

## What changes were proposed in this pull request?

This pr fixed an issue when having multiple distinct aggregations having the same argument set, e.g.,

```
scala> :paste
val df = sql(
  s"""SELECT corr(DISTINCT x, y), corr(DISTINCT y, x), count(*)
     |  FROM (VALUES (1, 1), (2, 2), (2, 2)) t(x, y)
   """.stripMargin)

java.lang.RuntimeException
You hit a query analyzer bug. Please report your query to Spark user mailing list.
```

The root cause is that `RewriteDistinctAggregates` can't detect multiple distinct aggregations if they have the same argument set. This pr modified code so that `RewriteDistinctAggregates` could count the number of aggregate expressions with `isDistinct=true`.

## How was this patch tested?

Added tests in `DataFrameAggregateSuite`.

Author: Takeshi Yamamuro <[email protected]>

Closes apache#21443 from maropu/SPARK-24369.

(cherry picked from commit 1e46f92)

Signed-off-by: Wenchen Fan <[email protected]>

…code ## What changes are proposed Make sure that `StopIteration`s raised in users' code do not silently interrupt processing by spark, but are raised as exceptions to the users. The users' functions are wrapped in `safe_iter` (in `shuffle.py`), which re-raises `StopIteration`s as `RuntimeError`s ## How were the changes tested Unit tests, making sure that the exceptions are indeed raised. I am not sure how to check whether a `Py4JJavaError` contains my exception, so I simply looked for the exception message in the java exception's `toString`. Can you propose a better way? This is my original work, licensed in the same way as spark --- Author: e-dorigatti <emilio.dorigattigmail.com> Closes apache#21383 from e-dorigatti/fix_spark_23754. (cherry picked from commit 0ebb0c0) Author: e-dorigatti <[email protected]> Closes apache#21463 from e-dorigatti/branch-2.3.

…onRunner in submit with client mode in spark-submit

## What changes were proposed in this pull request?

In client mode, `.py` files passed via `--py-files` cannot be imported before the context is initialized when the application is a Python file. See below:

```
$ cat /home/spark/tmp.py
def testtest():
    return 1
```

This works:

```
$ cat app.py
import pyspark
pyspark.sql.SparkSession.builder.getOrCreate()
import tmp
print("************************%s" % tmp.testtest())

$ ./bin/spark-submit --master yarn --deploy-mode client --py-files /home/spark/tmp.py app.py
...
************************1
```

but this doesn't:

```
$ cat app.py
import pyspark
import tmp
pyspark.sql.SparkSession.builder.getOrCreate()
print("************************%s" % tmp.testtest())

$ ./bin/spark-submit --master yarn --deploy-mode client --py-files /home/spark/tmp.py app.py
Traceback (most recent call last):
  File "/home/spark/spark/app.py", line 2, in <module>
    import tmp
ImportError: No module named tmp
```

### How did it happen?

In client mode specifically, the paths are added into PythonRunner as they are:

https://github.com/apache/spark/blob/628c7b517969c4a7ccb26ea67ab3dd61266073ca/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala#L430

https://github.com/apache/spark/blob/628c7b517969c4a7ccb26ea67ab3dd61266073ca/core/src/main/scala/org/apache/spark/deploy/PythonRunner.scala#L49-L88

The problem here is that a .py file shouldn't be added as is, since `PYTHONPATH` expects a directory or an archive like zip or egg.

### How does this PR fix?

We shouldn't simply just add its parent directory because other files in the parent directory could also be added into the `PYTHONPATH` in client mode before context initialization.

Therefore, we copy .py files into a temp directory for .py files and add it to `PYTHONPATH`.
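The approach can be sketched in Python as follows. This is only an illustration of the idea, not the Scala code in `PythonRunner`; the function name `stage_py_files` and the `pyfiles-` prefix are hypothetical.

```python
import os
import shutil
import sys
import tempfile


def stage_py_files(py_files):
    """Copy standalone .py files into one fresh temp dir and return path entries.

    Directories and archives are returned unchanged; the temp dir replaces
    the individual .py files so their parent directories are never exposed.
    """
    entries = []
    staging_dir = None
    for path in py_files:
        if path.endswith(".py"):
            if staging_dir is None:
                staging_dir = tempfile.mkdtemp(prefix="pyfiles-")
                entries.append(staging_dir)
            shutil.copy(path, staging_dir)
        else:
            # Directories and archives (zip, egg) can go on the path as they are.
            entries.append(path)
    return entries
```

A caller would then prepend the returned entries to `sys.path` (or join them with `os.pathsep` into `PYTHONPATH`), which makes `import tmp` work without exposing other files that sit next to `tmp.py`.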

## How was this patch tested?

Unit tests are added, and the change was manually tested in both standalone and yarn client modes with submit.

Author: hyukjinkwon <[email protected]>

Closes apache#21426 from HyukjinKwon/SPARK-24384.

(cherry picked from commit b142157)

Signed-off-by: Marcelo Vanzin <[email protected]>

As discussed separately, this avoids the possibility of XSS on certain request param keys. CC vanzin Author: Sean Owen <[email protected]> Closes apache#21464 from srowen/XSS2. (cherry picked from commit 698b9a0) Signed-off-by: Marcelo Vanzin <[email protected]>

This change takes into account all non-pending tasks when calculating the number of tasks to be shown. This also means that when the stage is pending, the task table (or, in fact, most of the data in the stage page) will not be rendered. I also fixed the label when the known number of tasks is larger than the recorded number of tasks (it was inverted). Author: Marcelo Vanzin <[email protected]> Closes apache#21457 from vanzin/SPARK-24414. (cherry picked from commit 7a82e93) Signed-off-by: Marcelo Vanzin <[email protected]>

…plain column assignment ## What changes were proposed in this pull request? Added sections to pandas_udf docs, in the grouped map section, to indicate columns are assigned by position. Backported to branch-2.3. ## How was this patch tested? NA Author: Bryan Cutler <[email protected]> Closes apache#21478 from BryanCutler/arrow-doc-pandas_udf-column_by_pos-2_3_1-SPARK-21427.

…regations having the same argument set" This reverts commit 66289a3.

Change runTasks to submitTasks in a comment in TaskSchedulerImpl.scala. Author: xueyu <[email protected]> Author: Xue Yu <[email protected]> Closes apache#21485 from xueyumusic/fixtypo1. (cherry picked from commit a2166ec) Signed-off-by: hyukjinkwon <[email protected]>

…s having the same argument set ## What changes were proposed in this pull request? bring back apache#21443 This is a different approach: just change the check to count distinct columns with `toSet` ## How was this patch tested? a new test to verify the planner behavior. Author: Wenchen Fan <[email protected]> Author: Takeshi Yamamuro <[email protected]> Closes apache#21487 from cloud-fan/back. (cherry picked from commit 416cd1f) Signed-off-by: Xiao Li <[email protected]>
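To see why comparing argument lists as sets matters, here is a small Python sketch (an illustration only, not the planner code). `corr(DISTINCT x, y)` and `corr(DISTINCT y, x)` differ as ordered argument lists but share one argument set; both function names below are hypothetical.

```python
def count_argument_groups_ordered(distinct_aggs):
    """Count groups when argument lists are compared as ordered sequences."""
    return len({tuple(args) for args in distinct_aggs})


def count_argument_groups_as_sets(distinct_aggs):
    """Count groups when argument lists are compared as unordered sets."""
    return len({frozenset(args) for args in distinct_aggs})


# Arguments of corr(DISTINCT x, y) and corr(DISTINCT y, x).
aggs = [("x", "y"), ("y", "x")]
print(count_argument_groups_ordered(aggs))  # 2
print(count_argument_groups_as_sets(aggs))  # 1
```

A check based on ordered sequences sees two distinct groups here, while a set-based check sees one, which is the distinction the `toSet` change relies on.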

… decimal operations ## What changes were proposed in this pull request? In SPARK-22036 we introduced the possibility to allow precision loss in arithmetic operations (according to the SQL standard). The implementation was drawn from Hive's one, where Decimals with a negative scale are not allowed in the operations. The PR handles the case when the scale is negative, removing the assertion that it is not. ## How was this patch tested? added UTs Author: Marco Gaido <[email protected]> Closes apache#21499 from mgaido91/SPARK-24468. (cherry picked from commit f07c506) Signed-off-by: Wenchen Fan <[email protected]>

Apply the suggestion on the bug to fix source links. Tested with the 2.3.1 release docs. Author: Marcelo Vanzin <[email protected]> Closes apache#21521 from vanzin/SPARK-23732.

## What changes were proposed in this pull request?

`UnsafeRowSerializerSuite` calls `UnsafeProjection.create` which accesses `SQLConf.get`, while the current active SparkSession may already be stopped, and we may hit exception like this

```
sbt.ForkMain$ForkError: java.lang.IllegalStateException: LiveListenerBus is stopped.
	at org.apache.spark.scheduler.LiveListenerBus.addToQueue(LiveListenerBus.scala:97)
	at org.apache.spark.scheduler.LiveListenerBus.addToStatusQueue(LiveListenerBus.scala:80)
	at org.apache.spark.sql.internal.SharedState.<init>(SharedState.scala:93)
	at org.apache.spark.sql.SparkSession$$anonfun$sharedState$1.apply(SparkSession.scala:120)
	at org.apache.spark.sql.SparkSession$$anonfun$sharedState$1.apply(SparkSession.scala:120)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.sql.SparkSession.sharedState$lzycompute(SparkSession.scala:120)
	at org.apache.spark.sql.SparkSession.sharedState(SparkSession.scala:119)
	at org.apache.spark.sql.internal.BaseSessionStateBuilder.build(BaseSessionStateBuilder.scala:286)
	at org.apache.spark.sql.test.TestSparkSession.sessionState$lzycompute(TestSQLContext.scala:42)
	at org.apache.spark.sql.test.TestSparkSession.sessionState(TestSQLContext.scala:41)
	at org.apache.spark.sql.SparkSession$$anonfun$1$$anonfun$apply$1.apply(SparkSession.scala:95)
	at org.apache.spark.sql.SparkSession$$anonfun$1$$anonfun$apply$1.apply(SparkSession.scala:95)
	at scala.Option.map(Option.scala:146)
	at org.apache.spark.sql.SparkSession$$anonfun$1.apply(SparkSession.scala:95)
	at org.apache.spark.sql.SparkSession$$anonfun$1.apply(SparkSession.scala:94)
	at org.apache.spark.sql.internal.SQLConf$.get(SQLConf.scala:126)
	at org.apache.spark.sql.catalyst.expressions.CodeGeneratorWithInterpretedFallback.createObject(CodeGeneratorWithInterpretedFallback.scala:54)
	at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.create(Projection.scala:157)
	at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.create(Projection.scala:150)
	at org.apache.spark.sql.execution.UnsafeRowSerializerSuite.org$apache$spark$sql$execution$UnsafeRowSerializerSuite$$unsafeRowConverter(UnsafeRowSerializerSuite.scala:54)
	at org.apache.spark.sql.execution.UnsafeRowSerializerSuite.org$apache$spark$sql$execution$UnsafeRowSerializerSuite$$toUnsafeRow(UnsafeRowSerializerSuite.scala:49)
	at org.apache.spark.sql.execution.UnsafeRowSerializerSuite$$anonfun$2.apply(UnsafeRowSerializerSuite.scala:63)
	at org.apache.spark.sql.execution.UnsafeRowSerializerSuite$$anonfun$2.apply(UnsafeRowSerializerSuite.scala:60)
...
```

## How was this patch tested?

N/A

Author: Wenchen Fan <[email protected]>

Closes apache#21518 from cloud-fan/test.

(cherry picked from commit 01452ea)

Signed-off-by: Wenchen Fan <[email protected]>

…veExternalCatalogVersionsSuite ## What changes were proposed in this pull request? Removing version 2.2.0 from testing versions in HiveExternalCatalogVersionsSuite as it is not present anymore in the mirrors and this is blocking all the open PRs. ## How was this patch tested? running UTs Author: Marco Gaido <[email protected]> Closes apache#21540 from mgaido91/SPARK-24531. (cherry picked from commit 2824f14) Signed-off-by: Xiao Li <[email protected]>

## What changes were proposed in this pull request? Currently, `spark.ui.filters` are not applied to the handlers added after binding the server. This means that every page which is added after starting the UI will not have the filters configured on it. This can allow unauthorized access to the pages. The PR adds the filters also to the handlers added after the UI starts. ## How was this patch tested? manual tests (without the patch, starting the thriftserver with `--conf spark.ui.filters=org.apache.hadoop.security.authentication.server.AuthenticationFilter --conf spark.org.apache.hadoop.security.authentication.server.AuthenticationFilter.params="type=simple"` you can access `http://localhost:4040/sqlserver`; with the patch, 401 is the response as for the other pages). Author: Marco Gaido <[email protected]> Closes apache#21523 from mgaido91/SPARK-24506. (cherry picked from commit f53818d) Signed-off-by: Marcelo Vanzin <[email protected]>

… wrapping from driver to executor SPARK-23754 was fixed in apache#21383 by changing the UDF code to wrap the user function, but this required a hack to save its argspec. This PR reverts this change and fixes the `StopIteration` bug in the worker. The root of the problem is that when a user-supplied function raises a `StopIteration`, pyspark might stop processing data if this function is used in a for-loop. The solution is to catch `StopIteration` exceptions and re-raise them as `RuntimeError`s, so that the execution fails and the error is reported to the user. This is done using the `fail_on_stopiteration` wrapper, in different ways depending on where the function is used: - In RDDs, the user function is wrapped in the driver, because this function is also called in the driver itself. - In SQL UDFs, the function is wrapped in the worker, since all processing happens there. Moreover, the worker needs the signature of the user function, which is lost when wrapping it, but passing this signature to the worker requires a not so nice hack. HyukjinKwon Author: edorigatti <[email protected]> Author: e-dorigatti <[email protected]> Closes apache#21538 from e-dorigatti/branch-2.3.
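A minimal sketch of such a wrapper, written here only for illustration (the name `fail_on_stopiteration` comes from the commit message, but this body and the sample `user_func` are assumptions, not the pyspark source):

```python
import functools


def fail_on_stopiteration(f):
    """Wrap f so a StopIteration escaping it becomes a RuntimeError."""
    @functools.wraps(f)
    def wrapper(*args, **kwargs):
        try:
            return f(*args, **kwargs)
        except StopIteration as exc:
            raise RuntimeError("StopIteration raised in user code") from exc
    return wrapper


def user_func(x):
    # Hypothetical buggy user function.
    if x == 2:
        raise StopIteration()
    return x * 10


# Unwrapped: map() lets the StopIteration through, so iteration ends early
# and the remaining input is silently dropped.
print(list(map(user_func, [1, 2, 3])))  # [10]
```

Calling `list(map(fail_on_stopiteration(user_func), [1, 2, 3]))` instead raises a `RuntimeError`, so the failure is reported rather than hidden as a truncated result.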

## What changes were proposed in this pull request? We don't require a specific ordering of the input data, so the sort action is unnecessary and misleading. ## How was this patch tested? Existing test suite. Author: Xingbo Jiang <[email protected]> Closes apache#21536 from jiangxb1987/sorterSuite. (cherry picked from commit 534065e) Signed-off-by: hyukjinkwon <[email protected]>

…variable to the UI display …not synchronized to the UI display ## What changes were proposed in this pull request? The amount of memory used by the broadcast variable is not synchronized to the UI display. I added the case for BroadcastBlockId and updated the memory usage. ## How was this patch tested? We can test this patch with unit tests. Closes apache#23649 from httfighter/SPARK-26726. Lead-authored-by: 韩田田00222924 <[email protected]> Co-authored-by: [email protected] <[email protected]> Signed-off-by: Marcelo Vanzin <[email protected]> (cherry picked from commit f4a17e9) Signed-off-by: Marcelo Vanzin <[email protected]>

## What changes were proposed in this pull request? Previously a "java.lang.UnsupportedOperationException: empty collection" exception would be thrown due to using `reduce`, rather than `fold` or similar that can tolerate empty RDDs. This behaviour has existed for the Vertex RDDs since it was introduced in b30e0ae. It seems this behaviour was inherited by the Edge RDDs via copy-paste in ee29ef3. ## How was this patch tested? Two new unit tests. Closes apache#23681 from huonw/empty-graphx. Authored-by: Huon Wilson <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit da52698) Signed-off-by: Sean Owen <[email protected]>
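The same distinction exists in plain Python, which makes for a quick illustration (an analogy only, not GraphX code): `functools.reduce` without an initial value fails on empty input, while supplying a zero element makes the empty case well defined. The function names are hypothetical.

```python
from functools import reduce


def total_reduce(values):
    """Sum with reduce and no initial value; fails on an empty input."""
    return reduce(lambda a, b: a + b, values)


def total_fold(values, zero=0):
    """Sum with an explicit zero element, like a fold; safe on empty input."""
    return reduce(lambda a, b: a + b, values, zero)


print(total_fold([]))         # 0
print(total_fold([1, 2, 3]))  # 6
```

`total_reduce([])` raises a `TypeError` in Python, which plays the role of the "empty collection" exception mentioned above.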

## What changes were proposed in this pull request?

Right now, EventTimeStats.merge doesn't handle `zero.merge(zero)` correctly. This will make `avg` become `NaN`. And whatever gets merged with the result of `zero.merge(zero)`, `avg` will still be `NaN`. Then finally, we call `NaN.toLong` and get `0`, and the user will see the following incorrect report:

```
"eventTime" : {
  "avg" : "1970-01-01T00:00:00.000Z",
  "max" : "2019-01-31T12:57:00.000Z",
  "min" : "2019-01-30T18:44:04.000Z",
  "watermark" : "1970-01-01T00:00:00.000Z"
}
```

This issue was reported by liancheng .

This PR fixes the above issue.
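One way to make such a merge safe is to special-case empty operands before touching the running average. The Python sketch below shows the idea under stated assumptions; it is not the Scala `EventTimeStats` code, and the `Stats` class and its field names are hypothetical. (In Scala the unguarded division yields `NaN`; in Python it would raise `ZeroDivisionError`. The guard avoids both.)

```python
from dataclasses import dataclass


@dataclass
class Stats:
    max_ms: int
    min_ms: int
    avg: float
    count: int

    @staticmethod
    def zero():
        # Identity element: no events observed yet.
        return Stats(max_ms=-(2**63), min_ms=2**63 - 1, avg=0.0, count=0)

    def merge(self, that):
        if that.count == 0:
            return  # merging an empty summary changes nothing
        if self.count == 0:
            # We are empty: adopt the other summary wholesale.
            self.max_ms, self.min_ms = that.max_ms, that.min_ms
            self.avg, self.count = that.avg, that.count
            return
        self.max_ms = max(self.max_ms, that.max_ms)
        self.min_ms = min(self.min_ms, that.min_ms)
        self.count += that.count
        # Weighted update; self.count already holds the combined count.
        self.avg += (that.avg - self.avg) * that.count / self.count
```

With these guards, `zero.merge(zero)` stays a clean zero, and merging it with a real summary afterwards yields that summary's average instead of a poisoned value.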

## How was this patch tested?

The new unit tests.

Closes apache#23718 from zsxwing/merge-zero.

Authored-by: Shixiong Zhu <[email protected]>

Signed-off-by: Shixiong Zhu <[email protected]>

(cherry picked from commit 03a928c)

Signed-off-by: Shixiong Zhu <[email protected]>

…nd throw exception which is not HiveSQLException ## What changes were proposed in this pull request? When we run in the background and get an exception which is not a HiveSQLException, we may encounter a memory leak, since handleToOperation will not be cleaned up correctly. The reason is as follows: 1. When calling operation.run() in HiveSessionImpl#executeStatementInternal, we throw an exception which is not a HiveSQLException. 2. Then the opHandle generated by SparkSQLOperationManager will not be added into the opHandleSet of HiveSessionImpl, and operationManager.closeOperation(opHandle) will not be called. 3. When we close the session, we will also not call operationManager.closeOperation(opHandle), since we did not add this opHandle into the opHandleSet. For the reasons above, the opHandle will always remain in SparkSQLOperationManager#handleToOperation, which causes a memory leak. More details and a case are attached at https://issues.apache.org/jira/browse/SPARK-26751 This patch will always throw HiveSQLException when running in the background. ## How was this patch tested? Existing UTs. Closes apache#23673 from caneGuy/zhoukang/fix-hivesessionimpl-leak. Authored-by: zhoukang <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 255faaf) Signed-off-by: Sean Owen <[email protected]>

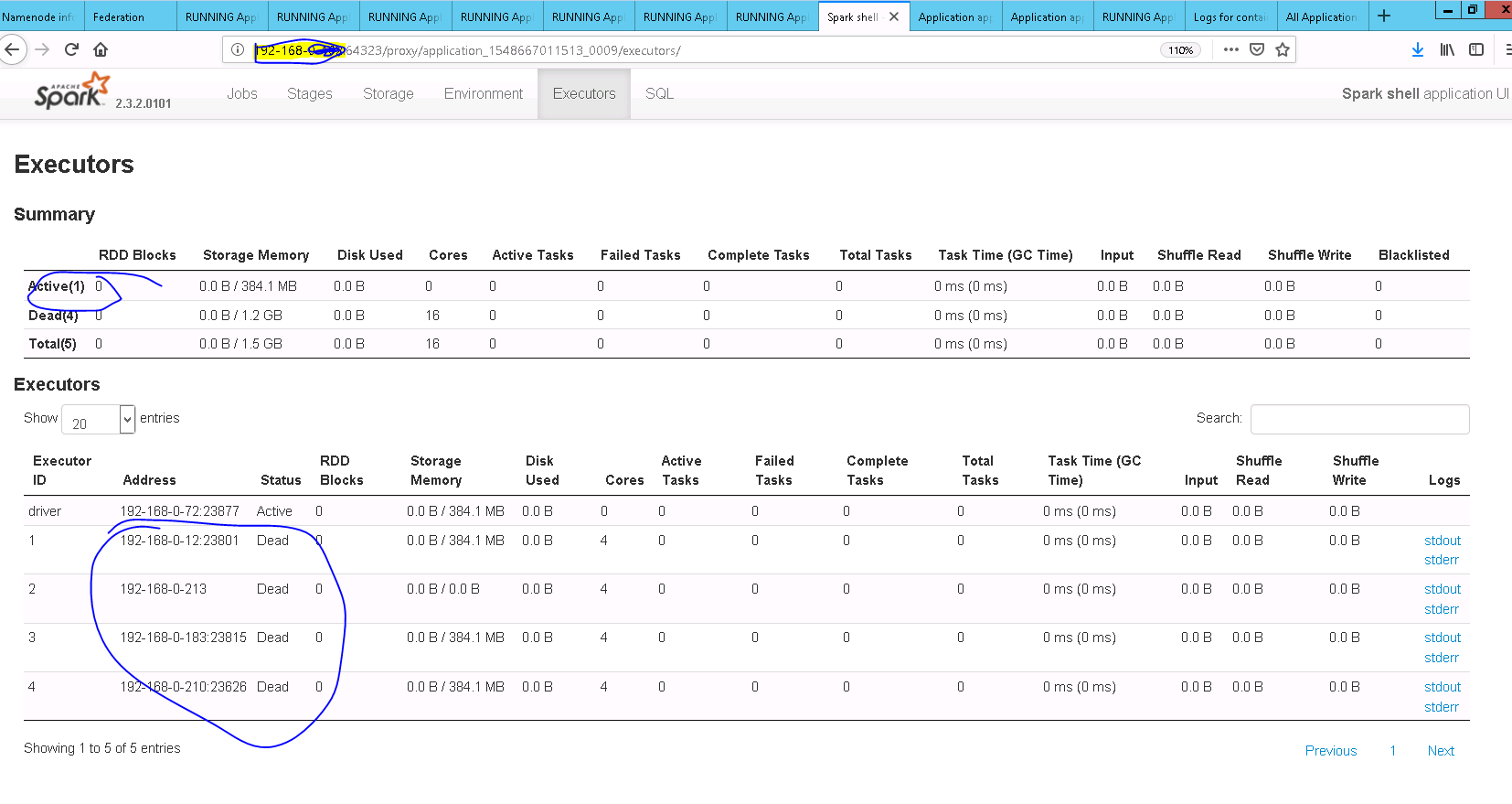

….dynamiAllocation.executorIdleTimeout value ## What changes were proposed in this pull request? **updateAndSyncNumExecutorsTarget** API should be called after **initializing** flag is unset ## How was this patch tested? Added UT and also manually tested After Fix  Closes apache#23697 from sandeep-katta/executorIssue. Authored-by: sandeep-katta <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 1dd7419) Signed-off-by: Sean Owen <[email protected]>

## What changes were proposed in this pull request? SPARK-23991 introduced a bug in `ReceivedBlockTracker#allocateBlocksToBatch`: when the queue holds more than a few thousand blocks, serializing the queue throws a StackOverflowError. This change just adds `dequeueAll` to the new `clone` operation on the queue so that the fix in 23991 is preserved but the serialized data comes from an ArrayBuffer, which doesn't have the serialization problems that mutable.Queue has. ## How was this patch tested? A unit test was added. Closes apache#23716 from rlodge/SPARK-26734. Authored-by: Ross Lodge <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 8427e9b) Signed-off-by: Sean Owen <[email protected]>

## What changes were proposed in this pull request? * change MesosClusterScheduler to use correct argument name for Mesos fetch cache (spark.mesos.fetchCache.enable -> spark.mesos.fetcherCache.enable) ## How was this patch tested? Not sure this requires a test, since it's just a string change. Closes apache#23734 from mwlon/SPARK-26082. Authored-by: mwlon <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit c0811e8) Signed-off-by: Dongjoon Hyun <[email protected]>

…sClusterScheduler ## What changes were proposed in this pull request? This patch adds UT on testing SPARK-26082 to avoid regression. While apache#23743 reduces the possibility to make a similar mistake, the needed lines of code for adding tests are not that huge, so I guess it might be worth to add them. ## How was this patch tested? Newly added UTs. Test "supports setting fetcher cache" fails when apache#23743 is not applied and succeeds when apache#23743 is applied. Closes apache#23744 from HeartSaVioR/SPARK-26082-add-unit-test. Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit b4e1d14) Signed-off-by: Dongjoon Hyun <[email protected]>

… on MesosClusterScheduler" This reverts commit 3abf45d.

…tion on MesosClusterScheduler ## What changes were proposed in this pull request? This patch adds UT on testing SPARK-26082 to avoid regression. While apache#23743 reduces the possibility to make a similar mistake, the needed lines of code for adding tests are not that huge, so I guess it might be worth to add them. ## How was this patch tested? Newly added UTs. Test "supports setting fetcher cache" fails when apache#23734 is not applied and succeeds when apache#23734 is applied. Closes apache#23754 from HeartSaVioR/SPARK-26082-branch-2.3. Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…in Streaming*JoinSuite ## What changes were proposed in this pull request? **The best way to review this PR is to ignore whitespace/indent changes. Use this link - https://github.com/apache/spark/pull/20650/files?w=1** The stream-stream join tests add data to multiple sources and expect it all to show up in the next batch. But there's a race condition; the new batch might trigger when only one of the AddData actions has been reached. Prior attempt to solve this issue by jose-torres in apache#20646 attempted to simultaneously synchronize on all memory sources together when consecutive AddData was found in the actions. However, this carries the risk of deadlock as well as unintended modification of stress tests (see the above PR for a detailed explanation). Instead, this PR attempts the following. - A new action called `StreamProgressBlockedActions` that allows multiple actions to be executed while the streaming query is blocked from making progress. This allows data to be added to multiple sources that are made visible simultaneously in the next batch. - An alias of `StreamProgressBlockedActions` called `MultiAddData` is explicitly used in the `Streaming*JoinSuites` to add data to two memory sources simultaneously. This should avoid unintentional modification of the stress tests (or any other test for that matter) while making sure that the flaky tests are deterministic. NOTE: This patch is modified a bit from origin PR (apache#20650) to cover DSv2 incompatibility between Spark 2.3 and 2.4: StreamingDataSourceV2Relation is a class for 2.3, whereas it is a case class for 2.4 ## How was this patch tested? Modified test cases in `Streaming*JoinSuites` where there are consecutive `AddData` actions. Closes apache#23757 from HeartSaVioR/fix-streaming-join-test-flakiness-branch-2.3. Lead-authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Co-authored-by: Tathagata Das <[email protected]> Signed-off-by: Sean Owen <[email protected]>

…cution reconfiguring ## What changes were proposed in this pull request? Remove queryExecutionThread.interrupt() from ContinuousExecution. As detailed in the JIRA, interrupting the thread is only relevant in the microbatch case; for continuous processing the query execution can quickly clean itself up without it. ## How was this patch tested? existing tests Author: Jose Torres <[email protected]> Closes apache#20622 from jose-torres/SPARK-23441.

## What changes were proposed in this pull request? Add a specific stop method for ContinuousExecution. The previous StreamExecution.stop() method had a race condition as applied to continuous processing: if the cancellation was round-tripped to the driver too quickly, the generic SparkException it caused would be reported as the query death cause. We earlier decided that SparkException should not be added to the StreamExecution.isInterruptionException() whitelist, so we need to ensure this never happens instead. ## How was this patch tested? Existing tests. I could consistently reproduce the previous flakiness by putting Thread.sleep(1000) between the first job cancellation and thread interruption in StreamExecution.stop(). Author: Jose Torres <[email protected]> Closes apache#21384 from jose-torres/fixKafka.

This PR is a correctness fix in `HashAggregateExec` code generation. It forces evaluation of result expressions before calling `consume()` to avoid multiple executions. This PR fixes a use case where an aggregate is nested into a broadcast join and appears on the "stream" side. The issue is that the broadcast join generates its own loop. Without forcing evaluation of the `resultExpressions` of `HashAggregateExec` before the join's loop, these expressions can be executed multiple times, giving incorrect results. New UT was added. Closes apache#23731 from peter-toth/SPARK-26572. Authored-by: Peter Toth <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 2228ee5) Signed-off-by: Wenchen Fan <[email protected]>

….1.x in HiveExternalCatalogVersionsSuite ## What changes were proposed in this pull request? This pr just removed workaround for 2.2.0 and 2.1.x in HiveExternalCatalogVersionsSuite. ## How was this patch tested? Pass the Jenkins. Closes apache#23817 from maropu/SPARK-26607-FOLLOWUP. Authored-by: Takeshi Yamamuro <[email protected]> Signed-off-by: Hyukjin Kwon <[email protected]> (cherry picked from commit e2b8cc6) Signed-off-by: Hyukjin Kwon <[email protected]>

…HiveExternalCatalogVersionsSuite ## What changes were proposed in this pull request? The maintenance release of `branch-2.3` (v2.3.3) vote passed, so this issue updates PROCESS_TABLES.testingVersions in HiveExternalCatalogVersionsSuite ## How was this patch tested? Pass the Jenkins. Closes apache#23816 from maropu/SPARK-26897-BRANCH-2.3. Authored-by: Takeshi Yamamuro <[email protected]> Signed-off-by: Sean Owen <[email protected]>

## What changes were proposed in this pull request? Backport SPARK-26873 (apache#23777) to branch-2.3. ## How was this patch tested? Existing tests to cover regressions. Closes apache#23832 from rdblue/SPARK-26873-branch-2.3. Authored-by: Ryan Blue <[email protected]> Signed-off-by: Marcelo Vanzin <[email protected]>

doesn't port cleanly to 2.4. we need this in branch-2.4 and branch-2.3 Author: Felix Cheung <[email protected]> Closes apache#23860 from felixcheung/2.4rdesc. (cherry picked from commit d857630) Signed-off-by: Felix Cheung <[email protected]>

## What changes were proposed in this pull request? apache#23852 doesn't port cleanly to 2.3. we need this in branch-2.4 and branch-2.3 Closes apache#23861 from felixcheung/2.3rdesc. Authored-by: Felix Cheung <[email protected]> Signed-off-by: Hyukjin Kwon <[email protected]>

…uble.NaN for all NaN values ## What changes were proposed in this pull request? Apache Spark uses the predefined `Float.NaN` and `Double.NaN` for NaN values, but there exist more NaN values with different binary representations.

```scala
scala> java.nio.ByteBuffer.allocate(4).putFloat(Float.NaN).array
res1: Array[Byte] = Array(127, -64, 0, 0)

scala> val x = java.lang.Float.intBitsToFloat(-6966608)
x: Float = NaN

scala> java.nio.ByteBuffer.allocate(4).putFloat(x).array
res2: Array[Byte] = Array(-1, -107, -78, -80)
```

Since users can have these values, `RandomDataGenerator` generates these NaN values. However, this causes `checkEvaluationWithUnsafeProjection` failures due to the difference in the `UnsafeRow` binary representation. The following is the UT failure instance. This PR aims to fix this UT flakiness. - https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/102528/testReport/ ## How was this patch tested? Pass the Jenkins with the newly added test cases. Closes apache#23851 from dongjoon-hyun/SPARK-26950. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit ffef3d4) Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit ef67be3) Signed-off-by: Dongjoon Hyun <[email protected]>
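As an illustration of the idea in the commit title, the sketch below maps every NaN bit pattern to the predefined constant so that the raw bits compare equal afterwards. `NaNNormalizationSketch` and its methods are hypothetical names chosen for this example; the sketch does not claim to reproduce how the patch itself changes `RandomDataGenerator`.

```scala
object NaNNormalizationSketch {
  // Replace any NaN, whatever its payload bits, with the predefined constant.
  def normalizeFloat(f: Float): Float = if (f.isNaN) Float.NaN else f

  def normalizeDouble(d: Double): Double = if (d.isNaN) Double.NaN else d

  // Raw bits keep the NaN payload, unlike floatToIntBits, which collapses
  // every NaN to a single canonical pattern.
  def rawBits(f: Float): Int = java.lang.Float.floatToRawIntBits(f)
}
```

After normalization, two NaN values that started with different payloads have identical raw bits, so a byte-level comparison no longer distinguishes them.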

## What changes were proposed in this pull request? Below build failed with Java checkstyle test, but instead of violation it shows FileNotFound on dtd file. https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/102751/ Looks like the link of dtd file is dead `http://www.puppycrawl.com/dtds/configuration_1_3.dtd`. This patch updates the dtd link to "https://checkstyle.org/dtds/" given checkstyle repository also updated the URL path. checkstyle/checkstyle#5601 ## How was this patch tested? Checked the new links. Closes apache#23887 from HeartSaVioR/java-checkstyle-dtd-change-url. Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Signed-off-by: Marcelo Vanzin <[email protected]> (cherry picked from commit c5de804) Signed-off-by: Marcelo Vanzin <[email protected]>

…d' dependency, not package it ## What changes were proposed in this pull request? Spark apps do not need to package Spark. In fact it can cause problems in some cases. Our examples should show depending on Spark as a 'provided' dependency. Packaging Spark makes the app much bigger by tens of megabytes. It can also bring in conflicting dependencies that wouldn't otherwise be a problem. https://issues.apache.org/jira/browse/SPARK-26146 was what reminded me of this. ## How was this patch tested? Doc build Closes apache#23938 from srowen/Provided. Authored-by: Sean Owen <[email protected]> Signed-off-by: Sean Owen <[email protected]> (cherry picked from commit 3909223) Signed-off-by: Sean Owen <[email protected]>

## What changes were proposed in this pull request? Before dropping a database, refresh the tables of that database so that all cached entries associated with those tables are refreshed. We do the same when dropping a table. A UT is added. Closes apache#23905 from Udbhav30/SPARK-24669. Authored-by: Udbhav30 <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]> (cherry picked from commit 9bddf71) Signed-off-by: Dongjoon Hyun <[email protected]>

…a stage ## What changes were proposed in this pull request? This is another attempt to fix the more-than-one-active-task-set-managers bug. apache#17208 is the first attempt. It marks the TSM as zombie before sending a task completion event to DAGScheduler. This is necessary because when the DAGScheduler gets the task completion event, and it's for the last partition, the stage is finished. However, if it's a shuffle stage and it has missing map outputs, DAGScheduler will resubmit it (see the [code](https://github.com/apache/spark/blob/v2.4.0/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L1416-L1422)) and create a new TSM for this stage. This leads to more than one active TSM for a stage, which causes a failure. This fix has a hole: Let's say a stage has 10 partitions and 2 task set managers: TSM1 (zombie) and TSM2 (active). TSM1 has a running task for partition 10 and it completes. TSM2 finishes tasks for partitions 1-9, and thinks it is still active because it hasn't finished partition 10 yet. However, DAGScheduler gets task completion events for all 10 partitions and thinks the stage is finished. Then the same problem occurs: DAGScheduler may resubmit the stage and cause the more-than-one-active-TSM error. apache#21131 fixed this hole by notifying all the task set managers when a task finishes. For the above case, TSM2 will know that partition 10 is already completed, so it can mark itself as zombie after partitions 1-9 are completed. However, apache#21131 still has a hole: TSM2 may be created after the task from TSM1 is completed. Then TSM2 can't get notified about the task completion, which leads to the more-than-one-active-TSM error. apache#22806 and apache#23871 were created to fix this hole. However, the fix is complicated and there are still ongoing discussions. This PR proposes a simple fix, which is easy to backport: mark all existing task set managers as zombie when trying to create a new task set manager. 
After this PR, apache#21131 is still necessary, to avoid launching unnecessary tasks and fix [SPARK-25250](https://issues.apache.org/jira/browse/SPARK-25250 ). apache#22806 and apache#23871 are its followups to fix the hole. ## How was this patch tested? existing tests. Closes apache#23927 from cloud-fan/scheduler. Authored-by: Wenchen Fan <[email protected]> Signed-off-by: Imran Rashid <[email protected]> (cherry picked from commit cb20fbc) Signed-off-by: Imran Rashid <[email protected]>
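The simple fix described in this commit message, marking every existing task set manager of a stage as zombie when a new one is created, can be modelled with a toy scheduler. Everything in this sketch (`SimpleTsm`, `SimpleScheduler`, `submit`, `activeManagers`) is a hypothetical stand-in for illustration and is not the `TaskSchedulerImpl` API.

```scala
import scala.collection.mutable

object ZombieOnResubmitSketch {
  // Hypothetical stand-in for a task set manager; only the zombie flag is modelled.
  final class SimpleTsm(val stageId: Int, val attempt: Int) {
    var isZombie: Boolean = false
  }

  final class SimpleScheduler {
    private val managersByStage =
      mutable.Map.empty[Int, mutable.Buffer[SimpleTsm]]

    // Creating a manager for a stage first marks all earlier managers of
    // that stage as zombie, so at most one manager per stage stays active.
    def submit(stageId: Int, attempt: Int): SimpleTsm = {
      val managers =
        managersByStage.getOrElseUpdate(stageId, mutable.ArrayBuffer.empty[SimpleTsm])
      managers.foreach { m => m.isZombie = true }
      val tsm = new SimpleTsm(stageId, attempt)
      managers += tsm
      tsm
    }

    def activeManagers(stageId: Int): List[SimpleTsm] =
      managersByStage.get(stageId).map(_.filterNot(_.isZombie).toList).getOrElse(Nil)
  }
}
```

In this model the invariant holds by construction: whatever the order of task completions, a resubmitted stage never ends up with two active managers.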

…y or not in updateAndGetCompilationStats ## What changes were proposed in this pull request? `CodeGenerator.updateAndGetCompilationStats` throws an unsupported exception for empty code size statistics. This pr added code to check if it is empty or not. ## How was this patch tested? Pass Jenkins. Closes apache#23947 from maropu/SPARK-21871-FOLLOWUP. Authored-by: Takeshi Yamamuro <[email protected]> Signed-off-by: Takeshi Yamamuro <[email protected]>


Test build #103141 has finished for PR 24006 at commit


What changes were proposed in this pull request?

This is an alternative solution to #22806.

#21131 first made it possible for a task that completed successfully in a zombie TaskSetManager to also mark the corresponding partition as succeeded in the active TaskSetManager. This relies on the assumption that an active TaskSetManager always exists for that stage when this happens. But that is not always true: the active TaskSetManager may not have been created yet when the earlier task succeeds, which is why #22806 hit the issue.

This PR extends the behavior of #21131 by adding stageIdToFinishedPartitions to TaskSchedulerImpl, which records the finished partition whenever a task (from a zombie or an active TaskSetManager) succeeds. Thus, an active TaskSetManager created later can also learn about the finished partitions by looking into stageIdToFinishedPartitions and won't launch any duplicate tasks.
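A rough sketch of the bookkeeping described above follows. Only the name `stageIdToFinishedPartitions` comes from the description; the wrapper class `FinishedPartitionRegistry`, its methods, and the choice of a `BitSet` per stage are assumptions made for illustration, not the code in this PR.

```scala
import scala.collection.mutable

object FinishedPartitionsSketch {
  // Hypothetical registry; in the PR the map lives in TaskSchedulerImpl.
  final class FinishedPartitionRegistry {
    // Assumed value type: one set of finished partition ids per stage.
    private val stageIdToFinishedPartitions =
      mutable.HashMap.empty[Int, mutable.BitSet]

    // Called whenever a task succeeds, regardless of which manager ran it.
    def markFinished(stageId: Int, partitionId: Int): Unit = {
      stageIdToFinishedPartitions.getOrElseUpdate(stageId, mutable.BitSet.empty) += partitionId
    }

    // A manager created later consults the registry and skips finished partitions.
    def pendingPartitions(stageId: Int, allPartitions: Seq[Int]): Seq[Int] = {
      val finished = stageIdToFinishedPartitions.getOrElse(stageId, mutable.BitSet.empty)
      allPartitions.filterNot(finished.contains)
    }

    // Drop the stage's entry once it is no longer needed.
    def clear(stageId: Int): Unit = {
      stageIdToFinishedPartitions -= stageId
    }
  }
}
```

Because completions are recorded per stage rather than delivered to whichever managers exist at that moment, a manager created after the completion still sees it.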

How was this patch tested?

Add.