Merge branch 'master' of github.com:brain-life/warehouse


Showing 42 changed files with 878 additions and 575 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,4 +1,19 @@ | ||

| # Brainlife Warehouse | ||

|

|

||

| Please see http://www.brainlife.io/warehouse/ | ||

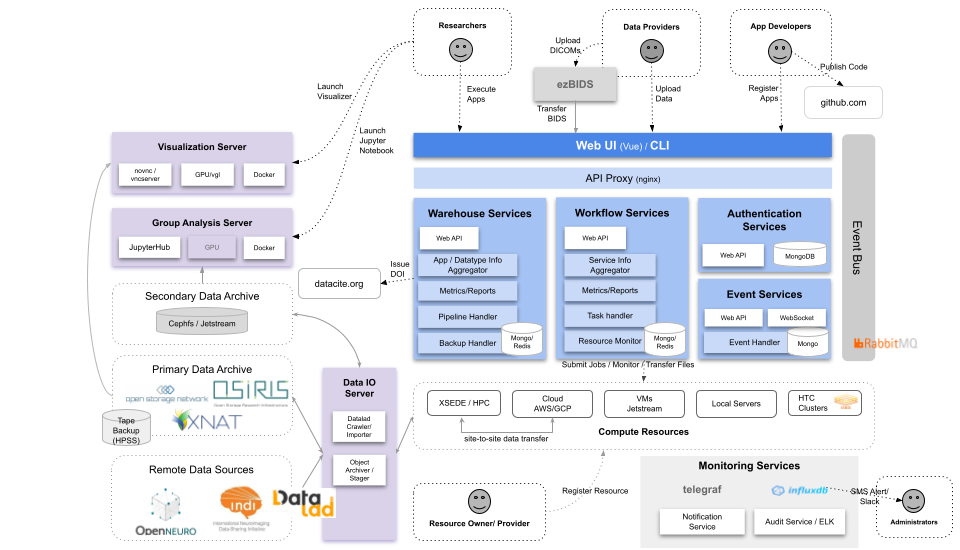

| Brainlife warehouse provides most of web UI hosted under https://brainlife.io and the API services that are unique to Brainlife. | ||

|

|

||

| touched | ||

|  | ||

|

|

||

| For more information, please read [Brainlife Doc](https://brain-life.github.io/docs/) | ||

|

|

||

| For Warehouse API doc, please read [Warehouse API Doc](https://brain-life.github.io/warehouse/apidoc/) | ||

|

|

||

| ### Authors | ||

| - Soichi Hayashi ([email protected]) | ||

|

|

||

| ### Project directors | ||

| - Franco Pestilli ([email protected]) | ||

|

|

||

| ### Funding | ||

| [](https://nsf.gov/awardsearch/showAward?AWD_ID=1734853) | ||

| [](https://nsf.gov/awardsearch/showAward?AWD_ID=1636893) |
