-

Notifications

You must be signed in to change notification settings - Fork 13

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Introduce concurrent circular buffer #29

base: master

Are you sure you want to change the base?

Conversation

|

Also, I think that there should be a leaky mode the queue can be constructed with which removes crossbeam protection from the buffer at the cost of leaking it. I suspect there are many applications which would make this tradeoff, that might only have a few queues of this style which don't grow too large over the course of things. |

|

Great work, happy to see the area of concurrent queues being explored further! I don't have any objections to adding this to Crossbeam, but there are several questions we should try to resolve first. Differences from

|

| bounded | unbounded | |

|---|---|---|

| SPSC | simple circular buffer | CircBuf |

| SPMC | ???(1) | CircBuf |

| MPSC | ???(2) | MPSC queue by DV |

| MPMC | MPMC queue by DV | MsQueue or SegQueue |

We already have some implementations ready to fill this matrix (e.g. MsQueue and SegQueue). The bounded MPMC variant can be easily filled by ripping out the array-based queue from crossbeam-channel. We can have unbounded MPSC queues by stripping down std::sync::mpsc. And so on.

I like CircBuf because it's pretty versatile and fills two slots in the matrix, maybe even three. It might also be the right choice for the bounded SPMC (1) variant. And the bounded MPSC (2) variant should probably be just a slightly tweaked version of the bounded MPMC variant.

Maybe we should create crossbeam-queue with modules named spsc, spmc, mpsc, and mpmc, where each module contains a bounded and unbounded variant? What do you think? The purpose of this crate would be to provide mostly low-level queues to be used as building blocks in schedulers, real-time audio, actor frameworks, and so on. The module structure would make it easy to choose the right variant for your use case. Just food for thought...

|

@schets @stjepang Thanks for your valuable inputs! Because of the bad SPMC performance results, I'd like to close this PR. I "guess" LCRQ or Yang-Mellor-Crummey queue are faster than circbuf for SPMC and even SPSC scenarios, I guess. I'll explore that direction.

|

|

status report: I've changed my mind, and I'm retrying to merge this RFC. As discussed above, hopefully it can fill several entries in the concurrent queue implementation matrix. I revised the But the performance is still bad. I optimized the circular buffer as @schets said, but unfortunately, I couldn't get any speedup. It's still ~40% slower than the segmented queue for SPMC scenarios. I'll put some more energy to find out why it's slow. |

|

@jeehoonkang it looks like tx and rx share a cache line, which they shouldn't have to. Also, the writer should cache the last-seen reader index so it doesn't have to hit the other cache line all the time |

|

@schets Thanks for your comments! After applying the optimizations you mentioned, I finally managed to get a good performance result :) @stjepang I'm thinking of providing A remaining question is whether (1) we publish |

I'd prefer going with

While I like categorizing queues in terms of spsc/spmc/mpsc/mpmc and bounded/unbounded, there are also other possible strategies. For example, Java has

Do you mean using |

|

@stjepang yeah, probably we're discussing what should be the API of We already had a discussion on how to organize crates in the context of Then how about I can think of two remaining questions. The first question is, what'll the queue/channel trait looks like? Should we have 8 traits for bounded/unbounded, multi/single-producer, multi/single-consumer queues? (Or more for blocking/nonblocking variants?) Can we refactor them in a nice way? I wonder if we can get inspiration from C++'s policy-based design. The second question is, how to effectively manage queue implementations in terms of development cost and user friendliness, if there are too many? Though this problem can be mitigated by merging all crates into a monorepo and writing a clear guideline on choosing queue implementations. |

|

Ok, looks like we have a few unresolved questions and potential solutions, but are feeling hesitant to commit to any of them. How about we put this queue into This is why I've decided to add |

|

@jeehoonkang I have a new, more pragmatic suggestion on how to move forward here. :) Besides schedulers, it is not yet clear what is the ideal use case for Tokio is currently building a fast and fair scheduler. Currently, it uses Circular buffers would generally be faster in fair schedulers, but I don't think we need a brand new name for the data structure nor a dedicated crate for it. Instead, we can simply add an option to The idea is to have two internal flavors of More concretely, this is what I have in mind: enum Flavor<T> {

// Chase-Lev deque (the current `crossbeam-deque`).

Lifo(LifoQueue<T>),

// Circular buffer (`CircBuf`).

Fifo(FifoQueue<T>),

}

pub struct Deque<T>(Arc<Flavor<T>>);

pub struct Stealer<T>(Arc<Flavor<T>>);

impl<T> Deque<T> {

// This is just a suggestion; we may name these functions differently.

pub fn new_lifo() -> Deque<T>;

pub fn new_fifo() -> Deque<T>;

}Choosing between LIFO and FIFO here only affects the behavior of the A big advantage of having the option of both LIFO and FIFO ordering within the same interface is simplicity. For example, it means Rayon can set up its job queue like this: let d = if breadth_first {

Deque::new_fifo()

} else {

Deque::new_lifo()

};

let s = d.stealer();This approach has worked out very well in Java's If you like this idea, I can merge |

Rendered

Implementation

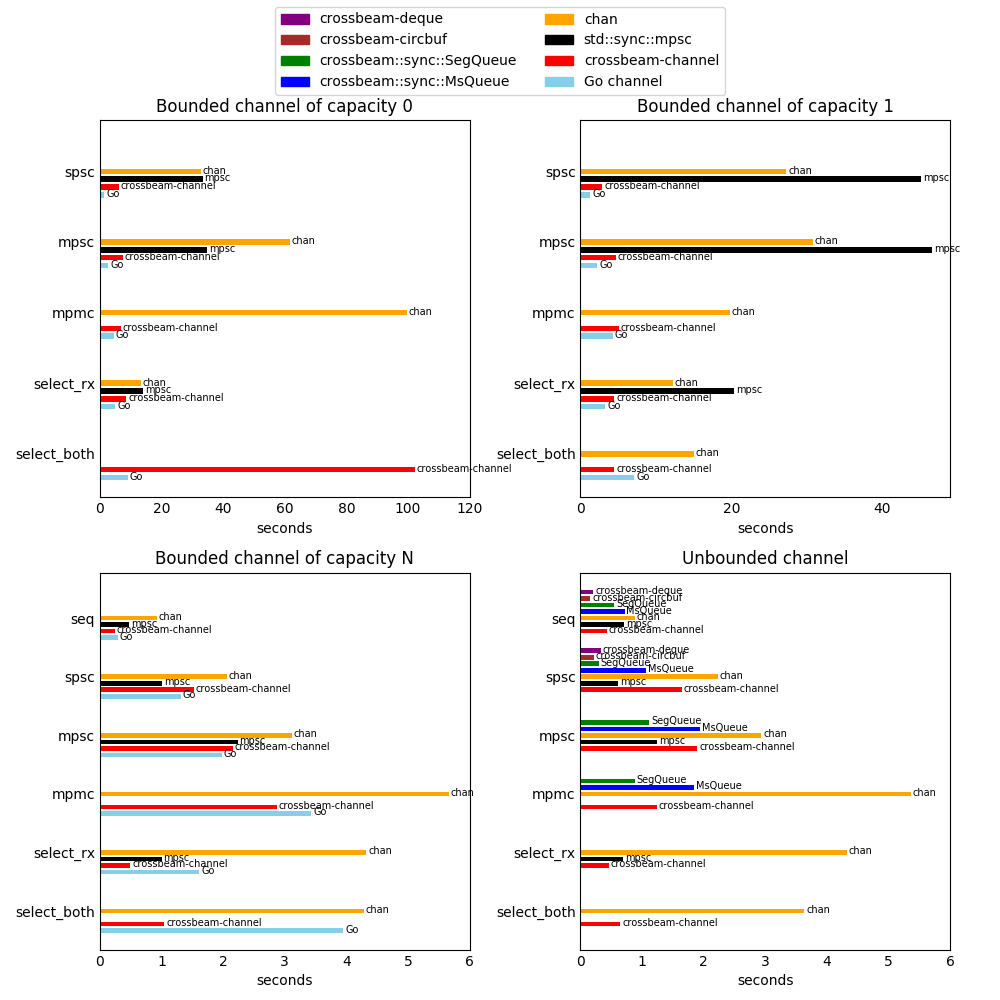

A benchmark result: