✨ Click here to see the dashboard! ✨

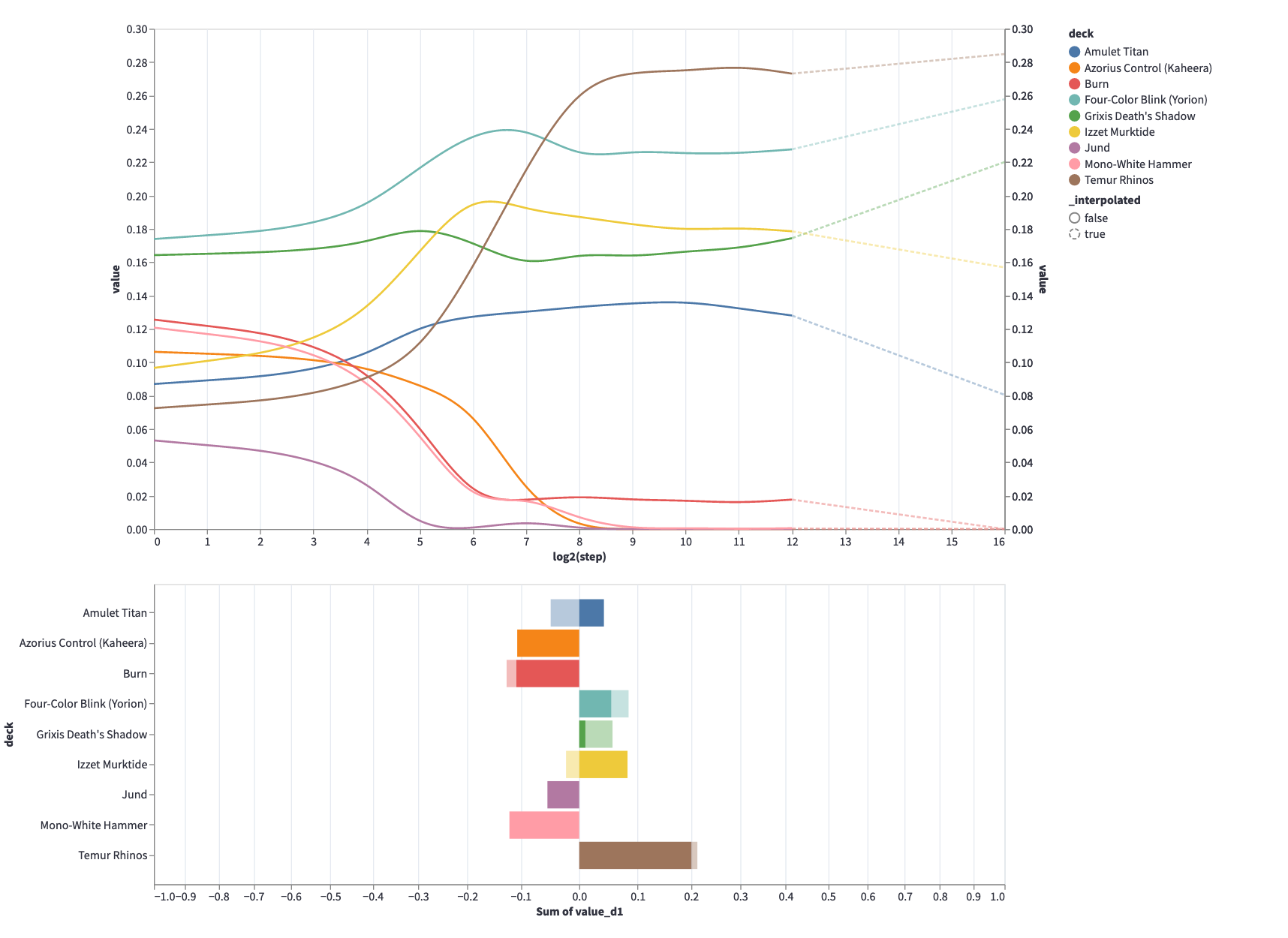

Demo of a metagame solver for Magic: The Gathering.

Run locally with:

pip install -r requirements.txt

streamlit run app/main.py

When training younger data scientists, one of the things I try to instill in them is that importing from scikit-learn and using a pre-packaged algorithm isn't going to get you very far in most quantitative problems. A lot of the best work you're going to do involves actual math (both pen and paper and WolframAlpha) and thinking through problems creatively, precisely, and with some subject matter expertise.

Much of the quantitative work I've done along those lines is under NDA, so I have a hard time showing people what I mean. In this project I've tried to take on a problem in a somewhat novel way to illustrate it.

JAX is often used for machine learning research, but you don't need to be building neural networks to use it. Gradients are useful for solving many real-world optimization problems, and this project is one such example.
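
To give a feel for gradient-based optimization without a neural network, here is a minimal sketch. It is not the solver used in this project. It assumes a toy three-deck metagame with a rock-paper-scissors payoff matrix (`PAYOFF`, made up for illustration), and it searches for the deck mix that is hardest to exploit. The names `soft_exploitability`, `solve`, and the smoothing constant `BETA` are hypothetical choices for this example.

```python
import jax
import jax.numpy as jnp
from jax.scipy.special import logsumexp

# Hypothetical payoff matrix: PAYOFF[i, j] is the expected payoff of deck i
# when it plays against deck j (rock-paper-scissors structure, zero-sum).
PAYOFF = jnp.array([
    [0.0, -1.0, 1.0],
    [1.0, 0.0, -1.0],
    [-1.0, 1.0, 0.0],
])

BETA = 5.0  # assumed smoothing strength; larger values track the hard max more closely


def soft_exploitability(logits):
    """Smooth stand-in for the best payoff an opponent can get against our mix."""
    mix = jax.nn.softmax(logits)   # keeps the deck shares positive and summing to 1
    responses = PAYOFF @ mix       # payoff of each pure deck against our mix
    return logsumexp(BETA * responses) / BETA  # differentiable approximation of max


# jax.grad gives the exact gradient of the loss with respect to the logits.
grad_fn = jax.jit(jax.grad(soft_exploitability))


def solve(logits, learning_rate=0.3, steps=2000):
    """Plain gradient descent on the logits; returns the resulting deck mix."""
    for _ in range(steps):
        logits = logits - learning_rate * grad_fn(logits)
    return jax.nn.softmax(logits)


# Start from a deliberately lopsided mix.
mix = solve(jnp.array([1.0, 0.0, -1.0]))
print(mix)
```

For this symmetric toy game the mix should drift toward an even split across the three decks, since any lopsided mix can be punished by the deck that beats its most popular choice. The softmax parametrization is a design choice that lets unconstrained gradient descent stay on the probability simplex.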