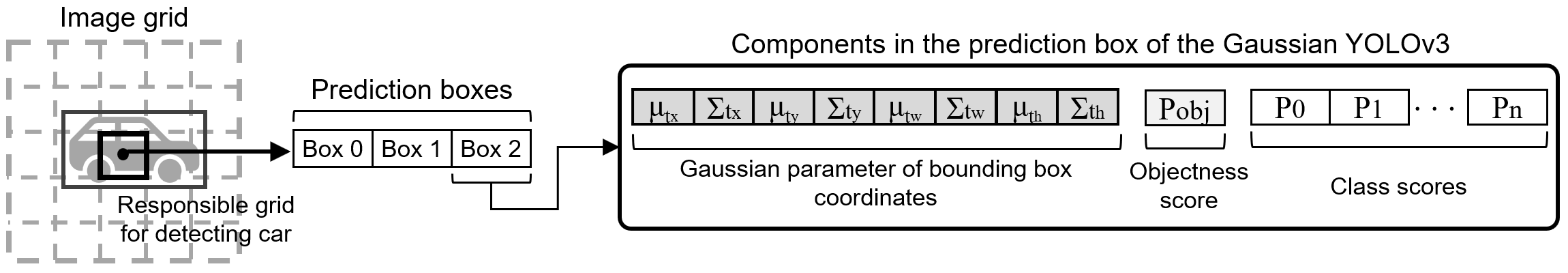

Gaussian YOLOv3: An Accurate and Fast Object Detector Using Localization Uncertainty for Autonomous Driving

Gaussian YOLOv3 implementation

This repository contains the code for our ICCV 2019 paper.

The proposed algorithm is implemented based on the YOLOv3 official code.

The provided example weight file ("Gaussian_yolov3_BDD.weights") is not the weight file used in the paper; it was newly trained to validate the released code. Because this weight file is more accurate than the one used in the paper, we provide it in the repository.

@InProceedings{Choi_2019_ICCV,

author = {Choi, Jiwoong and Chun, Dayoung and Kim, Hyun and Lee, Hyuk-Jae},

title = {Gaussian YOLOv3: An Accurate and Fast Object Detector Using Localization Uncertainty for Autonomous Driving},

booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

month = {October},

year = {2019}

}

The code was tested on

Ubuntu 16.04, NVIDIA GTX 1080 Ti with CUDA 8.0 and cuDNNv7, OpenCV 3.4.0

Ubuntu 16.04, NVIDIA Titan Xp with CUDA 9.0 and cuDNNv7, OpenCV 3.3.0

Please see the setup instructions on the YOLOv3 website.

We tested our algorithm on the Berkeley DeepDrive (BDD) dataset.

If you want to use the BDD dataset, please see the BDD website and download the dataset.

For training, you must create an image list file (e.g., "train_bdd_list.txt") and the ground-truth data. Please see these websites: YOLOv3, How to train YOLO

The list files ("train_bdd_list.txt", "val_bdd_list.txt", "test_bdd_list.txt") in the repository are examples. You must modify the directory part of each file name in the lists to match the path where the dataset is located on your computer.
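As a rough sketch of the path change described above, the following Python helper swaps the leading directory of every entry in a list file. The function name `rewrite_list_paths` and the directory arguments are hypothetical and not part of this repository; the sketch assumes one image path per line.

```python
from pathlib import Path


def rewrite_list_paths(list_file, old_dir, new_dir):
    """Replace a leading old_dir with new_dir on every line of list_file.

    Hypothetical helper (not part of the repository). Assumes the list
    file holds one image path per line. Returns the number of lines changed.
    """
    path = Path(list_file)
    updated = []
    changed = 0
    for line in path.read_text().splitlines():
        if line.startswith(old_dir):
            # Keep the file name and any sub-directories after the prefix.
            line = new_dir + line[len(old_dir):]
            changed += 1
        updated.append(line)
    # Write the file back with one path per line.
    path.write_text("".join(entry + "\n" for entry in updated))
    return changed
```

For example, `rewrite_list_paths("train_bdd_list.txt", "/old/dataset/root/", "/your/dataset/root/")` would update the training list in place; both directories are placeholders, and the list file path should point to wherever the file sits in your checkout.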

Download the pre-trained weights: darknet53.conv.74

Download the code

git clone https://github.com/jwchoi384/Gaussian_YOLOv3

cd Gaussian_YOLOv3

Compile the code

make

Set batch=64 and subdivisions=16 in the cfg file.

We used 4 GPUs in our experiment. If your computer runs out of GPU memory during training, please increase the subdivisions value in the cfg file.
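The batch and subdivisions settings can also be changed with a small script instead of by hand. The sketch below assumes the cfg file contains uncommented `batch=` and `subdivisions=` lines in `key=value` form; the function name `set_batch_options` is hypothetical and not part of this repository.

```python
import re


def set_batch_options(cfg_text, batch, subdivisions):
    """Return cfg_text with the active batch/subdivisions values replaced.

    Hypothetical helper (not part of the repository). Only uncommented
    `key=value` lines are touched; commented lines and other keys such
    as batch_normalize are left as they are.
    """
    for key, value in (("batch", batch), ("subdivisions", subdivisions)):
        # Match the key at the start of a line, followed by '=' and digits.
        pattern = re.compile(rf"^([ \t]*{key}[ \t]*=[ \t]*)\d+", flags=re.MULTILINE)
        cfg_text = pattern.sub(rf"\g<1>{value}", cfg_text)
    return cfg_text
```

A possible use is to read `cfg/Gaussian_yolov3_BDD.cfg`, call `set_batch_options(text, 64, 16)` for training, and write the result back. In Darknet each subdivision processes `batch / subdivisions` images at a time (4 with the values above), which is why a larger subdivisions value lowers GPU memory use.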

Start training by using the command line

./darknet detector train cfg/BDD.data cfg/Gaussian_yolov3_BDD.cfg darknet53.conv.74

If you want to use multiple GPUs,

./darknet detector train cfg/BDD.data cfg/Gaussian_yolov3_BDD.cfg darknet53.conv.74 -gpus 0,1,2,3

Download the Gaussian_YOLOv3 example weight file. Gaussian_yolov3_BDD.weights

Set batch=1 and subdivisions=1 in the cfg file.

Run the following commands.

make

./darknet detector test cfg/BDD.data cfg/Gaussian_yolov3_BDD.cfg Gaussian_yolov3_BDD.weights data/example.jpg

You can see the result:

Download the Gaussian_YOLOv3 example weight file. Gaussian_yolov3_BDD.weights

For evaluation, you MUST change the batch and subdivisions values in the cfg file to 1 (batch=1, subdivisions=1).

Run the following commands. You can get a detection speed of more than 42 FPS.

- make

- ./darknet detector valid cfg/BDD.data cfg/Gaussian_yolov3_BDD.cfg Gaussian_yolov3_BDD.weights

- cd bdd_evaluation/ (We got this code from https://github.com/ucbdrive/bdd-data)

- python evaluate.py det gt_bdd_val.json ../results/bdd_results.json

You will get:

AP : 9.45 (bike)

AP : 40.28 (bus)

AP : 40.56 (car)

AP : 8.66 (motor)

AP : 16.85 (person)

AP : 10.59 (rider)

AP : 7.91 (traffic light)

AP : 23.15 (traffic sign)

AP : 0.00 (train)

AP : 40.28 (truck)

[9.448295420802772, 40.28022967768842, 40.562338308273596, 8.658317480713093, 16.85103955706777, 10.588396343004272, 7.914563796458698, 23.147189144825003, 0.0, 40.27786994583501]

9.45 40.28 40.56 8.66 16.85 10.59 7.91 23.15 0.00 40.28

mAP 19.77 (512 x 512 input resolution)
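The reported mAP appears to be the unweighted mean of the ten per-class AP values. The short Python check below reproduces it from the numbers printed above; the function name `mean_average_precision` is only illustrative and is not taken from the evaluation script.

```python
# Per-class AP values copied from the evaluation output above.
ap_per_class = {
    "bike": 9.448295420802772,
    "bus": 40.28022967768842,
    "car": 40.562338308273596,
    "motor": 8.658317480713093,
    "person": 16.85103955706777,
    "rider": 10.588396343004272,
    "traffic light": 7.914563796458698,
    "traffic sign": 23.147189144825003,
    "train": 0.0,
    "truck": 40.27786994583501,
}


def mean_average_precision(aps):
    """Unweighted mean of the per-class AP values (illustrative helper)."""
    values = list(aps.values())
    return sum(values) / len(values)


print(f"mAP {mean_average_precision(ap_per_class):.2f}")  # prints "mAP 19.77"
```

Note that the "train" class contributes an AP of 0.00 to the average, which pulls the overall mAP down.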

If you want to get the mAP for the BDD test set,

- make

- Change the list file in the cfg file ("val_bdd_list.txt" --> "test_bdd_list.txt" in "cfg/BDD.data")

- ./darknet detector valid cfg/BDD.data cfg/Gaussian_yolov3_BDD.cfg Gaussian_yolov3_BDD.weights

- Upload the result file ("results/bdd_results.json") to the BDD evaluation server

On the BDD test set, we got 19.2 mAP (512 x 512 input resolution).

- Keras implementation: xuannianz/keras-GaussianYOLOv3

- PyTorch implementation: motokimura/PyTorch_Gaussian_YOLOv3

For questions about our paper or code, please contact Jiwoong Choi.