Filter and format a newline-delimited JSON stream of Wikibase entities.

Typically useful to create a formatted subset of a Wikibase JSON dump.
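As a rough illustration of the input format: in a newline-delimited JSON (NDJSON) stream, every line is a self-contained JSON document, so entities can be handled one at a time without loading the whole dump. The sketch below is plain NodeJS and not this package's code; `parseNdjsonLine` and `toNdjsonLine` are hypothetical helpers, and skipping `[`, `]` and trailing commas assumes the one-entity-per-line array layout of Wikidata JSON dumps.

```javascript
// Hypothetical helper, not part of wikibase-dump-filter's API.
// Parses one line of a dump and returns the entity, or null for lines
// that carry no entity (blank lines, or the "[" and "]" array wrappers).
function parseNdjsonLine (line) {
  const trimmed = line.trim().replace(/,$/, '') // drop a trailing comma, if any
  if (trimmed === '' || trimmed === '[' || trimmed === ']') return null
  return JSON.parse(trimmed)
}

// Serializes an entity back to a single NDJSON line
function toNdjsonLine (entity) {
  return JSON.stringify(entity) + '\n'
}

// Hand-written sample lines, for illustration only
const sample = [
  '[',
  '{"id":"Q1","type":"item"},',
  '{"id":"Q2","type":"item"}',
  ']'
]

const entities = sample.map(parseNdjsonLine).filter(entity => entity !== null)
console.log(entities.map(entity => entity.id))
```

Because each line parses on its own, a filter can decide to keep or drop an entity as soon as its line has been read.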

Some context: This tool was formerly known as wikidata-filter. Wikidata is an instance of Wikibase. This tool was primarily designed with Wikidata in mind, but should be usable for any Wikibase instance.

This project received a Wikimedia Project Grant.

This tool requires NodeJS to be installed.

# Install globally

npm install -g wikibase-dump-filter

# Or install just to be used in the scripts of the current project

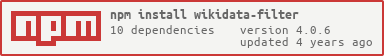

npm install wikibase-dump-filter

See CHANGELOG.md for version info.

Wikidata provides a bunch of database dumps, among which is the desired JSON dump. As a Wikidata dump is a very laaarge file (April 2020: 75GB compressed), it is recommended to download that file before doing operations on it, so that if anything crashes, you don't have to restart the download from zero (download time usually being the bottleneck).

wget --continue https://dumps.wikimedia.org/wikidatawiki/entities/latest-all.json.gz

cat latest-all.json.gz | gzip -d | wikibase-dump-filter --claim P31:Q5 > humans.ndjson

You can generate a JSON dump using the script dumpJson.php. If you are running Wikibase with wikibase-docker, you could use the following command:

cd wikibase-docker

docker-compose exec wikibase /bin/sh -c "php ./extensions/Wikibase/repo/maintenance/dumpJson.php --log /dev/null" > dump.json

cat dump.json | wikibase-dump-filter --claim P1:Q1 > entities_with_claim_P1_Q1.ndjson

This package can be used both as a command-line tool (CLI) and as a NodeJS module. These two uses each have their own documentation page, but the options are the same and are documented in the CLI section.
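To make a claim filter such as `--claim P31:Q5` more concrete, here is a minimal sketch of the underlying idea in plain NodeJS. It is not this package's implementation or module API: `hasClaim` is a hypothetical helper, it only looks at main snaks whose value is an entity id, and the sample entities are hand-written and heavily trimmed.

```javascript
// Hypothetical helper, not this package's implementation.
// Returns true if the entity has at least one claim for `property`
// whose main value is the item `value` (e.g. P31 -> Q5).
function hasClaim (entity, property, value) {
  const propertyClaims = (entity.claims && entity.claims[property]) || []
  return propertyClaims.some(claim => {
    const datavalue = claim.mainsnak && claim.mainsnak.datavalue
    // "novalue" and "somevalue" snaks carry no datavalue
    return datavalue != null && datavalue.value != null && datavalue.value.id === value
  })
}

// Hand-written, trimmed-down sample lines (real entities carry many more fields)
const lines = [
  '{"id":"Q42","claims":{"P31":[{"mainsnak":{"snaktype":"value","property":"P31","datavalue":{"value":{"entity-type":"item","id":"Q5"},"type":"wikibase-entityid"}}}]}}',
  '{"id":"Q64","claims":{"P31":[{"mainsnak":{"snaktype":"value","property":"P31","datavalue":{"value":{"entity-type":"item","id":"Q515"},"type":"wikibase-entityid"}}}]}}'
]

const humans = lines
  .map(line => JSON.parse(line))
  .filter(entity => hasClaim(entity, 'P31', 'Q5'))

console.log(humans.map(entity => entity.id))
```

Only the first sample entity has a P31 claim pointing to Q5, so it is the only one kept.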

- wikibase-dump-formatter: Extends Wikibase RDF dump prefixed URIs with a custom domain.

- wikibase-cli: The command-line interface to Wikibase

- wikibase-sdk: A JavaScript tool suite to query and work with Wikibase data

- wikibase-edit: Edit Wikibase from NodeJS, used in wikidata-cli for all write operations

- wikidata-subset-search-engine: Tools to set up an ElasticSearch instance fed with subsets of Wikidata

- import-wikidata-dump-to-couchdb: Import a subset or a full Wikidata dump into a CouchDB database

- wikidata-taxonomy: A command-line tool to extract taxonomies from Wikidata

- Other Wikidata external tools

Do you know Inventaire? It's a web app to share books with your friends, built on top of Wikidata! And it's libre software too.