
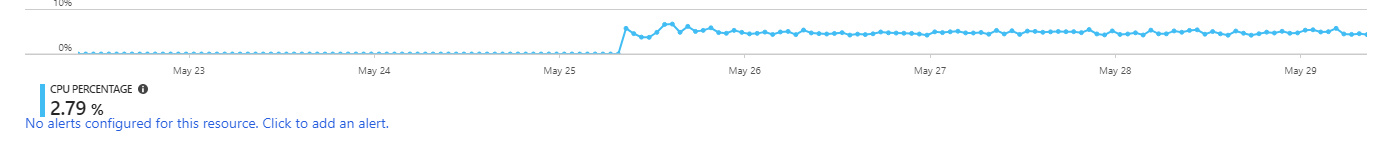

CPU usage slowly increases over time #690

Comments

Thanks for reporting. We'll try to set up a repro and investigate further.


2.3.0-beta2, running on an ASP.NET Core 2.1-rc1 app.

> On Wed, May 23, 2018, 3:08 PM Cijo Thomas wrote:
>
> What was the version of the Application Insights SDK you used?


I'll set up something for Linux now.

We have the same issue on Ubuntu 16.04.

Before upgrade: [screenshot] After: [screenshot]

Thanks for reporting this. We will set up a Linux test for a deeper investigation soon.

I have an ASP.NET Core 2.1 app with AI SDK 2.3 running on Ubuntu 16.04. A script pings the app once every 10 seconds.

After 6 hours, there is no visible change in CPU load; CPU usage is constant at around 0.39%.

The same thing is happening on Windows as well (Azure App Service).

Reproduced on a Linux VM. Need to investigate further.

@SteveDesmond-ca @mehmetilker @thohng I've seen a similar thing since upgrading to .NET Core 2.1: not only does our CPU usage increase over time, but our open handle count has also increased dramatically since the upgrade. Could you check your handle count and confirm whether you have the same issue?


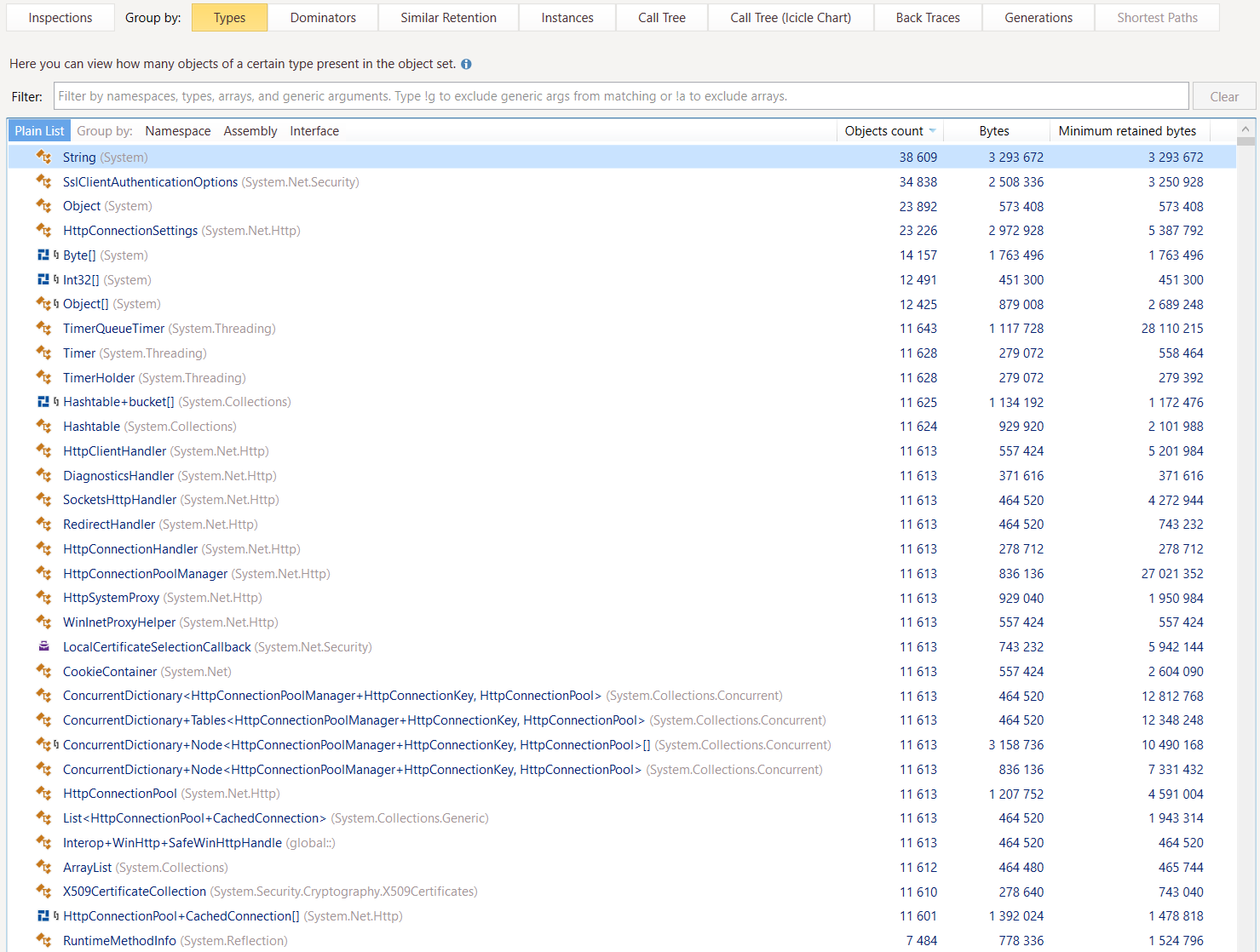

I've recently been having issues with increased memory usage. I checked it with dotMemory and saw many System.Net.Http-related objects, e.g. handlers that were never closed. I'm not yet sure whether Application Insights is the cause (I will do more tests next week), but if it is, maybe this clue helps find the issue.

I also see increasing CPU usage, which seems to come from HTTP. It was not noticeable until I went to production: everything looked great right after deployment, but a few hours later everything was redlined due to ever-increasing CPU demand, as shown below. Unfortunately, this is a showstopper and is forcing me to roll back. Runtime: .NET Core 2.1, Linux x64.

We've downgraded Application Insights back to v2.2.1 and no longer see the increasing CPU and memory usage, so I can confirm that this is an issue with Application Insights. @cijothomas Any chance this can be looked into? This basically makes version 2.3.0 unusable for production scenarios.

@flagbug Thanks for helping narrow this down to 2.3.0. We will prioritize this investigation.

Related, and maybe the cause as well: microsoft/ApplicationInsights-dotnet#594

Might want to see if

I've now also run our application without App Insights for a few days and don't see any stale HTTP-related objects in memory, so I'm also "blaming" Application Insights for now. Could you please prioritize this? It has been open for more than a month, and a profiler that leaks memory is a "really bad thing" ™️... /cc @SergeyKanzhelev @lmolkova (FYI, in case you didn't know about this yet)

@cwe1ss Were you facing issues on Linux, Windows, or both?

@cwe1ss What are the back traces/call trees like for some of these objects? The ones with an object count of 11,613 are interesting, especially since it looks like there are 11,613 sets of

@benaadams Can I get this from the process dump via dotMemory / WinDbg, or do I need to trace this with PerfView / dotTrace?

Oh... I've seen the tabs in dotMemory, but they are empty and just say "No allocation info was collected during profiling". So I guess I need PerfView / dotTrace, right?!

I clicked around a bit more in dotMemory (I don't know much about it, sorry) and saw that you can get more details about the object instances. Here are some screenshots for a random instance of

Hope this helps?!

I'm guessing the CPU increase is from the constantly increasing number of

Not sure why the timers are constantly increasing, though...

Could this be an issue with how HeartbeatProvider uses

@benaadams Thank you very much for looking into this!!!

I upgraded to the

The first screenshot shows 2 threads working only, and constantly, on the CPU leak. I found that the number of such threads depends on the uptime of the process, so the leak increases over time; it was not fixed in 2.4.1. The second screenshot shows the rate of increase, where 600% is the hardware limit; after some time, the ASP.NET Core app is working only on the leak. @cijothomas Please advise.

@Svisstack What version of .NET are you running on? Regarding the high CPU usage: on .NET Core 2.1, an exception will be thrown where an infinite loop would previously have occurred, due to https://github.com/dotnet/corefx/issues/28123. It could happen if

@benaadams I'm running this on .NET Framework 4.7.2 and compiling against 4.7.1. If this is true, it looks like a major bug in App Insights: why would someone put a SpinLock-like reader around a dictionary item that may not exist? I had the idea of a workaround that keeps App Insights but disables dependency tracking, since the issue is related to the database dependency; however, it looks like there is no option to disable dependency tracking.

The dictionary for the header collection is reused for each request on the same connection, so at the end of the request it is cleared. If that clearing happens while another thread is reading the header collection (e.g. it reads it outside of the normal request flow), the read can go into an infinite loop as the arrays inside the dictionary are changed (Dictionary is not thread-safe for a concurrent write alongside any other operation on it). In .NET Core 2.1 it will detect the infinite loop being formed and throw an exception, though that won't fix the concurrent use.

@benaadams Thanks for the detailed explanation. I now understand what's happening, but I don't see a clear solution/fix for this, since it looks like the bug is actually in ASP.NET Core, which allows concurrent access to a dictionary instance that is, of course, not thread-safe.

Well... even if concurrent access were prevented (at a performance cost), it still wouldn't help, as the data it read would still be wrong. If it's conflicting with

At a guess... looking at the stack from dotTrace, it could be a SQL query that's started as part of the request, but not

When it completes, it would then be trying to log the shallow-copied (i.e. a reference to the) captured request data as part of the Application Insights telemetry event, using mutable request information that has now changed and is no longer valid for the event. I'm not entirely sure how

```csharp
public void OnEndExecuteCallback(long id, bool success, bool synchronous, int sqlExceptionNumber)
{
    DependencyCollectorEventSource.Log.EndCallbackCalled(id.ToString(CultureInfo.InvariantCulture));

    // Look up the telemetry item that was recorded when the command started.
    var telemetryTuple = this.TelemetryTable.Get(id);
    if (telemetryTuple == null)
    {
        DependencyCollectorEventSource.Log.EndCallbackWithNoBegin(id.ToString(CultureInfo.InvariantCulture));
        return;
    }

    if (!telemetryTuple.Item2)
    {
        this.TelemetryTable.Remove(id);
        var telemetry = telemetryTuple.Item1 as DependencyTelemetry;
        telemetry.Success = success;
        telemetry.ResultCode = sqlExceptionNumber != 0 ? sqlExceptionNumber.ToString(CultureInfo.InvariantCulture) : string.Empty;
        DependencyCollectorEventSource.Log.AutoTrackingDependencyItem(telemetry.Name);

        // Completes and sends the dependency telemetry, possibly after the originating request has ended.
        ClientServerDependencyTracker.EndTracking(this.telemetryClient, telemetry);
    }
}
```

If it's a

Using a pattern similar to dotnet/corefx#26075

Or ASP.NET's NonCapturingTimer helper: dotnet/extensions#395
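Helpers like ASP.NET's NonCapturingTimer avoid capturing the caller's `ExecutionContext` when a timer is created. A `System.Threading.Timer` constructed while flow is not suppressed captures the current `ExecutionContext` (including `AsyncLocal` values) and holds on to it for the timer's lifetime. Below is a minimal C# sketch of that flow-suppression pattern; the class name `NonCapturingTimerSketch` is hypothetical, and this is an illustration rather than the code from dotnet/extensions#395.

```csharp
using System;
using System.Threading;

// Hypothetical helper illustrating the "non-capturing timer" pattern:
// suppress ExecutionContext flow while the Timer is constructed so the
// timer does not capture (and keep alive) the caller's AsyncLocal state.
public static class NonCapturingTimerSketch
{
    public static Timer Create(TimerCallback callback, object state, TimeSpan dueTime, TimeSpan period)
    {
        if (callback == null)
        {
            throw new ArgumentNullException(nameof(callback));
        }

        bool restoreFlow = false;
        try
        {
            // SuppressFlow throws InvalidOperationException if flow is already
            // suppressed, so only suppress (and later restore) when needed.
            if (!ExecutionContext.IsFlowSuppressed())
            {
                ExecutionContext.SuppressFlow();
                restoreFlow = true;
            }

            // The timer is created without a captured ExecutionContext.
            return new Timer(callback, state, dueTime, period);
        }
        finally
        {
            // Restore flow on the calling thread so the rest of the request is unaffected.
            if (restoreFlow)
            {
                ExecutionContext.RestoreFlow();
            }
        }
    }
}
```

With this shape, a timer started from inside a request would not keep that request's async-local state reachable, and the calling code continues with normal context flow once `Create` returns.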

@benaadams Thanks. I actually found the Tasks that can, and probably do, end after the request is finished, and they contain the SQL code. I will wait on them and report on the state of the issue after deploying the new version today. Based on your very useful input, I think it's still an issue in ASP.NET Core, as it should not provide functions that can return random data. If the Headers dictionary is shared between requests, then the function for accessing those headers should require some kind of request ID (a static long incremented on every new request), based on which an internal function could decide that these headers no longer exist and throw an exception instead of returning random data.

It's more that the Headers are for the connection, as there can only be one active request on a connection at any time. If they are accessed via

However, while that may help with the CPU issue, it would also mean the telemetry from

What should probably happen is Application Insights during

I'm not entirely sure how Application Insights is capturing/reading the headers, so I can't provide code suggestions for the changes, alas...

@benaadams Removing the Tasks with SQL code that could end after the request solved the issue. Many thanks, I really appreciate your input here. So is no action needed here, as there is a new interface that doesn't have this issue? I want to make sure we fix this for the community, or at least that the known Insights bugs mention that no dependency should end after the request has already finished.

Well, I do think AI should collect the information it needs at event start (i.e. the key/value pairs for headers) and then use that collected information at event end, rather than rereading it from the HttpHeader collection; that should solve this issue more holistically.
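The capture-at-start approach suggested above can be sketched as follows: copy the needed header key/value pairs into a private snapshot when the operation starts, and only read the snapshot when the operation ends. The `DependencyStartSnapshot` type and its `CaptureHeaders` method are hypothetical illustrations, not Application Insights APIs; a plain `Dictionary<string, string>` stands in for the per-connection header collection that the server reuses and clears between requests.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration: capture what the telemetry needs at start time,
// so completion never touches the server's mutable, per-connection headers.
public sealed class DependencyStartSnapshot
{
    public IReadOnlyList<KeyValuePair<string, string>> Headers { get; }

    private DependencyStartSnapshot(IReadOnlyList<KeyValuePair<string, string>> headers)
    {
        Headers = headers;
    }

    // Must be called on the request's own flow (before the request completes),
    // because the source collection is not safe to read concurrently with writes.
    public static DependencyStartSnapshot CaptureHeaders(
        IEnumerable<KeyValuePair<string, string>> liveHeaders,
        ISet<string> namesToKeep)
    {
        // ToArray materializes a private copy; KeyValuePair is a struct and the
        // strings are immutable, so a later Clear() on the source cannot affect it.
        var copy = liveHeaders
            .Where(h => namesToKeep.Contains(h.Key))
            .ToArray();
        return new DependencyStartSnapshot(copy);
    }
}
```

Since HTTP header names are case-insensitive, a real implementation would likely build `namesToKeep` with `StringComparer.OrdinalIgnoreCase`.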


@benaadams Can you then reopen this issue? |

Alas, no, I don't work for MS or have rights to this repo. Sorry.

@cijothomas Can you reopen this? We still have a bug in Insights related to this problem.

@Svisstack Can you open this issue in https://github.com/Microsoft/ApplicationInsights-dotnet-server/issues?

@cijothomas It's done.

@Svisstack Thanks. Will discuss on the other thread.

Hi @Svisstack, how did you solve it this way? Could you share any code details?

@turgay I was able to wait on all the tasks, or rewrite async code into sync code, before the request ended. If I can't do that in the future, I believe migrating to another library or waiting for the fix are the only options.
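A minimal sketch of the workaround described above, assuming the SQL work was previously started as fire-and-forget Tasks inside a request handler: start the tasks, then `await Task.WhenAll(...)` before the handler returns, so every dependency call (and its telemetry completion) finishes while the request is still active. `RunQueryAsync` and the handler methods are hypothetical stand-ins, and `Task.Delay` simulates the database round trip.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class RequestHandlerSketch
{
    // Hypothetical stand-in for a SQL call; Task.Delay simulates the round trip.
    public static async Task<int> RunQueryAsync(int id)
    {
        await Task.Delay(10);
        return id * 2;
    }

    // Problematic shape (for comparison): the Task is started but never awaited,
    // so it may complete after the response has been sent and the connection's
    // request state has been reset.
    public static void HandleRequestFireAndForget()
    {
        _ = RunQueryAsync(1);
    }

    // Workaround shape: await every dependency before the request completes.
    public static async Task<IReadOnlyList<int>> HandleRequestAsync()
    {
        var pending = new List<Task<int>> { RunQueryAsync(1), RunQueryAsync(2), RunQueryAsync(3) };

        // WhenAll completes only after all queries finish; results keep the input order.
        int[] results = await Task.WhenAll(pending);
        return results;
    }
}
```

The queries still run concurrently with each other; the only change is that the handler does not return until all of them have finished.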

> On 14 Dec 2561 BE, at 00:32, Turgay wrote:
>
> Hi @Svisstack, How did you solve that with this way? Could you share any code detail?

@Svisstack I guess you meant @turgayozgur, not me :)

I had previously used Application Insights but no longer do; however, the App Insights code remained in my app. When I recently migrated hosts and upgraded to .NET Core 2.1.0-rc1, I noticed that the CPU usage gradually increased over the course of a few days.

The drops back to 0 are Docker container restarts.

Removing Application Insights from the site results in a steady almost-zero CPU usage.

See also this Twitter thread, though there's not much more there.

Repro Steps

```csharp
// In Startup.ConfigureServices(IServiceCollection services)
services.AddApplicationInsightsTelemetry("{no-longer-valid instrumentation key}");
```

Actual Behavior

Expected Behavior

Version Info

SDK Version: 2.1.0-rc1

.NET Version: 2.1.0-rc1

How Application was onboarded with SDK (VisualStudio/StatusMonitor/Azure Extension):

OS: Ubuntu 16.04

Hosting Info (IIS/Azure WebApps/etc.): Azure VM
