

Provide cutoff to block proposer to avoid wasting work #1354

Comments


Are you running in release or debug mode? In Aura consensus we cannot author blocks that take longer than the block time to produce.


I run in release mode. The normal block time is 4 seconds. With 90 transactions, block production took 9 seconds.
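A rough back-of-the-envelope reading of these numbers, assuming block production time grows linearly with the number of transactions and that all 9 seconds are spent on them:

$$
t_{\text{tx}} \approx \frac{9\ \text{s}}{90} = 0.1\ \text{s},
\qquad
n_{\max} \approx \frac{4\ \text{s}}{0.1\ \text{s}} = 40
$$

Under that assumption, a 4-second slot fits only about 40 such transactions, which is why 90 (or 100) overruns the block time.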

(Replying by email to Robert Habermeier's question above, 7/01/2019, 10:58 PM.)


I guess you found your answer :)


I think this is a serious performance issue that could affect the liveness of the network. The block producer should keep track of how much time it has spent producing the block and propose the block before the timeout is reached, i.e. construct a block with 60 transactions and propose it, instead of constructing a block with 100 transactions and failing to propose it within the time limit.

The trouble is that currently the only limits on how many transactions can go into a block are the block size (and the total gas fee for smart contracts), neither of which accounts for the actual computation time of the transactions. This is fine if all transactions are fast (i.e. they can never push block construction time past the proposer's time limit), but I don't see how that could be enforced. It is therefore quite possible for a block producer to put too many slow transactions into a block (in this case balance transfers, which shouldn't be slow at all), fail to construct the block within the time limit, and cause a liveness problem if all validators do the same thing.

It is also an attack vector against any Substrate-based network: an attacker only needs to find the most cost-effective computationally expensive transaction (taking into account speed, transaction size and fee) and spam it. If such a transaction exists, the network is vulnerable. I see two areas that need to be improved:
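A minimal sketch of the cutoff described above, assuming a hypothetical `Tx` type and an `apply` closure standing in for executing a transaction; none of these names come from Substrate's actual proposer API. The proposer checks a deadline before starting each transaction and proposes whatever it has included once the deadline passes:

```rust
use std::time::Instant;

/// Hypothetical transaction type; only its identity matters for this sketch.
#[derive(Debug, Clone)]
pub struct Tx {
    pub id: u32,
}

/// Pushes transactions from `pool` into a block until either the pool is
/// exhausted or the proposal deadline has passed. `apply` stands in for
/// executing a transaction against the block's state.
pub fn build_block<F>(pool: &[Tx], deadline: Instant, mut apply: F) -> Vec<Tx>
where
    F: FnMut(&Tx),
{
    let mut included = Vec::new();
    for tx in pool {
        // Do not start another transaction once the deadline has passed;
        // propose what we already have instead.
        if Instant::now() >= deadline {
            break;
        }
        apply(tx);
        included.push(tx.clone());
    }
    included
}
```

Because the check only happens between transactions, a single slow transaction can still push the block past the deadline; that is the "cannot be cut off asynchronously" concern raised in the following comment.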


The simple way to do this would be to extend the …

The main problem I see here is that sealing and computing the state root take more time the more transactions (work) the block contains. Another is that there may be large or bulky transactions that cannot be cut off asynchronously. Releasing a block outside of its step is still likely a problem as long as the timestamp is set correctly, but probably not a huge one as long as it happens rarely. It will often mean that the block, although still authored, gets orphaned.
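One way a cutoff could account for sealing and state-root computation growing with block size is to reserve finalization time that scales with the number of included transactions. A sketch assuming a hypothetical linear cost model (`FinalizeModel`, whose `base` and `per_tx` costs are made up and would have to be measured in practice):

```rust
use std::time::Duration;

/// Hypothetical linear model of the work done after the last transaction is
/// applied (state-root computation and sealing): a fixed base cost plus a
/// per-transaction component.
pub struct FinalizeModel {
    pub base: Duration,
    pub per_tx: Duration,
}

impl FinalizeModel {
    /// Estimated finalization time for a block containing `n_txs` transactions.
    pub fn estimate(&self, n_txs: u32) -> Duration {
        self.base + self.per_tx * n_txs
    }
}

/// Returns true if one more transaction can be applied while still leaving
/// enough time to finalize the (now larger) block within the slot.
/// `included` is the number of transactions already in the block.
pub fn can_include_next(
    elapsed: Duration,
    slot: Duration,
    next_tx_estimate: Duration,
    included: u32,
    model: &FinalizeModel,
) -> bool {
    elapsed + next_tx_estimate + model.estimate(included + 1) <= slot
}
```

With a 4 s slot, 3 s already spent, a 100 ms transaction, and 500 ms + 10 ms per transaction of finalization, the 31st transaction still fits but the 41st does not.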


Actually, looking more closely at the logs provided, what's happening is that import is doing the work again, so we could roughly double throughput via #1232. The prior comment still stands.
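A hypothetical sketch of avoiding the duplicate work on import: the author remembers the state root it computed while building a block and reuses it when the same block comes back through import. This illustrates the idea only and is not the design of #1232; `Hash` and `AuthoredCache` are invented for the example:

```rust
use std::collections::HashMap;

/// Hypothetical 32-byte hash used for both block hashes and state roots.
pub type Hash = [u8; 32];

/// Remembers the state roots of blocks this node authored, so that importing
/// them afterwards does not re-execute their transactions.
#[derive(Default)]
pub struct AuthoredCache {
    roots: HashMap<Hash, Hash>,
}

impl AuthoredCache {
    /// Called by the author after building and sealing `block`.
    pub fn remember(&mut self, block: Hash, state_root: Hash) {
        self.roots.insert(block, state_root);
    }

    /// Returns the cached state root if this node authored the block (and
    /// drops the entry, since the block is now imported); otherwise runs
    /// `execute`, which stands in for full re-execution.
    pub fn import<F>(&mut self, block: Hash, execute: F) -> Hash
    where
        F: FnOnce() -> Hash,
    {
        if let Some(root) = self.roots.remove(&block) {
            return root;
        }
        execute()
    }
}
```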


In general, it should be up to the chain logic to decide when there are "too many transactions" in a block. Ethereum does this with a gas limit, Bitcoin with a block size limit. Substrate shouldn't really prejudge which strategy is used, only make it possible to state one. For now, it should probably just be an alternative error return variant in …
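A sketch of the "alternative error return variant" idea, with hypothetical names (`PushError::BlockFull`, `BlockBuilder`) and a gas-limit-style weight rule standing in for whatever the chain logic actually defines. The proposer keeps pushing until the builder reports that the block is full:

```rust
/// Hypothetical error returned when pushing a transaction into a block under
/// construction. `BlockFull` lets the chain logic, not the proposer, decide
/// when there are "too many transactions".
#[derive(Debug, PartialEq, Eq)]
pub enum PushError {
    /// The block has no room left for this transaction.
    BlockFull,
    /// The transaction can never fit in any block.
    Invalid,
}

/// Toy block builder whose fullness rule is a total weight limit.
pub struct BlockBuilder {
    limit: u64,
    used: u64,
    txs: Vec<u64>,
}

impl BlockBuilder {
    pub fn new(limit: u64) -> Self {
        Self { limit, used: 0, txs: Vec::new() }
    }

    pub fn push(&mut self, weight: u64) -> Result<(), PushError> {
        // A transaction heavier than the whole limit can never be included.
        if weight > self.limit {
            return Err(PushError::Invalid);
        }
        if self.used.saturating_add(weight) > self.limit {
            return Err(PushError::BlockFull);
        }
        self.used += weight;
        self.txs.push(weight);
        Ok(())
    }

    pub fn len(&self) -> usize {
        self.txs.len()
    }
}

/// Proposer side: push transactions until the chain logic reports the block
/// is full, skipping ones it rejects outright.
pub fn fill(builder: &mut BlockBuilder, pool: &[u64]) {
    for &weight in pool {
        match builder.push(weight) {
            Ok(()) => {}
            Err(PushError::BlockFull) => break,
            Err(PushError::Invalid) => continue,
        }
    }
}
```

Stopping at the first `BlockFull` is a simplification: a smaller transaction later in the pool might still fit.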

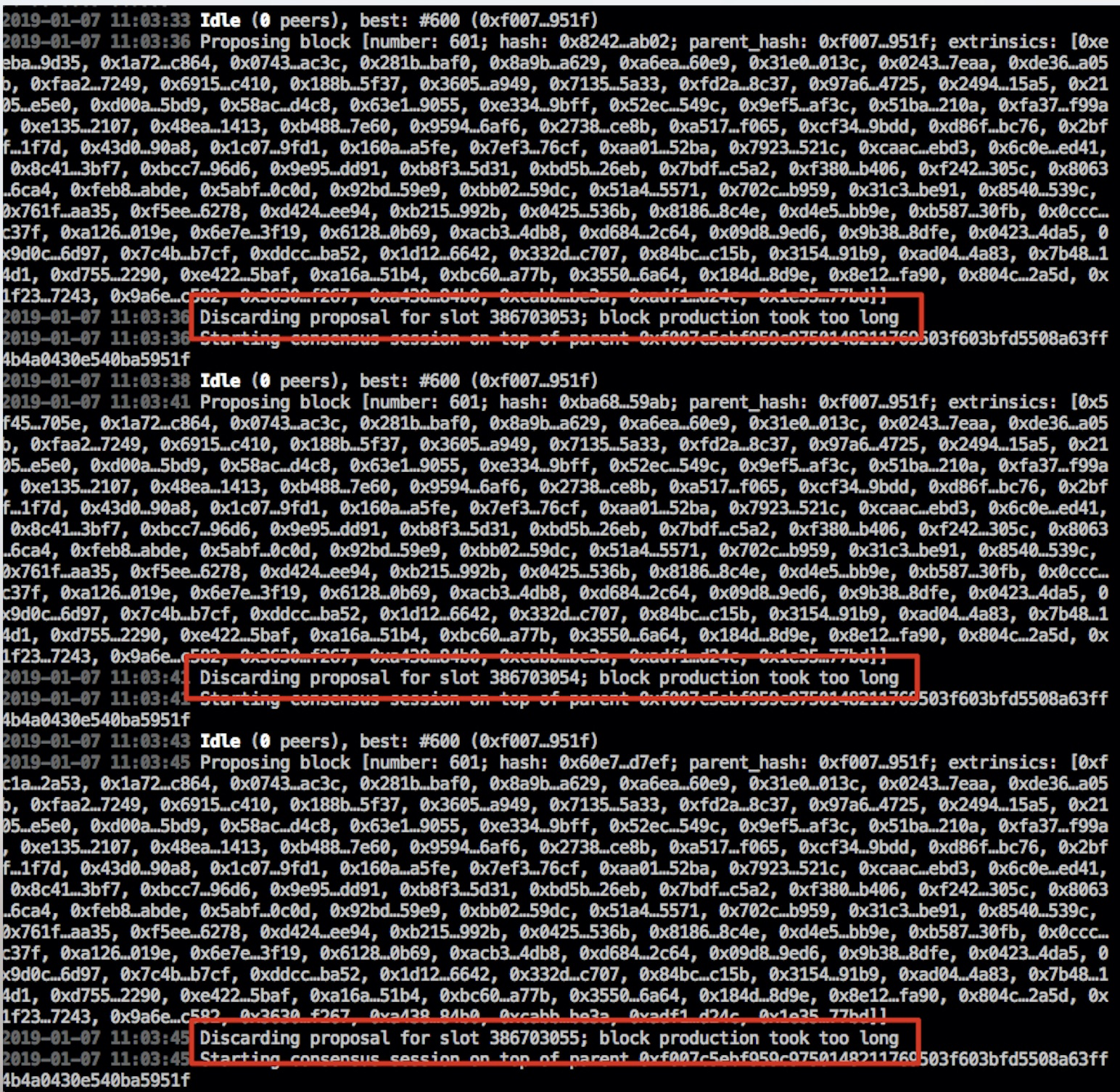

Hi Polkadot and Substrate team, we're trying to test how many transactions can be handled in one block, and encountered the 'block production took too long' issue when injecting 100 transactions:

I'm running a single-node network on my local machine (MacBook Pro 2017, macOS High Sierra).

We believe a block can handle more than 100 transactions.

Could you please advise us how to fix the issue? Thanks a lot.
