By Peiyun Hu, Jason Ziglar, David Held, and Deva Ramanan

You can find our paper on CVF Open Access. If you find our work useful, please consider citing:

@inproceedings{hu20wysiwyg,
  title={What You See Is What You Get: Exploiting Visibility for 3d Object Detection},
  author={Hu, Peiyun and Ziglar, Jason and Held, David and Ramanan, Deva},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={11001--11009},
  year={2020}
}

The code is built on SECOND, a well-known deep-network-based 3D object detector. Please refer to its README and NUSCENES-GUIDE for how to set up a working environment for the SECOND detector.

Perhaps most importantly, you have to install spconv.

Download the pre-trained weights of the proposed model (early fusion) from Google Drive.

The model configuration is located at second/configs/nuscenes/all.pp.mhead.vpn.config.

Run the following command to start training our model:

python script.py train_nuscenes --base_cfg="all.pp.mhead.vpn.config" --sample_factor=1 --epochs=20 --eval_epoch=2 --sweep_db=True --label=vp_pp_oa_ta_learn --resume=True

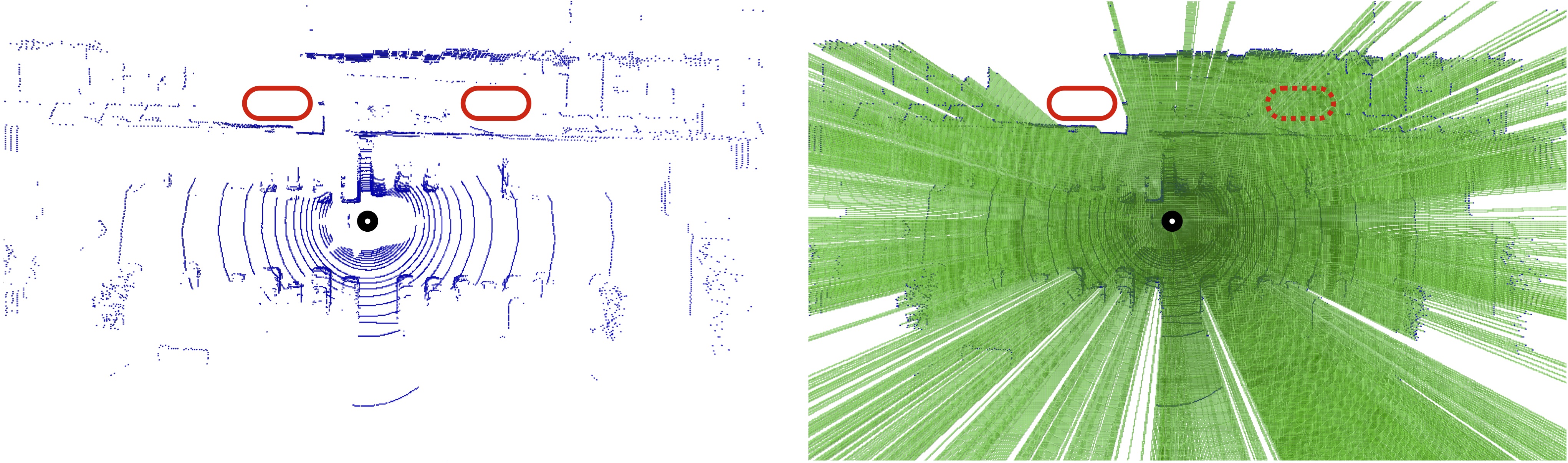

You can find the code for raycasting under second/utils/mapping. The default setting for raycasting is drilling.

We call raycasting functions in second/data/preprocess.py. If you are interested in integrating visibility into your own work, you can use it as an example of how to extract a visibility volume out of a LiDAR point cloud.
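To make the idea of a visibility volume concrete, here is a minimal NumPy sketch. It is not the implementation in second/utils/mapping: it approximates raycasting by sampling points along each ray at a fixed step rather than performing exact voxel traversal, and it does not model drilling. The function name `visibility_volume`, its parameters, and the label encoding (`UNKNOWN`, `FREE`, `OCCUPIED`) are all hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical label encoding for this sketch (not taken from the repository).
UNKNOWN, FREE, OCCUPIED = 0, 1, 2


def visibility_volume(points, origin, grid_min, voxel_size, grid_shape):
    """Approximate a visibility volume by sampling along each LiDAR ray.

    points:     (N, 3) array of LiDAR returns
    origin:     (3,) sensor position
    grid_min:   (3,) minimum corner of the voxel grid
    voxel_size: edge length of a cubic voxel
    grid_shape: (X, Y, Z) number of voxels per axis
    """
    points = np.asarray(points, dtype=np.float64)
    origin = np.asarray(origin, dtype=np.float64)
    grid_min = np.asarray(grid_min, dtype=np.float64)
    shape = np.asarray(grid_shape)
    vol = np.full(grid_shape, UNKNOWN, dtype=np.uint8)

    def to_index(xyz):
        # Convert metric coordinates to voxel indices, dropping out-of-grid ones.
        idx = np.floor((xyz - grid_min) / voxel_size).astype(np.int64)
        inside = np.all((idx >= 0) & (idx < shape), axis=-1)
        return idx[inside]

    step = 0.5 * voxel_size  # sample twice per voxel length along the ray
    for p in points:
        ray = p - origin
        length = np.linalg.norm(ray)
        if length == 0:
            continue
        # Sample from the sensor up to (but not including) the return.
        ts = np.arange(0.0, length, step) / length
        samples = origin + ts[:, None] * ray
        idx = to_index(samples)
        vol[idx[:, 0], idx[:, 1], idx[:, 2]] = FREE

    # Mark returns last so that OCCUPIED overrides FREE in shared voxels.
    idx = to_index(points)
    vol[idx[:, 0], idx[:, 1], idx[:, 2]] = OCCUPIED
    return vol
```

For example, with the sensor at (0.5, 0.5, 0.5) and a single return at (3.5, 0.5, 0.5) in a 5x1x1 grid of unit voxels, the voxels between the sensor and the return are marked free, the voxel containing the return is marked occupied, and the voxel behind it stays unknown. Fixed-step sampling can skip voxels that a ray only clips at a corner, which is one reason exact voxel traversal is generally preferable in practice.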

You will need to compile the code using CMake.