-

Notifications

You must be signed in to change notification settings - Fork 12.7k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Run proc macro invocations in separate threads. #56058

Comments

As a preliminary "fix" we could probably prohibit I'm also not quite sure what the reasoning behind this being undesirable is, and I think it would be good to include that in this issue so that the cost/benefit could be better analyzed. |

|

@Mark-Simulacrum Proc macros shouldn't have state between invocations, as we don't want to guarantee any particular execution order, and also incremental macro expansion wouldn't want to always re-run all the invocations. If necessary, we can make the incremental expansion integration opt-in, but I hope we don't have to (as most proc macros, especially derives, are stateless). |

|

Okay -- so mostly essentially non-technical reasons? That's what I expected. I think the main reason why I feel like thread isolation is not super helpful is that it seems like then people will just move to |

|

@Mark-Simulacrum I think we need to be more clear about not guaranteeing execution order in documentation and such; is this even documented anywhere right now? |

|

@Mark-Simulacrum One thing I forgot to mention is that the But with #49219, methods will panic (including |

Decouple proc_macro from the rest of the compiler.

This PR removes all dependencies of `proc_macro` on compiler crates and allows multiple copies of `proc_macro`, built even by different compilers (but from the same source), to interoperate.

Practically, it allows:

* running proc macro tests at stage1 (I moved most from `-fulldeps` to the regular suites)

* using proc macros in the compiler itself (may require some rustbuild trickery)

On the server (i.e. compiler front-end) side:

* `server::*` traits are implemented to provide the concrete types and methods

* the concrete types are completely separated from the `proc_macro` public API

* the only use of the type implementing `Server` is to be passed to `Client::run`

On the client (i.e. proc macro) side (potentially using a different `proc_macro` instance!):

* `client::Client` wraps around client-side (expansion) function pointers

* it encapsulates the `proc_macro` instance used by the client

* its `run` method can be called by a server, to execute the client-side function

* the client instance is bridged to the provided server, while it runs

* ~~currently a thread is spawned, could use process isolation in the future~~

(not the case anymore, see #56058)

* proc macro crates get a generated `static` holding a `&[ProcMacro]`

* this describes all derives/attr/bang proc macros, replacing the "registrar" function

* each variant of `ProcMacro` contains an appropriately typed `Client<fn(...) -> ...>`

`proc_macro` public APIs call into the server via an internal "bridge":

* only a currently running proc macro `Client` can interact with those APIs

* server code might not be able to (if it uses a different `proc_macro` instance)

* however, it can always create and `run` its own `Client`, but that may be inefficient

* the `bridge` uses serialization, C ABI and integer handles to avoid Rust ABI instability

* each invocation of a proc macro results in disjoint integers in its `proc_macro` handles

* this prevents using values of those types across invocations (if they even can be kept)

r? @alexcrichton cc @jseyfried @nikomatsakis @Zoxc @thepowersgang

Decouple proc_macro from the rest of the compiler.

This PR removes all dependencies of `proc_macro` on compiler crates and allows multiple copies of `proc_macro`, built even by different compilers (but from the same source), to interoperate.

Practically, it allows:

* running proc macro tests at stage1 (I moved most from `-fulldeps` to the regular suites)

* using proc macros in the compiler itself (may require some rustbuild trickery)

On the server (i.e. compiler front-end) side:

* `server::*` traits are implemented to provide the concrete types and methods

* the concrete types are completely separated from the `proc_macro` public API

* the only use of the type implementing `Server` is to be passed to `Client::run`

On the client (i.e. proc macro) side (potentially using a different `proc_macro` instance!):

* `client::Client` wraps around client-side (expansion) function pointers

* it encapsulates the `proc_macro` instance used by the client

* its `run` method can be called by a server, to execute the client-side function

* the client instance is bridged to the provided server, while it runs

* ~~currently a thread is spawned, could use process isolation in the future~~

(not the case anymore, see #56058)

* proc macro crates get a generated `static` holding a `&[ProcMacro]`

* this describes all derives/attr/bang proc macros, replacing the "registrar" function

* each variant of `ProcMacro` contains an appropriately typed `Client<fn(...) -> ...>`

`proc_macro` public APIs call into the server via an internal "bridge":

* only a currently running proc macro `Client` can interact with those APIs

* server code might not be able to (if it uses a different `proc_macro` instance)

* however, it can always create and `run` its own `Client`, but that may be inefficient

* the `bridge` uses serialization, C ABI and integer handles to avoid Rust ABI instability

* each invocation of a proc macro results in disjoint integers in its `proc_macro` handles

* this prevents using values of those types across invocations (if they even can be kept)

r? @alexcrichton cc @jseyfried @nikomatsakis @Zoxc @thepowersgang

Decouple proc_macro from the rest of the compiler.

This PR removes all dependencies of `proc_macro` on compiler crates and allows multiple copies of `proc_macro`, built even by different compilers (but from the same source), to interoperate.

Practically, it allows:

* running proc macro tests at stage1 (I moved most from `-fulldeps` to the regular suites)

* using proc macros in the compiler itself (may require some rustbuild trickery)

On the server (i.e. compiler front-end) side:

* `server::*` traits are implemented to provide the concrete types and methods

* the concrete types are completely separated from the `proc_macro` public API

* the only use of the type implementing `Server` is to be passed to `Client::run`

On the client (i.e. proc macro) side (potentially using a different `proc_macro` instance!):

* `client::Client` wraps around client-side (expansion) function pointers

* it encapsulates the `proc_macro` instance used by the client

* its `run` method can be called by a server, to execute the client-side function

* the client instance is bridged to the provided server, while it runs

* ~~currently a thread is spawned, could use process isolation in the future~~

(not the case anymore, see #56058)

* proc macro crates get a generated `static` holding a `&[ProcMacro]`

* this describes all derives/attr/bang proc macros, replacing the "registrar" function

* each variant of `ProcMacro` contains an appropriately typed `Client<fn(...) -> ...>`

`proc_macro` public APIs call into the server via an internal "bridge":

* only a currently running proc macro `Client` can interact with those APIs

* server code might not be able to (if it uses a different `proc_macro` instance)

* however, it can always create and `run` its own `Client`, but that may be inefficient

* the `bridge` uses serialization, C ABI and integer handles to avoid Rust ABI instability

* each invocation of a proc macro results in disjoint integers in its `proc_macro` handles

* this prevents using values of those types across invocations (if they even can be kept)

r? @alexcrichton cc @jseyfried @nikomatsakis @Zoxc @thepowersgang

|

Is there any possible workaround for maintaining state between proc macros invocations? If not are there plans for a solution? |

|

What is the use case for that? |

|

@Mark-Simulacrum A mini web framework, looked at a couple of solutions, the two I've found so far is generating functions which is used by rocket and reset-router. Currently sparkles is using thread local state and it is what I was leaning towards before finding this issue. It has the added benefit of avoiding reset-router + rocket's namespacing problem |

|

Is what you want to do possible if we randomized the order we expand things in? If not, you shouldn't do it. OTOH, there may be something you could do by having a proc macro attribute on a module or entire crate (I don't think we currently support it, but it would allow more interesting transformations). |

Decouple proc_macro from the rest of the compiler.

This PR removes all dependencies of `proc_macro` on compiler crates and allows multiple copies of `proc_macro`, built even by different compilers (but from the same source), to interoperate.

Practically, it allows:

* running proc macro tests at stage1 (I moved most from `-fulldeps` to the regular suites)

* using proc macros in the compiler itself (may require some rustbuild trickery)

On the server (i.e. compiler front-end) side:

* `server::*` traits are implemented to provide the concrete types and methods

* the concrete types are completely separated from the `proc_macro` public API

* the only use of the type implementing `Server` is to be passed to `Client::run`

On the client (i.e. proc macro) side (potentially using a different `proc_macro` instance!):

* `client::Client` wraps around client-side (expansion) function pointers

* it encapsulates the `proc_macro` instance used by the client

* its `run` method can be called by a server, to execute the client-side function

* the client instance is bridged to the provided server, while it runs

* ~~currently a thread is spawned, could use process isolation in the future~~

(not the case anymore, see #56058)

* proc macro crates get a generated `static` holding a `&[ProcMacro]`

* this describes all derives/attr/bang proc macros, replacing the "registrar" function

* each variant of `ProcMacro` contains an appropriately typed `Client<fn(...) -> ...>`

`proc_macro` public APIs call into the server via an internal "bridge":

* only a currently running proc macro `Client` can interact with those APIs

* server code might not be able to (if it uses a different `proc_macro` instance)

* however, it can always create and `run` its own `Client`, but that may be inefficient

* the `bridge` uses serialization, C ABI and integer handles to avoid Rust ABI instability

* each invocation of a proc macro results in disjoint integers in its `proc_macro` handles

* this prevents using values of those types across invocations (if they even can be kept)

r? @alexcrichton cc @jseyfried @nikomatsakis @Zoxc @thepowersgang

|

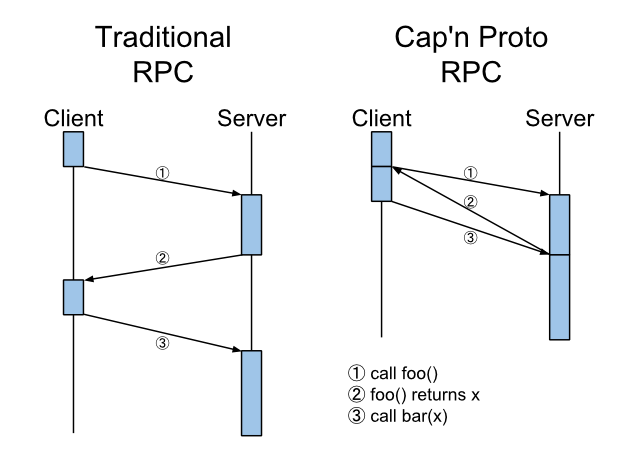

Regarding the performance issues, could pipelining be a potential approach? I'm not sure how complex would it be to implement, but as proc macros are mainly dealing transformations, not the value of Span itself, pipelining definitely can reduce RPC overhead here. |

|

@ishitatsuyuki I don't know what pipelining is. Can you explain your idea in more detail and/or give an example what would be different? |

|

@oli-obk The idea is that the client turns what would be a RPC (IPC/FFI, whatever) call into an embedded object in the final result instead. What I'm referring to by "pipelining" is this: In detail, I would be using Span transformation as an example. The client side Functions on |

|

Ah so this is a symbolic execution scheme for RPC calls, building a tree/DAG of operations that is only evaluated when absolutely necessary. I think with an arena for allocation this could be pretty efficient. Also, you can use integer IDs by pushing these to a buffer. cc @nikomatsakis Neat trick! (I just realized it would make hashing and equality checks more expensive for Ident and Span since it couldn't use the integer ID without checking it's not an unevaluated request) |

|

A technical reason could be to better report (and isolate) misbehaving proc-macros. |

The original purpose of most of this refactoring was actually to cache the generated `TokenStream`s, since constantly regenerating these takes a long time (an extra ~20000ns per call) and these calls happen thousands of times. However, it turns out that: 1. It not valid to retain any TokenStream between different invocations of a proc-macro. Trying will cause the compiler to crash (rust-lang/rust#66003); 2. `syn::Ident` has some problem where it will also cause the compiler to crash if it ends up in thread-local storage (rust-lang/rust#80473) and upstream seems to want to stop any thread-local storage in proc-macros eventually (rust-lang/rust#56058). Being able to cache these tokens would provide a small but decent speedup (~3%), so it is unfortunate that this is not possible. Still, the formatting changes made while trying to implement a cache seem reasonable enough to keep in the tree.

The original purpose of most of this refactoring was actually to cache the generated `TokenStream`s, since constantly regenerating these takes a long time (an extra ~20000ns per call) and these calls happen thousands of times. However, it turns out that: 1. It not valid to retain any TokenStream between different invocations of a proc-macro. Trying will cause the compiler to crash (rust-lang/rust#66003); 2. `syn::Ident` has some problem where it will also cause the compiler to crash if it ends up in thread-local storage (rust-lang/rust#80473) and upstream seems to want to stop any thread-local storage in proc-macros eventually (rust-lang/rust#56058). Being able to cache these tokens would provide a small but decent speedup (~3%), so it is unfortunate that this is not possible. Still, the formatting changes made while trying to implement a cache seem reasonable enough to keep in the tree.

The original purpose of most of this refactoring was actually to cache the generated `TokenStream`s, since constantly regenerating these takes a long time (an extra ~20000ns per call) and these calls happen thousands of times. However, it turns out that: 1. It not valid to retain any TokenStream between different invocations of a proc-macro. Trying will cause the compiler to crash (rust-lang/rust#66003); 2. `syn::Ident` has some problem where it will also cause the compiler to crash if it ends up in thread-local storage (rust-lang/rust#80473) and upstream seems to want to stop any thread-local storage in proc-macros eventually (rust-lang/rust#56058). Being able to cache these tokens would provide a small but decent speedup (~3%), so it is unfortunate that this is not possible. Still, the formatting changes made while trying to implement a cache seem reasonable enough to keep in the tree.

|

Would this open a path for running proc-macros as separate processes communicating with rustc over a pipe or unix-socket-like thing? That would be handy for being able to run statically linked versions of rustc on platforms without a dynamic linker. I'd imagine each proc-macro process should be able to handle multiple macro expansions without having to terminate and respawn for each one. |

|

Adding a reproducible failure case after finding the same problem as #86556: use serde::Deserialize;

use serde_json;

struct Coord(f64, f64);

impl<'de> Deserialize<'de> for Coord {

fn deserialize<D>(deserializer: D) -> Result<Self, D::Error>

where

D: serde::de::Deserializer<'de>,

{

#[derive(Deserialize)]

struct Inner {

x: f64,

y: f64,

}

let Inner { x, y } = Inner::deserialize(deserializer)?;

Ok(Coord(x, y))

}

}

fn main() -> Result<(), Box<dyn std::error::Error>> {

let db_content = r#"[{"x":1.0,"y":1.0},{"x":2.0,"y":17.0}]"#;

let _: Vec<(f64, f64)> = serde_json::from_str(db_content)?;

Ok(())

}[dependencies]

serde = { version = "=1.0.126", features = ["derive"] }

serde_json = "=1.0.64"Moving the |

|

Not sure if this is the exact goal of this thread, but I have a usecase where I have a derive macro used on structs to implement some trait. I would like an enum containing every struct that derived this type. For example If i had I would like to generate: |

#49219 introduces

proc_macro::bridge::server::{ExecutionStrategy,SameThread,CrossThread}.SameThreadhad to be used becauseCrossThreadwas a significant performance regression.Ideally, we'd use

CrossThread, which spawns a thread for each invocation, to prevent (and discourage) proc macros from using TLS for state between invocations.But we'd have to figure out how to make it not regress performance too much, if at all possible.

(it could be the use of channels, which are overkill since they never have more than one value)

cc @dtolnay @alexcrichton @petrochenkov

(TODO: update with regressions, if any, after the cross-thread crater run finishes on #49219)

The text was updated successfully, but these errors were encountered: