A Swift 4 framework for streaming remote audio with real-time effects using AVAudioEngine. Read the full article here!

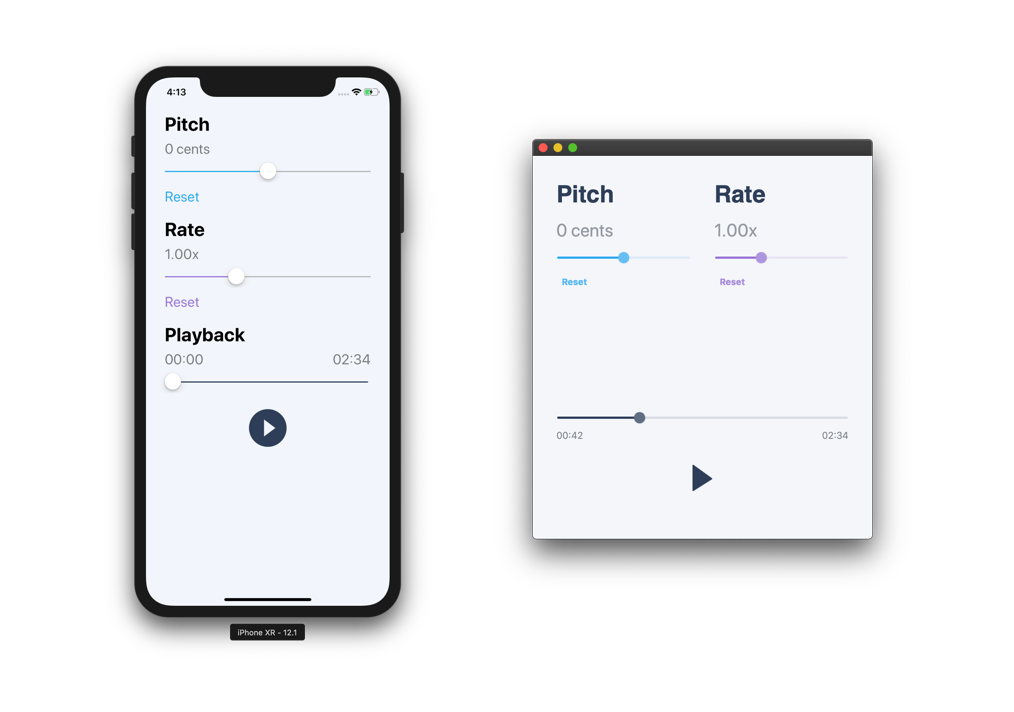

This repo contains two example projects, one for iOS and one for macOS, in the TimePitchStreamer.xcodeproj found in the Examples folder.

In this article we're going to use AVAudioEngine to build an audio streamer that allows adjusting the time and pitch of a song downloaded from the internet in realtime. Why would we possibly want to do such a thing? Read on!

- Our Final App

- How the web does it

- Working with the Audio Queue Services

- Working with the Audio Unit Processing Graph Services (i.e. AUGraph)

- Working with AVAudioEngine

- Building our AVAudioEngine streamer

- Building our TimePitchStreamer

- Building our UI

- Conclusion

- Credits

We're going to be streaming the song Rumble by Ben Sound. The remote URL for Rumble hosted by Fast Learner is:

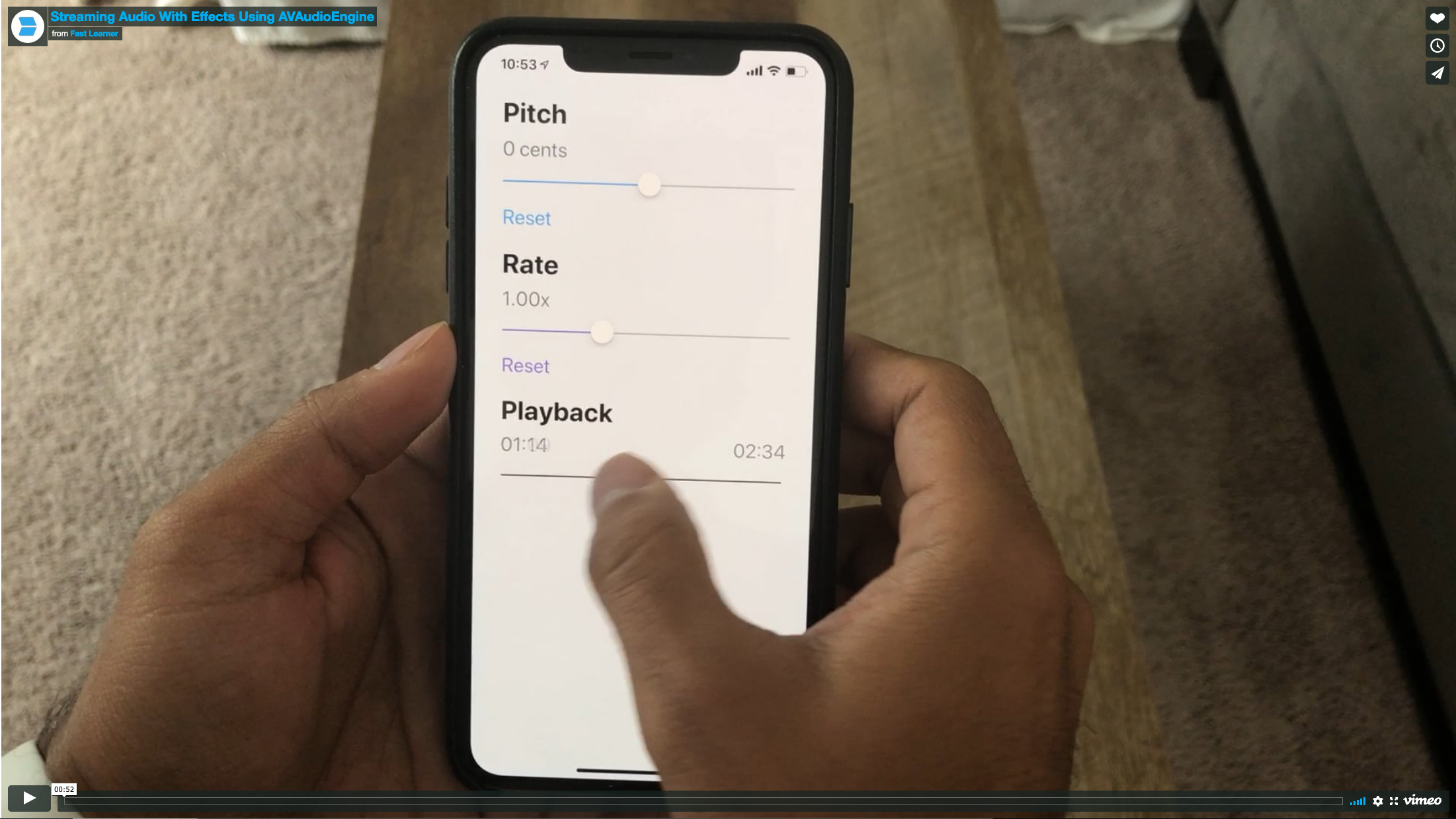

https://cdn.fastlearner.media/bensound-rumble.mp3

I say remote because this file is living on the internet, not locally. Below we have a video demonstrating the time/pitch shifting iOS app we'll build in this article. You'll learn how this app downloads, parses (i.e. decodes), and plays back Rumble. Much like any standard audio player, we have the usual functionality including play, pause, volume control, and position seek. In addition to those controls, however, we've added two sliders at the top that allow adjusting the pitch and playback rate (time) of the song.

Notice how we're able to change the pitch and playback rate in realtime. This would not be possible (at least in a sane way) without the AVAudioEngine! Before we dive into the implementation let's take a look at what we're trying to achieve conceptually. Since we're looking to stream an audio file that's living on the internet it'd be helpful to understand how the web does it since our iOS player will borrow those same concepts to download, enqueue, and stream the same audio data.

On the web we have an HTML5 <audio> element that allows us to stream an audio file from a URL using just a few lines of code. For instance, to play Rumble all we need to write is:

```html
<audio controls>
  <source src="https://cdn.fastlearner.media/bensound-rumble.mp3" type="audio/mpeg">
  Your browser does not support the audio element.
</audio>
```

This is super convenient for basic playback, but what if we wanted to add an effect? You'd need to use the Web Audio JavaScript API, which involves wrapping the audio element as a node in an audio graph. Here's an example of how we could add a lowpass filter using Web Audio:

```javascript
// Grab the audio element from the DOM
const audioNode = document.querySelector("audio");

// Use Web Audio to create an audio graph that uses the stream from the audio element
const audioCtx = new (window.AudioContext || window.webkitAudioContext)();
const sourceNode = audioCtx.createMediaElementSource(audioNode);

// Create the lowpass filter (a BiquadFilterNode's type defaults to "lowpass")
const lowpassNode = audioCtx.createBiquadFilter();

// Connect the source to the lowpass filter
sourceNode.connect(lowpassNode);

// Connect the lowpass filter to the output (speaker)
lowpassNode.connect(audioCtx.destination);
```

Pretty convenient, right? The audio graph in Web Audio allows us to chain together the audio stream, the lowpass effect, and the speaker, just like a guitarist would with a guitar, an effect pedal, and an amp.

Similar to how HTML5 provides us the <audio> tag, Apple provides us the AVPlayer from the AVFoundation framework to perform basic file streaming. For instance, we could use the AVPlayer to play the same song as before like so:

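```swift
import AVFoundation

// Keep a strong reference to the player (e.g. in a property). A player held only
// by a local constant is deallocated when the scope exits, which stops playback.
var player: AVPlayer?

if let url = URL(string: "https://cdn.fastlearner.media/bensound-rumble.mp3") {
    player = AVPlayer(url: url)
    player?.play()
}
```

Just like the <audio> tag, this would be perfect if we just needed to play the audio without applying any effects or visualizing it. However, if we wanted more flexibility then we'd need something similar to Web Audio's audio graph on iOS...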

Though the <audio> tag handles a little magic on the web that we'll need to handle ourselves with the AVAudioEngine, our final TimePitchStreamer will look similar to the Web Audio example above, which sets up a graph and forms connections between nodes. Note that until just a few years ago we'd have had to achieve this using either the Audio Queue Services or the Audio Unit Processing Graph Services. Since the AVAudioEngine is a hybrid of these two approaches, let's quickly review both.

The Audio Queue Services provide an API for playing and recording audio data coming from an arbitrary source. For instance, consider a walkie-talkie app where you had a peer-to-peer connection between two iOS devices and wanted to stream audio from one phone to another.

You wouldn't be able to use a file reference (i.e. AudioFileID or AVAudioFile) from the receiving phone because nothing is written to disk. Instead, you'd likely be using the MultipeerConnectivity framework to send data from one phone to another, packet by packet.

In this case, since we can't create a file reference we wouldn't be able to use an AVPlayer to play back the audio. Instead, we could make use of the Audio Queue Services to enqueue each buffer of audio data for playback as it is being received like so:

In this case, as we receive audio data on the device that will perform playback, we'd push those buffers onto a queue that takes care of scheduling each one for playback to the speakers on a first-in first-out (FIFO) basis. For an example implementation of a streamer using the Audio Queue Services, check out this mini-player open source project I did for Beats Music (now Apple Music) a few years ago.
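The queueing idea itself is simple. Here's a minimal pure-Swift sketch of the first-in first-out behavior, assuming each received chunk arrives as a `Data` value. `AudioBufferQueue` and its methods are hypothetical names for illustration only. They are not part of the Audio Queue Services, which is a C API built around `AudioQueueRef` and `AudioQueueEnqueueBuffer`.

```swift
import Foundation

/// Hypothetical FIFO of received audio buffers, illustrating the idea behind
/// Audio Queue Services: buffers are played in the order they arrive.
struct AudioBufferQueue {
    private var buffers: [Data] = []

    /// Called as each buffer arrives from the network (e.g. from a peer).
    mutating func enqueue(_ buffer: Data) {
        buffers.append(buffer)
    }

    /// Called by the playback side when it is ready for more audio.
    /// Returns nil when nothing has been received yet (an underrun).
    mutating func dequeue() -> Data? {
        return buffers.isEmpty ? nil : buffers.removeFirst()
    }

    var count: Int { return buffers.count }
}

var queue = AudioBufferQueue()
queue.enqueue(Data([0x01, 0x02]))
queue.enqueue(Data([0x03]))
let first = queue.dequeue()   // Data([0x01, 0x02]): first in, first out
```

An array with `removeFirst()` keeps the sketch short. A real player would typically use a ring buffer or a fixed pool of reusable buffers instead.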

On the modern web most media resources are compressed to save storage space and bandwidth on servers and content delivery networks (CDNs). An extremely handy feature of the Audio Queue Services is that they automatically handle decoding compressed formats like MP3 and AAC. As you'll see later, when using an AUGraph or AVAudioEngine you must decode any compressed audio data into linear pulse-code modulation format (LPCM, i.e. uncompressed) yourself in order to schedule it on a graph.
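To put rough numbers on those savings, here's a back-of-the-envelope calculation. It assumes CD-quality LPCM (44.1 kHz, 16-bit, stereo) and a typical 128 kbps MP3. These are illustrative values, not the actual encoding parameters of Rumble.

```swift
// Back-of-the-envelope comparison of uncompressed LPCM vs. a compressed MP3 stream.
// Assumed values: 44.1 kHz, 16-bit, stereo LPCM and a 128 kbps MP3 (illustrative only).
let sampleRate = 44_100          // frames per second
let bytesPerSample = 2           // 16-bit samples
let channelCount = 2             // stereo

// LPCM: every frame stores one sample per channel.
let lpcmBytesPerSecond = sampleRate * bytesPerSample * channelCount   // 176,400 B/s

// MP3 at a constant 128 kilobits per second.
let mp3BytesPerSecond = 128_000 / 8                                   // 16,000 B/s

let compressionRatio = Double(lpcmBytesPerSecond) / Double(mp3BytesPerSecond)
print("LPCM: \(lpcmBytesPerSecond) B/s, MP3: \(mp3BytesPerSecond) B/s, ratio: \(compressionRatio)")
```

In other words, under these assumptions every byte we download expands to roughly 11 bytes of LPCM once it's decoded for the graph.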

As cool as the Audio Queue Services are, unfortunately, adding in realtime effects such as the time-pitch shifter in our example app would still be rather complicated and involve the use of an Audio Unit, which we’ll discuss in detail in the next section. For now, let's move on from the Audio Queue Services and take a look at the Audio Unit Processing Graph Services.

The Audio Unit Processing Graph Services provide a graph-based API for playing uncompressed, LPCM audio data using nodes that are connected to each other. You can think of an audio graph as working much like the way musicians in a rock band set up their sound for a live show. The musicians each connect their instruments to a series of effects and into a mixer that combines the different audio streams into a single stream to play out the speakers. We can visualize this setup like so:

- Each musician starts producing audio using their instrument

- The guitar player needs to use a distortion effect so she connects her guitar to an effect pedal before connecting to the mixer

- The mixer takes an input from each musician and produces a single output to the speakers

- The speakers play out to the audience

Using the Audio Unit Processing Graph Services we could model the setup from above like so:

Notice how the output in this case pulls audio from each of the previous nodes. That is, the arrows flow right to left rather than left to right as in the rock band diagram above. We'll explore this in detail in the next section.

Specifically, when we’re working with the Audio Unit Processing Graph Services we’re dealing with the AUGraph interface, which has historically been the primary audio graph implementation in Apple's CoreAudio (specifically AudioToolbox) framework. Before the AVAudioEngine was introduced in 2014 this was the closest thing we had to the Web Audio graph implementation for writing iOS and macOS apps. The AUGraph provides the ability to manage an array of nodes and their connections. Think of it as a wrapper around the nodes we used to represent the rock band earlier.

As noted above, audio graphs work on a pull model: the last node of an audio graph pulls data from the node connected before it, which in turn pulls data from its own upstream node, and so on until the request reaches the first node. For our guitar player above, the flow of audio would go something like this (note the direction of the arrows):

Each render cycle of the audio graph would cause the output to pull audio data from the mixer, which would pull audio data from the distortion effect, which would then pull audio from the guitar. If the guitar at the head of the chain wasn't producing any sweet riffs it'd still be in charge of providing a silent buffer of audio for the rest of the graph to use. The head of an audio graph (i.e. the component most to the left) is referred to as a generator.
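To make the pull model concrete, here's a minimal pure-Swift sketch of the guitar chain. `PullNode`, `Guitar`, `Distortion`, and `Mixer` are hypothetical types for illustration, not AUGraph or Audio Unit APIs, and each buffer is a plain `[Float]`. The output drives every render cycle by asking the last node for frames, and each node first pulls from its inputs before doing its own work.

```swift
/// Minimal sketch of a pull-based audio graph (hypothetical types, not AUGraph).
/// Each node produces `frameCount` samples by first pulling from its inputs.
protocol PullNode: AnyObject {
    func render(frameCount: Int) -> [Float]
}

/// Generator at the head of the chain. With nothing to play it still returns a silent buffer.
final class Guitar: PullNode {
    var isPlaying = false
    func render(frameCount: Int) -> [Float] {
        // A stand-in "riff": a constant 0.5 while playing, silence otherwise.
        return [Float](repeating: isPlaying ? 0.5 : 0.0, count: frameCount)
    }
}

/// Effect: pulls from its upstream node, then processes the samples.
final class Distortion: PullNode {
    let input: PullNode
    let gain: Float
    init(input: PullNode, gain: Float) { self.input = input; self.gain = gain }
    func render(frameCount: Int) -> [Float] {
        // Boost, then hard-clip to [-1, 1]: a crude distortion.
        return input.render(frameCount: frameCount).map { max(-1, min(1, $0 * gain)) }
    }
}

/// Mixer: pulls from every input and sums them sample by sample.
final class Mixer: PullNode {
    let inputs: [PullNode]
    init(inputs: [PullNode]) { self.inputs = inputs }
    func render(frameCount: Int) -> [Float] {
        var mix = [Float](repeating: 0, count: frameCount)
        for input in inputs {
            for (i, sample) in input.render(frameCount: frameCount).enumerated() {
                mix[i] += sample
            }
        }
        return mix
    }
}

// The output drives each render cycle by pulling from the last node (the mixer).
let guitar = Guitar()
let mixer = Mixer(inputs: [Distortion(input: guitar, gain: 4)])
let silent = mixer.render(frameCount: 4)   // guitar idle -> [0, 0, 0, 0]
guitar.isPlaying = true
let loud = mixer.render(frameCount: 4)     // 0.5 * 4 = 2.0, clipped to 1.0
```

Note that nothing upstream "pushes". The guitar only produces samples because the mixer asked for them, which is exactly why an idle generator still has to hand back silence.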

Each of the nodes in an AUGraph handles a specific function whether it be generating, modifying, or outputting sound. In an AUGraph a node is referred to as an AUNode and wraps what is called an AudioUnit.

The AudioUnit is an incredibly important component of Core Audio. Each Audio Unit contains an implementation for generating or modifying streams of audio, or for providing I/O to the sound hardware on iOS/macOS/tvOS.

Think back to our guitar player using the distortion effect to modify the sound of her guitar. In the context of an AUGraph we’d use a distortion Audio Unit to handle processing that effect.

Audio Units, however, can do more than just apply an effect. Core Audio actually has specific Audio Units for capturing input from the mic or any connected instruments, for output to the speakers, and for offline rendering. Hence, each Audio Unit has a type, such as kAudioUnitType_Output or kAudioUnitType_Effect, and a subtype, such as kAudioUnitSubType_RemoteIO or kAudioUnitSubType_Distortion.

CoreAudio provides a bunch of super useful built-in Audio Units. These are described in an AudioComponentDescription using types and subtypes. A type is a high-level description of what the Audio Unit does. Is it a generator? A mixer? Each serves a different function in the context of a graph and has rules for how it can be used. As of iOS 12 we have the following types:

| Types |
|---|
| kAudioUnitType_Effect |
| kAudioUnitType_Mixer |
| kAudioUnitType_Output |
| kAudioUnitType_Panner |
| kAudioUnitType_Generator |
| kAudioUnitType_MusicDevice |
| kAudioUnitType_MusicEffect |
| kAudioUnitType_RemoteEffect |
| kAudioUnitType_MIDIProcessor |

A subtype is a low-level description of what an Audio Unit specifically does. Is it a time/pitch shifting effect? Is it an input that uses hardware-enabled voice processing? Is it a MIDI synth?

| Subtypes |
|---|
| kAudioUnitSubType_NewTimePitch |
| kAudioUnitSubType_MIDISynth |
| kAudioUnitSubType_Varispeed |
| kAudioUnitSubType_AUiPodTime |
| kAudioUnitSubType_Distortion |
| kAudioUnitSubType_MatrixMixer |
| kAudioUnitSubType_PeakLimiter |
| kAudioUnitSubType_SampleDelay |
| kAudioUnitSubType_ParametricEQ |
| kAudioUnitSubType_RoundTripAAC |
| kAudioUnitSubType_SpatialMixer |
| kAudioUnitSubType_GenericOutput |
| kAudioUnitSubType_LowPassFilter |
| kAudioUnitSubType_MultiSplitter |
| kAudioUnitSubType_BandPassFilter |
| kAudioUnitSubType_HighPassFilter |
| kAudioUnitSubType_LowShelfFilter |
| kAudioUnitSubType_AudioFilePlayer |
| kAudioUnitSubType_AUiPodTimeOther |
| kAudioUnitSubType_HighShelfFilter |
| kAudioUnitSubType_DeferredRenderer |
| kAudioUnitSubType_DynamicsProcessor |
| kAudioUnitSubType_MultiChannelMixer |
| kAudioUnitSubType_VoiceProcessingIO |
| kAudioUnitSubType_ScheduledSoundPlayer |

You may have noticed the time-pitch shift effect above (kAudioUnitSubType_NewTimePitch). We may be able to use something similar to this for our streamer!

Please note that this list is constantly getting updated with every new version of iOS and changes depending on whether you're targeting iOS, macOS, or tvOS so the best way to know what you have available is to check Apple's docs.
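Under the hood, the type, subtype, and manufacturer fields of an AudioComponentDescription are OSType values: four-character codes packed into a UInt32. The sketch below shows that packing, plus a hypothetical `ComponentDescriptionSketch` that mirrors the struct's five fields so it runs without AudioToolbox. On Apple platforms you'd use the real AudioComponentDescription and constants instead. The codes used here are the values Apple's headers define for kAudioUnitType_Output ('auou'), kAudioUnitSubType_RemoteIO ('rioc'), and kAudioUnitManufacturer_Apple ('appl').

```swift
/// Packs a four-character code such as "aufx" into a UInt32, first character in the
/// most significant byte, the same layout as C multi-character literals like 'aufx'.
func fourCC(_ code: String) -> UInt32 {
    let scalars = Array(code.unicodeScalars)
    precondition(scalars.count == 4 && scalars.allSatisfy { $0.isASCII }, "expected 4 ASCII characters")
    return scalars.reduce(0) { ($0 << 8) | UInt32($1.value) }
}

/// Hypothetical stand-in mirroring the fields of AudioToolbox's AudioComponentDescription.
struct ComponentDescriptionSketch {
    var componentType: UInt32          // e.g. kAudioUnitType_Output
    var componentSubType: UInt32       // e.g. kAudioUnitSubType_RemoteIO
    var componentManufacturer: UInt32  // e.g. kAudioUnitManufacturer_Apple
    var componentFlags: UInt32
    var componentFlagsMask: UInt32
}

// Describes Apple's RemoteIO output unit.
let remoteIO = ComponentDescriptionSketch(
    componentType: fourCC("auou"),
    componentSubType: fourCC("rioc"),
    componentManufacturer: fourCC("appl"),
    componentFlags: 0,
    componentFlagsMask: 0)
```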

For this article we're not going to be directly using Audio Units in our streamer, but understanding the anatomy of one will help us get familiar with the terminology used in the AVAudioEngine. Let's analyze Apple's diagram of an Audio Unit:

Let’s break down what you’re seeing above:

- An Audio Unit contains 3 different “scopes”. The left side, where the audio flows in, is the Input scope, while the right side, where the audio flows out, is the Output scope. The Global scope refers to the global state of the Audio Unit.

- Each scope of an Audio Unit has a stream description describing the format of the audio data (in the form of an AudioStreamBasicDescription).

- For each scope of an Audio Unit there can be n channels, where the Audio Unit’s implementation specifies the maximum number of channels it supports. You can query how many channels an Audio Unit supports for its input and output scopes.

- The main logic of an Audio Unit occurs in its DSP block, shown in the center. Different types of units will either generate or process sound.

- Audio Units can use a render callback, which is a function you implement to either provide your own data to an Audio Unit (in the form of an AudioBufferList to the Input scope) or process data from an Audio Unit after processing has been performed (using the Output scope). When providing your own data in a render callback it is essential that its stream format matches the stream format of the Input scope.
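The stream description is where the format arithmetic lives. For uncompressed, interleaved LPCM each packet holds exactly one frame, and each frame holds one sample per channel. The sketch below mirrors a few AudioStreamBasicDescription fields in a hypothetical `LPCMStreamDescription` struct so it runs without Core Audio. It derives the byte counts for 44.1 kHz stereo Float32, which is an assumed example format.

```swift
/// Hypothetical mirror of a subset of AudioStreamBasicDescription's fields,
/// filled in for interleaved, uncompressed (LPCM) audio.
struct LPCMStreamDescription {
    let sampleRate: Double        // mSampleRate
    let channelsPerFrame: UInt32  // mChannelsPerFrame
    let bitsPerChannel: UInt32    // mBitsPerChannel

    // LPCM always has one frame per packet.
    var framesPerPacket: UInt32 { return 1 }                                   // mFramesPerPacket
    // Interleaved: one sample per channel in each frame.
    var bytesPerFrame: UInt32 { return channelsPerFrame * bitsPerChannel / 8 } // mBytesPerFrame
    var bytesPerPacket: UInt32 { return bytesPerFrame * framesPerPacket }      // mBytesPerPacket

    /// Bytes needed to hold `seconds` of audio in this format.
    func byteCount(forSeconds seconds: Double) -> Int {
        return Int(seconds * sampleRate) * Int(bytesPerFrame)
    }
}

// 44.1 kHz, stereo, 32-bit float samples.
let stereoFloat = LPCMStreamDescription(sampleRate: 44_100, channelsPerFrame: 2, bitsPerChannel: 32)
```

For non-interleaved formats, which AVAudioEngine's standard format uses, each channel lives in its own buffer. In that case mBytesPerFrame describes a single channel (4 bytes for Float32) rather than the whole frame.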

You can see a real-world implementation of an AUGraph in the EZOutput class of the EZAudio framework I wrote a little while back.

The Audio Unit Processing Graph Services require the audio data flowing through each node to be in an LPCM format and do not automatically perform any decoding like we'd get using the Audio Queue Services. If we’d like to use an AUGraph for streaming and support formats like MP3 or AAC, we’d have to perform the decoding ourselves and then pass the LPCM data into the graph.

It should be noted that using a node configured with the kAudioUnitSubType_AUConverter subtype does not handle compressed format conversions so we’d still need to use the Audio Converter Services to do that conversion on the fly.

At WWDC 2017 Apple announced the AUGraph would be deprecated in 2018 in favor of the AVAudioEngine. We can see this is indeed the case by browsing the AUGraph documentation and looking at all the deprecation warnings.

Since teaching you how to write an audio streamer using deprecated technology would've killed the whole vibe of Fast Learner we'll move on to our implementation using the AVAudioEngine.

You can think of the AVAudioEngine as something between a queue and a graph that serves as the missing link between the AVPlayer and the Audio Queue Services and Audio Unit Processing Graph Services. Whereas the Audio Queue Services and Audio Unit Processing Graph Services were originally C-based APIs, the AVAudioEngine was introduced in 2014 using a higher-level Objective-C/Swift interface.

To create an instance of the AVAudioEngine in Swift (4.2) all we need to do is write:

```swift
import AVFoundation

let engine = AVAudioEngine()
```

Next we can create and connect generators, effects, and mixer nodes similar to how we did using an AUGraph. For instance, if we wanted to play a local audio file with a delay effect we could use the AVAudioPlayerNode and the AVAudioUnitDelay:

```swift
// Assumes `engine` is the AVAudioEngine created above and that this code runs
// inside a function or method (the `return` in step 5 exits early on failure).

// Create the nodes (1)
let playerNode = AVAudioPlayerNode()
let delayNode = AVAudioUnitDelay()

// Attach the nodes (2)
engine.attach(playerNode)
engine.attach(delayNode)

// Connect the nodes (3)
engine.connect(playerNode, to: delayNode, format: nil)
engine.connect(delayNode, to: engine.mainMixerNode, format: nil)

// Prepare the engine (4)
engine.prepare()

// Schedule file (5)
do {
    // Local files only. URL(fileURLWithPath:) is non-optional, so no `!` is needed.
    let url = URL(fileURLWithPath: "path_to_your_local_file")
    let file = try AVAudioFile(forReading: url)
    playerNode.scheduleFile(file, at: nil, completionHandler: nil)
} catch {
    print("Failed to create file: \(error.localizedDescription)")
    return
}

// Set up delay parameters (6)
delayNode.delayTime = 0.8     // seconds
delayNode.feedback = 80       // percent (-100 to 100)
delayNode.wetDryMix = 50      // percent (0 to 100)

// Start the engine and player node (7)
do {
    try engine.start()
    playerNode.play()
} catch {
    print("Failed to start engine: \(error.localizedDescription)")
}
```

Here's a breakdown of what we just did:

- Created nodes for the file player and delay effect. The delay's class, AVAudioUnitDelay, is a subclass of AVAudioUnitEffect, which is the AVFoundation wrapper for an Audio Unit. In the previous section we went into detail about Audio Units, so this should hopefully be familiar!

- We then attached the player and delay nodes to the engine. This is similar to the AUGraphAddNode method for AUGraph and works in a similar way (the engine now owns these nodes).

- Next we connected the nodes: first the player node into the delay node, and then the delay node into the engine's main mixer node. This is similar to the AUGraphConnectNodeInput method for AUGraph and can be thought of like the guitar player's setup from earlier (guitar -> pedal -> mixer is now player -> delay -> mixer), where we're using the player node instead of a guitar as a generator.

- We then prepared the engine for playback. It is at this point that the engine preallocates all the resources it needs for playback. This is similar to the AUGraphInitialize method for AUGraph.

- Next we created a file for reading and scheduled it onto the player node. The file is an AVAudioFile, which is provided by AVFoundation for generic audio reading/writing. The player node has a handy method for efficiently scheduling audio from an AVAudioFile, but also supports scheduling individual buffers of audio in the form of AVAudioPCMBuffer. Note this only works for local files (nothing on the internet)!

- Set the parameters on the delay node so we can hear the delay effect.

- Finally we started the engine and the player node. Once the engine is running we can start the player node at any time, but we start it immediately in this example.

A key difference between the AVAudioEngine and the AUGraph is in how we provide the audio data. AUGraph works on a pull model where we provide audio buffers in the form of AudioBufferList in a render callback whenever the graph needs it.

AVAudioEngine, on the other hand, works on a push model similar to the Audio Queue Services. We schedule files or audio buffers in the form of AVAudioFile or AVAudioPCMBuffer onto the player node. The player node then internally handles providing the data for the engine to consume at runtime.

We'll keep the push model in mind as we move into the next section.
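To see how a push API can sit on top of a pull-based render loop, here's a pure-Swift sketch of a player that accepts scheduled buffers (push) and hands frames to whoever renders it (pull), padding with silence when it runs dry. `ScheduledPlayerSketch` is hypothetical. It only imitates the role the AVAudioPlayerNode plays in the engine and says nothing about how Apple actually implements it. Buffers are plain `[Float]` arrays for simplicity.

```swift
/// Hypothetical player: buffers are pushed in via `schedule`, and the engine
/// pulls frames out via `render`, mirroring the AVAudioPlayerNode's role.
final class ScheduledPlayerSketch {
    private var pending: [[Float]] = []   // scheduled buffers, FIFO
    private var readIndex = 0             // position inside pending.first

    /// Push side: called by our streamer whenever it has new LPCM samples.
    func schedule(_ buffer: [Float]) {
        guard !buffer.isEmpty else { return }
        pending.append(buffer)
    }

    /// Pull side: called once per render cycle for `frameCount` frames.
    /// Frames may span several scheduled buffers; gaps are filled with silence.
    func render(frameCount: Int) -> [Float] {
        var output: [Float] = []
        output.reserveCapacity(frameCount)
        while output.count < frameCount, let current = pending.first {
            let take = min(frameCount - output.count, current.count - readIndex)
            output.append(contentsOf: current[readIndex..<(readIndex + take)])
            readIndex += take
            if readIndex == current.count {   // finished this buffer, move to the next
                pending.removeFirst()
                readIndex = 0
            }
        }
        // Underrun: nothing left to play, so pad with silence.
        output.append(contentsOf: [Float](repeating: 0, count: frameCount - output.count))
        return output
    }
}

let player = ScheduledPlayerSketch()
player.schedule([0.1, 0.2, 0.3])
player.schedule([0.4, 0.5])
let cycle1 = player.render(frameCount: 4)   // [0.1, 0.2, 0.3, 0.4]
let cycle2 = player.render(frameCount: 4)   // [0.5, 0.0, 0.0, 0.0]
```

The design choice to pad with silence on an underrun mirrors the pull-model rule from the AUGraph section: whoever is asked for frames must always return a full buffer.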

Because the AVAudioEngine works like a hybrid between the Audio Queue Services and Audio Unit Processing Graph Services we can combine what we know about each to create a streamer that schedules audio like a queue, but supports real-time effects like an audio graph.

At a high level, here's what we'd like to achieve:

Here's a breakdown of the streamer's components:

- Download the audio data from the internet. We know we need to pull raw audio data from somewhere. How we implement the downloader doesn't matter as long as we're receiving audio data in its binary format (i.e. Data in Swift 4).

- Parse the binary audio data into audio packets. To do this we will use the often confusing, but very awesome, Audio File Stream Services API.

- Read the parsed audio packets into LPCM audio packets. To handle any format conversion required (specifically compressed to uncompressed) we'll be using the Audio Converter Services API.

- Stream (i.e. play back) the LPCM audio packets using an AVAudioEngine by scheduling them onto the AVAudioPlayerNode at the head of the engine.

In the following sections we're going to dive into the implementation of each of these components. We're going to use a protocol-based approach to define the functionality we'd expect from each component and then do a concrete implementation. For instance, for the Download component we're going to define a Downloading protocol and perform a concrete implementation of the protocol using the URLSession in the Downloader class.

Let's start by defining a Downloading protocol that we can use to fetch our audio data.

```swift
import Foundation

public protocol Downloading: class {

    // MARK: - Properties

    /// A receiver implementing the `DownloadingDelegate` to receive state change, completion, and progress events from the `Downloading` instance.
    var delegate: DownloadingDelegate? { get set }

    /// The current progress of the downloader. Ranges from 0.0 - 1.0, default is 0.0.
    var progress: Float { get }

    /// The current state of the downloader. See `DownloadingState` for the different possible states.
    var state: DownloadingState { get }

    /// A `URL` representing the current URL the downloader is fetching. This is an optional because this protocol is designed to allow classes implementing the `Downloading` protocol to be used as singletons for many different URLs so a common cache can be used to avoid redownloading the same resources.
    var url: URL? { get set }

    // MARK: - Methods

    /// Starts the downloader
    func start()

    /// Pauses the downloader
    func pause()

    /// Stops and/or aborts the downloader. This should invalidate all cached data under the hood.
    func stop()
}
```

At a high level we expect to have a delegate (defined below) to receive the binary audio data as it arrives, a progress value for how much of the total data has been downloaded, and a state (defined below) to indicate whether the download has started, stopped, or paused.

```swift
public protocol DownloadingDelegate: class {
    func download(_ download: Downloading, changedState state: DownloadingState)
    func download(_ download: Downloading, completedWithError error: Error?)
    func download(_ download: Downloading, didReceiveData data: Data, progress: Float)
}
```

```swift
public enum DownloadingState: String {
    case completed
    case started
    case paused
    case notStarted
    case stopped
}
```

Our Downloader class is going to be the concrete implementation of the Downloading protocol and use the URLSession to perform the networking request. Let's start by implementing the properties for the Downloading protocol.

```swift
import Foundation

public class Downloader: NSObject, Downloading {

    // `weak` avoids a retain cycle between the downloader and its delegate.
    public weak var delegate: DownloadingDelegate?

    public var progress: Float = 0

    public var state: DownloadingState = .notStarted {
        didSet {
            delegate?.download(self, changedState: state)
        }
    }

    public var url: URL? {
        didSet {
            // Changing the URL cancels any in-flight download and resets the counters.
            // (`session`, `task`, and the byte counters are defined in the next snippet.)
            if state == .started {
                stop()
            }

            if let url = url {
                progress = 0.0
                state = .notStarted
                totalBytesCount = 0
                totalBytesReceived = 0
                task = session.dataTask(with: url)
            } else {
                task = nil
            }
        }
    }
}
```

Next we're going to define our URLSession related properties.

```swift
public class Downloader: NSObject, Downloading {

    // ...Downloading properties

    /// The `URLSession` currently being used as the HTTP/HTTPS implementation for the downloader.
    /// Note: URLSession keeps a strong reference to its delegate until the session is invalidated.
    fileprivate lazy var session: URLSession = {
        return URLSession(configuration: .default, delegate: self, delegateQueue: nil)
    }()

    /// A `URLSessionDataTask` representing the data operation for the current `URL`.
    fileprivate var task: URLSessionDataTask?

    /// An `Int64` representing the total amount of bytes received
    var totalBytesReceived: Int64 = 0

    /// An `Int64` representing the total amount of bytes for the entire file
    var totalBytesCount: Int64 = 0
}
```

Now we'll implement the Downloading protocol's methods for start(), pause(), and stop().

```swift
public class Downloader: NSObject, Downloading {

    // ...Properties

    public func start() {
        guard let task = task else {
            return
        }

        switch state {
        case .completed, .started:
            return
        default:
            state = .started
            task.resume()
        }
    }

    public func pause() {
        guard let task = task else {
            return
        }

        guard state == .started else {
            return
        }

        state = .paused
        task.suspend()
    }

    public func stop() {
        guard let task = task else {
            return
        }

        // A paused (suspended) task should be cancellable too.
        guard state == .started || state == .paused else {
            return
        }

        state = .stopped
        task.cancel()
    }
}
```

Finally, we go ahead and implement the URLSessionDataDelegate methods on the Downloader to receive the data from the URLSession.

```swift
extension Downloader: URLSessionDataDelegate {

    public func urlSession(_ session: URLSession, dataTask: URLSessionDataTask, didReceive response: URLResponse, completionHandler: @escaping (URLSession.ResponseDisposition) -> Void) {
        // -1 (NSURLResponseUnknownLength) when the server doesn't report a length
        totalBytesCount = response.expectedContentLength
        completionHandler(.allow)
    }

    public func urlSession(_ session: URLSession, dataTask: URLSessionDataTask, didReceive data: Data) {
        totalBytesReceived += Int64(data.count)
        // Guard against an unknown (-1) or zero content length.
        if totalBytesCount > 0 {
            progress = Float(totalBytesReceived) / Float(totalBytesCount)
        }
        delegate?.download(self, didReceiveData: data, progress: progress)
    }

    public func urlSession(_ session: URLSession, task: URLSessionTask, didCompleteWithError error: Error?) {
        state = .completed
        delegate?.download(self, completedWithError: error)
    }
}
```

Nice! Our Downloader is now complete and ready to download a song from the internet using a URL. As the song downloads and we receive each chunk of binary audio data, we report it to a receiver via the delegate's download(_:didReceiveData:progress:) method.
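One payoff of the protocol-based design is that anything consuming a Downloading can be exercised without a network. Here's a hypothetical `InMemoryDownloader` test double that delivers a `Data` blob in fixed-size chunks, synchronously. The protocols are repeated so the snippet compiles on its own (using `AnyObject`, the equivalent and newer spelling of the `class` constraint). The chunk size and synchronous delivery are assumptions for testing, not how the Downloader behaves.

```swift
import Foundation

// Copies of the protocols above so this sketch is self-contained.
public protocol DownloadingDelegate: AnyObject {
    func download(_ download: Downloading, changedState state: DownloadingState)
    func download(_ download: Downloading, completedWithError error: Error?)
    func download(_ download: Downloading, didReceiveData data: Data, progress: Float)
}

public enum DownloadingState: String {
    case completed, started, paused, notStarted, stopped
}

public protocol Downloading: AnyObject {
    var delegate: DownloadingDelegate? { get set }
    var progress: Float { get }
    var state: DownloadingState { get }
    var url: URL? { get set }
    func start()
    func pause()
    func stop()
}

/// Hypothetical test double: "downloads" an in-memory blob in fixed-size chunks.
final class InMemoryDownloader: Downloading {
    weak var delegate: DownloadingDelegate?
    private(set) var progress: Float = 0
    private(set) var state: DownloadingState = .notStarted {
        didSet { delegate?.download(self, changedState: state) }
    }
    var url: URL?

    private let payload: Data
    private let chunkSize: Int
    private var offset = 0

    init(payload: Data, chunkSize: Int) {
        self.payload = payload
        self.chunkSize = max(1, chunkSize)
    }

    func start() {
        guard state != .started, state != .completed else { return }
        state = .started
        // Deliver chunks until done, or until a delegate callback pauses/stops us.
        while state == .started && offset < payload.count {
            let end = min(offset + chunkSize, payload.count)
            let chunk = payload.subdata(in: offset..<end)
            offset = end
            progress = Float(offset) / Float(payload.count)
            delegate?.download(self, didReceiveData: chunk, progress: progress)
        }
        if state == .started && offset == payload.count {
            state = .completed
            delegate?.download(self, completedWithError: nil)
        }
    }

    func pause() { if state == .started { state = .paused } }
    func stop() { state = .stopped }
}
```

A parser (or any other consumer) can now be driven by `InMemoryDownloader` in a unit test, fed from a local fixture instead of the CDN.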

To handle converting the audio data from a Downloading instance into audio packets, let's go ahead and define a Parsing protocol.

```swift
import AVFoundation

public protocol Parsing: class {

    // MARK: - Properties

    /// (1)
    var dataFormat: AVAudioFormat? { get }

    /// (2)
    var duration: TimeInterval? { get }

    /// (3)
    var isParsingComplete: Bool { get }

    /// (4)
    var packets: [(Data, AudioStreamPacketDescription?)] { get }

    /// (5)
    var totalFrameCount: AVAudioFrameCount? { get }

    /// (6)
    var totalPacketCount: AVAudioPacketCount? { get }

    // MARK: - Methods

    /// (7)
    func parse(data: Data) throws

    /// (8)
    func frameOffset(forTime time: TimeInterval) -> AVAudioFramePosition?

    /// (9)
    func packetOffset(forFrame frame: AVAudioFramePosition) -> AVAudioPacketCount?

    /// (10)
    func timeOffset(forFrame frame: AVAudioFrameCount) -> TimeInterval?
}
```

We'd expect a Parsing to have a few important properties:

- A dataFormat property that describes the format of the audio packets.

- A duration property that describes the total duration of the file in seconds.

- An isParsingComplete property indicating whether all the packets have been parsed. This is always evaluated as the count of the packets property being equal to the totalPacketCount property. We'll do a default implementation of this in the next section.

- A packets property that holds an array of tuples. Each tuple contains a chunk of binary audio data (Data) and an optional packet description (AudioStreamPacketDescription) if it is a compressed format.

- A totalFrameCount property that describes the total number of frames in the entire audio file.

- A totalPacketCount property that describes the total number of packets in the entire audio file.

In addition, we define a few methods that will allow us to parse and seek through the audio packets.

- A parse(data:) method that takes in binary audio data and progressively parses it to provide us the properties listed above.

- A frameOffset(forTime:) method that provides a frame offset given a time in seconds. This method and the next two are needed for handling seek operations.

- A packetOffset(forFrame:) method that provides a packet offset given a frame.

- A timeOffset(forFrame:) method that provides a time offset given a frame.
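To make the seek arithmetic concrete, here's a pure-Swift sketch of the conversions between time, frames, and packets that these three methods need. `SeekMath` is a hypothetical helper that uses plain Double/Int64/UInt32 in place of the AVFoundation typealiases so it runs anywhere. The numbers are assumptions: a 44.1 kHz MP3 with 1152 frames per packet (the MPEG-1 Layer III frame size) and a made-up packet count chosen so the arithmetic comes out even.

```swift
/// Hypothetical helper showing the time <-> frame <-> packet conversions used for seeking.
/// Plain Swift types stand in for TimeInterval, AVAudioFramePosition, and AVAudioPacketCount.
struct SeekMath {
    let sampleRate: Double
    let framesPerPacket: UInt32
    let totalPacketCount: UInt32

    var totalFrameCount: UInt32 { return totalPacketCount * framesPerPacket }
    var duration: Double { return Double(totalFrameCount) / sampleRate }

    /// Seconds -> frame: scale the total frame count by how far into the file `time` is.
    func frameOffset(forTime time: Double) -> Int64 {
        return Int64(Double(totalFrameCount) * (time / duration))
    }

    /// Frame -> packet: integer division gives the packet that contains `frame`.
    func packetOffset(forFrame frame: Int64) -> UInt32 {
        return UInt32(frame) / framesPerPacket
    }

    /// Frame -> seconds: the inverse of frameOffset(forTime:).
    func timeOffset(forFrame frame: UInt32) -> Double {
        return Double(frame) / Double(totalFrameCount) * duration
    }
}

let mp3 = SeekMath(sampleRate: 44_100, framesPerPacket: 1_152, totalPacketCount: 1_225)
let seekFrame = mp3.frameOffset(forTime: 10)               // 441,000
let seekPacket = mp3.packetOffset(forFrame: seekFrame)     // 382
let seekTime = mp3.timeOffset(forFrame: UInt32(seekFrame)) // 10.0
```

Because compressed packets can't be split, a seek to 10 seconds snaps down to the start of packet 382 (frame 440,064), which is 936 frames or about 21 ms early under these assumptions.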

Luckily, since we're likely to find ourselves writing the same code to define the duration, totalFrameCount, and isParsingComplete properties as well as the frameOffset(forTime:), packetOffset(forFrame:), and timeOffset(forFrame:) methods we can add an extension directly on the Parsing protocol to provide a default implementation of these.

```swift
extension Parsing {

    public var duration: TimeInterval? {
        guard let sampleRate = dataFormat?.sampleRate else {
            return nil
        }

        guard let totalFrameCount = totalFrameCount else {
            return nil
        }

        return TimeInterval(totalFrameCount) / TimeInterval(sampleRate)
    }

    public var totalFrameCount: AVAudioFrameCount? {
        guard let framesPerPacket = dataFormat?.streamDescription.pointee.mFramesPerPacket else {
            return nil
        }

        guard let totalPacketCount = totalPacketCount else {
            return nil
        }

        return AVAudioFrameCount(totalPacketCount) * AVAudioFrameCount(framesPerPacket)
    }

    public var isParsingComplete: Bool {
        guard let totalPacketCount = totalPacketCount else {
            return false
        }

        return packets.count == totalPacketCount
    }

    public func frameOffset(forTime time: TimeInterval) -> AVAudioFramePosition? {
        guard let _ = dataFormat?.streamDescription.pointee,
            let frameCount = totalFrameCount,
            let duration = duration else {
                return nil
        }

        let ratio = time / duration
        return AVAudioFramePosition(Double(frameCount) * ratio)
    }

    public func packetOffset(forFrame frame: AVAudioFramePosition) -> AVAudioPacketCount? {
        guard let framesPerPacket = dataFormat?.streamDescription.pointee.mFramesPerPacket else {
            return nil
        }

        return AVAudioPacketCount(frame) / AVAudioPacketCount(framesPerPacket)
    }

    public func timeOffset(forFrame frame: AVAudioFrameCount) -> TimeInterval? {
        guard let _ = dataFormat?.streamDescription.pointee,
            let frameCount = totalFrameCount,
            let duration = duration else {
                return nil
        }

        return TimeInterval(frame) / TimeInterval(frameCount) * duration
    }
}
```

Our Parser class is going to be a concrete implementation of the Parsing protocol and use the Audio File Stream Services API to convert the binary audio into audio packets. Let's start by implementing the properties for the Parsing protocol:

```swift
import AVFoundation

public class Parser: Parsing {
    public internal(set) var dataFormat: AVAudioFormat?
    public internal(set) var packets = [(Data, AudioStreamPacketDescription?)]()

    // (1)
    public var totalPacketCount: AVAudioPacketCount? {
        guard let _ = dataFormat else {
            return nil
        }

        return max(AVAudioPacketCount(packetCount), AVAudioPacketCount(packets.count))
    }
}
```

- Note that we're determining the total packet count to be the maximum of either the packetCount property, which is a one-time parsed value from the Audio File Stream Services, or the total number of packets received so far (packets.count).

Next we're going to define our Audio File Stream Services related properties.

```swift
public class Parser: Parsing {

    // ...Parsing properties

    /// A `UInt64` corresponding to the total frame count parsed by the Audio File Stream Services
    public internal(set) var frameCount: UInt64 = 0

    /// A `UInt64` corresponding to the total packet count parsed by the Audio File Stream Services
    public internal(set) var packetCount: UInt64 = 0

    /// The `AudioFileStreamID` used by the Audio File Stream Services for converting the binary data into audio packets
    fileprivate var streamID: AudioFileStreamID?
}
```

Next we're going to define a default initializer that will create a new streamID, which is required before we can use the Audio File Stream Services to parse any audio data.

```swift
public class Parser: Parsing {

    // ...Properties

    public init() throws {
        // (1)
        let context = unsafeBitCast(self, to: UnsafeMutableRawPointer.self)

        // (2)
        guard AudioFileStreamOpen(context, ParserPropertyChangeCallback, ParserPacketCallback, kAudioFileMP3Type, &streamID) == noErr else {
            throw ParserError.streamCouldNotOpen
        }
    }
}
```

- We're creating a context object that we can pass into the AudioFileStreamOpen function, which will allow us to access our Parser instance from within the C callback functions.

- We initialize the Audio File Stream by calling the AudioFileStreamOpen() function and passing our context object and the callbacks we want to be notified through anytime new data has been parsed.

The two callback methods for the Audio File Stream Services we'll used are defined below.

ParserPropertyChangeCallback: This is triggered when the Audio File Stream Services has enough data to provide a property such as the total packet count or data format.ParserPacketCallback: This is triggered when the Audio File Stream Services has enough data to provide audio packets and, if it's a compressed format such as MP3 or AAC, audio packet descriptions.

Note the use of the unsafeBitCast method above used to create an UnsafeMutableRawPointer representation of the Parser instance to pass into the callbacks. In Core Audio we're typically dealing with C-based APIs and these callbacks are actually static C functions that are defined outside of the Obj-C/Swift class interfaces so the only way we can grab the instance of the Parser is by passing it in as a context object (in C this would be a void *). This will make more sense when we define our callbacks.

Before that, however, let's define our parse(data:) method from the Parsing protocol.

public class Parser: Parsing {

...Properties

...Init

public func parse(data: Data) throws {

let streamID = self.streamID!

let count = data.count

_ = try data.withUnsafeBytes { (bytes: UnsafePointer<UInt8>) in

let result = AudioFileStreamParseBytes(streamID, UInt32(count), bytes, [])

guard result == noErr else {

throw ParserError.failedToParseBytes(result)

}

}

}

}

Since the Audio File Stream Services is a C-based API, we need to extract a pointer to the binary audio data from the Data object. We do this using the withUnsafeBytes method on Data and pass those bytes to the AudioFileStreamParseBytes method, which will invoke either the ParserPropertyChangeCallback or the ParserPacketCallback once it has enough audio data.
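Here's the same "hand Data to a C function" pattern without Core Audio, as a sketch. fakeParseBytes is a made-up stand-in for AudioFileStreamParseBytes, and the closure uses the UnsafeRawBufferPointer form of withUnsafeBytes, which replaced the typed-pointer form used in the listing above (deprecated as of Swift 5).

```swift
import Foundation

/// Hypothetical stand-in for a C parsing function such as AudioFileStreamParseBytes:
/// it takes a byte count plus a raw pointer and returns 0 on success.
/// Here it only adds up the bytes so we can see they arrived intact.
func fakeParseBytes(_ count: UInt32, _ bytes: UnsafeRawPointer?, _ sum: inout Int) -> Int32 {
    guard let bytes = bytes else { return -1 } // nonzero means failure
    for offset in 0..<Int(count) {
        sum += Int(bytes.load(fromByteOffset: offset, as: UInt8.self))
    }
    return 0
}

struct ParseFailed: Error {
    let status: Int32
}

func parse(_ data: Data) throws -> Int {
    var sum = 0
    // The buffer pointer is only valid inside this closure,
    // so the C-style call has to happen in here.
    let status = data.withUnsafeBytes { (buffer: UnsafeRawBufferPointer) -> Int32 in
        return fakeParseBytes(UInt32(buffer.count), buffer.baseAddress, &sum)
    }
    guard status == 0 else { throw ParseFailed(status: status) }
    return sum
}

let total = try parse(Data([1, 2, 3, 4]))
print(total) // 10
```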

As we pass audio data to the Audio File Stream Services via our parse(data:) method it will first call the property listener callback to indicate the various properties have been extracted. These include:

| Audio File Stream Properties |
|---|
| kAudioFileStreamProperty_ReadyToProducePackets |
| kAudioFileStreamProperty_FileFormat |
| kAudioFileStreamProperty_DataFormat |
| kAudioFileStreamProperty_AudioDataByteCount |
| kAudioFileStreamProperty_AudioDataPacketCount |
| kAudioFileStreamProperty_DataOffset |
| kAudioFileStreamProperty_BitRate |
| kAudioFileStreamProperty_FormatList |
| kAudioFileStreamProperty_MagicCookieData |
| kAudioFileStreamProperty_MaximumPacketSize |
| kAudioFileStreamProperty_ChannelLayout |
| kAudioFileStreamProperty_PacketToFrame |
| kAudioFileStreamProperty_FrameToPacket |
| kAudioFileStreamProperty_PacketToByte |
| kAudioFileStreamProperty_ByteToPacket |
| kAudioFileStreamProperty_PacketTableInfo |
| kAudioFileStreamProperty_PacketSizeUpperBound |
| kAudioFileStreamProperty_AverageBytesPerPacket |
| kAudioFileStreamProperty_InfoDictionary |

For the purposes of our Parser we only care about the kAudioFileStreamProperty_DataFormat and the kAudioFileStreamProperty_AudioDataPacketCount. Let's define our callback:

func ParserPropertyChangeCallback(_ context: UnsafeMutableRawPointer, _ streamID: AudioFileStreamID, _ propertyID: AudioFileStreamPropertyID, _ flags: UnsafeMutablePointer<AudioFileStreamPropertyFlags>) {

let parser = Unmanaged<Parser>.fromOpaque(context).takeUnretainedValue()

/// Parse the various properties

switch propertyID {

case kAudioFileStreamProperty_DataFormat:

var format = AudioStreamBasicDescription()

GetPropertyValue(&format, streamID, propertyID)

parser.dataFormat = AVAudioFormat(streamDescription: &format)

case kAudioFileStreamProperty_AudioDataPacketCount:

GetPropertyValue(&parser.packetCount, streamID, propertyID)

default:

break

}

}

Note that we're able to obtain the instance of our Parser using the Unmanaged interface to cast the context pointer back to the appropriate class instance. Since our parser callbacks are not happening on a realtime audio thread, this type of casting is OK.
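The context-pointer round trip is easier to see in isolation. Below is a sketch with no Core Audio involved: simulateCAPI is a hypothetical stand-in for a C API like AudioFileStreamOpen that holds on to a context pointer and hands it back to a @convention(c) callback, which can't capture Swift state. We create the pointer with Unmanaged.passUnretained(_:).toOpaque(), which gives an unretained pointer equivalent to the unsafeBitCast(self, to:) call in the Parser's initializer.

```swift
/// A C function pointer can't capture Swift variables, so the only way to reach
/// an instance from inside it is an opaque "context" pointer argument.
typealias CStyleCallback = @convention(c) (UnsafeMutableRawPointer, UInt32) -> Void

final class PacketCounter {
    var total: UInt32 = 0
}

/// Hypothetical stand-in for a C API such as AudioFileStreamOpen: it holds on to
/// the context pointer and passes it back to the callback on every event.
func simulateCAPI(context: UnsafeMutableRawPointer, callback: CStyleCallback) {
    for packetCount: UInt32 in [3, 5, 7] {
        callback(context, packetCount)
    }
}

let counter = PacketCounter()
// Unretained, like the Parser's context: `counter` must outlive every callback.
let context = Unmanaged.passUnretained(counter).toOpaque()

simulateCAPI(context: context) { rawContext, packetCount in
    // Same recovery step as ParserPropertyChangeCallback and ParserPacketCallback.
    let instance = Unmanaged<PacketCounter>.fromOpaque(rawContext).takeUnretainedValue()
    instance.total += packetCount
}
print(counter.total) // 15
```

Because neither approach retains the object, the Parser has to stay alive for as long as its streamID can still fire callbacks.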

Also note that we're using a generic helper method called GetPropertyValue(_:_:_:) to get the actual property values from the streamID. We can define that method like so:

func GetPropertyValue<T>(_ value: inout T, _ streamID: AudioFileStreamID, _ propertyID: AudioFileStreamPropertyID) {

var propSize: UInt32 = 0

guard AudioFileStreamGetPropertyInfo(streamID, propertyID, &propSize, nil) == noErr else {

return

}

guard AudioFileStreamGetProperty(streamID, propertyID, &propSize, &value) == noErr else {

return

}

}

Here we're wrapping the Audio File Stream Services C-based API for getting property values. Like many other Core Audio APIs, we first need to get the size of the property and then pass in that size as well as a variable to hold the actual value. Because of this we make the value itself generic and use the inout keyword to indicate the method is going to write a value back to the argument passed in instead of returning a new value.
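To make the two-step "get the size, then get the value" pattern concrete, here's a sketch against a fake property API. fakeGetPropertyInfo and fakeGetProperty are hypothetical stand-ins for AudioFileStreamGetPropertyInfo and AudioFileStreamGetProperty. One deliberate difference from GetPropertyValue(_:_:_:): this wrapper refuses to write when the reported size is larger than T, since writing past the end of value would corrupt memory.

```swift
/// Hypothetical property ID and stored value standing in for a parsed stream property.
let packetCountPropertyID: UInt32 = 1
let storedPacketCount: UInt64 = 8_462

/// Step 1 of the pattern: report how many bytes the property needs.
func fakeGetPropertyInfo(_ id: UInt32, _ size: inout UInt32) -> Int32 {
    guard id == packetCountPropertyID else { return -1 }
    size = UInt32(MemoryLayout<UInt64>.size)
    return 0
}

/// Step 2 of the pattern: copy the value into caller-provided memory.
func fakeGetProperty(_ id: UInt32, _ size: inout UInt32, _ out: UnsafeMutableRawPointer) -> Int32 {
    guard id == packetCountPropertyID, Int(size) >= MemoryLayout<UInt64>.size else { return -1 }
    out.storeBytes(of: storedPacketCount, as: UInt64.self)
    return 0
}

/// Generic wrapper in the spirit of GetPropertyValue(_:_:_:): the caller owns the
/// storage, so the result is written back through `inout` instead of being returned.
func getPropertyValue<T>(_ value: inout T, _ id: UInt32) -> Bool {
    var size: UInt32 = 0
    guard fakeGetPropertyInfo(id, &size) == 0 else { return false }
    // Refuse to write more bytes than `T` can hold.
    guard Int(size) <= MemoryLayout<T>.size else { return false }
    return withUnsafeMutableBytes(of: &value) { raw in
        fakeGetProperty(id, &size, raw.baseAddress!) == 0
    }
}

var packetCount: UInt64 = 0
let ok = getPropertyValue(&packetCount, packetCountPropertyID)
print(ok, packetCount) // true 8462
```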

Next, once enough audio data has been passed to the Audio File Stream Services and property parsing is complete, it will be ready to produce packets and will keep triggering the ParserPacketCallback as it creates more audio packets. Let's define our packet callback:

func ParserPacketCallback(_ context: UnsafeMutableRawPointer, _ byteCount: UInt32, _ packetCount: UInt32, _ data: UnsafeRawPointer, _ packetDescriptions: UnsafeMutablePointer<AudioStreamPacketDescription>) {

// (1)

let parser = Unmanaged<Parser>.fromOpaque(context).takeUnretainedValue()

// (2)

let packetDescriptionsOrNil: UnsafeMutablePointer<AudioStreamPacketDescription>? = packetDescriptions

let isCompressed = packetDescriptionsOrNil != nil

// (3)

guard let dataFormat = parser.dataFormat else {

return

}

// (4)

if isCompressed {

for i in 0 ..< Int(packetCount) {

let packetDescription = packetDescriptions[i]

let packetStart = Int(packetDescription.mStartOffset)

let packetSize = Int(packetDescription.mDataByteSize)

let packetData = Data(bytes: data.advanced(by: packetStart), count: packetSize)

parser.packets.append((packetData, packetDescription))

}

} else {

let format = dataFormat.streamDescription.pointee

let bytesPerPacket = Int(format.mBytesPerPacket)

for i in 0 ..< Int(packetCount) {

let packetStart = i * bytesPerPacket

let packetSize = bytesPerPacket

let packetData = Data(bytes: data.advanced(by: packetStart), count: packetSize)

parser.packets.append((packetData, nil))

}

}

}

Let's go through what's happening here:

- We cast the context pointer back to our Parser instance.
- We then need to check if we're dealing with a compressed format (like MP3 or AAC) or not. We actually have to cast the packetDescriptions argument back into an optional and check if it's nil. This is a bug with the Audio File Stream Services where the Swift interface generated from the original C interface should have an optional argument for packetDescriptions. If you're reading this and are in the Core Audio team please fix this! :D
- Next, we check if the dataFormat of the Parser is defined so we know how many bytes correspond to one packet of audio data.
- Finally, we iterate through the number of packets produced and create a tuple corresponding to a single packet of audio data, including the packet description if we're dealing with a compressed format. Note the use of the advanced(by:) method on the data argument to make sure we're obtaining the audio data at the right byte offset. For uncompressed formats like WAV (LPCM) we don't need any packet descriptions, so we just set it to nil.
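Here's the slicing logic from the last step on its own, as a sketch over a Data chunk instead of the callback's raw pointer. PacketDescription is a hypothetical mirror of the two AudioStreamPacketDescription fields the Parser reads (mStartOffset and mDataByteSize).

```swift
import Foundation

/// Hypothetical mirror of the AudioStreamPacketDescription fields the Parser uses.
struct PacketDescription {
    let startOffset: Int   // like mStartOffset
    let byteSize: Int      // like mDataByteSize
}

/// Compressed formats: each packet's offset and size come from its description.
func slicePackets(_ bytes: Data, descriptions: [PacketDescription]) -> [Data] {
    return descriptions.map { d in
        bytes.subdata(in: d.startOffset ..< d.startOffset + d.byteSize)
    }
}

/// Uncompressed formats: packet i simply starts at i * bytesPerPacket.
func slicePackets(_ bytes: Data, packetCount: Int, bytesPerPacket: Int) -> [Data] {
    return (0..<packetCount).map { i in
        let start = i * bytesPerPacket
        return bytes.subdata(in: start ..< start + bytesPerPacket)
    }
}

let chunk = Data([10, 11, 12, 13, 14, 15, 16, 17])
// Variable-size packets of 3, 1 and 4 bytes, as a compressed stream might produce.
let compressed = slicePackets(chunk, descriptions: [
    PacketDescription(startOffset: 0, byteSize: 3),
    PacketDescription(startOffset: 3, byteSize: 1),
    PacketDescription(startOffset: 4, byteSize: 4),
])
// 16-bit stereo LPCM: one frame per packet, 4 bytes per packet.
let pcm = slicePackets(chunk, packetCount: 2, bytesPerPacket: 4)
print(compressed.map { $0.count }, pcm.map { $0.count }) // [3, 1, 4] [4, 4]
```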

We've successfully completed writing our Parser, a concrete implementation of the Parsing protocol that can handle converting binary audio provided by a Downloading into audio packets thanks to the Audio File Stream Services. Note that these parsed audio packets are not guaranteed to be LPCM, so if we're dealing with a compressed format like MP3 or AAC we still can't play these packets in an AVAudioEngine. In the next section we'll define a Reading protocol that will use a Parsing to get audio packets that we will then convert into LPCM audio packets for our AVAudioEngine to play.

To handle converting the audio packets from a Parsing into LPCM audio packets for our AVAudioEngine to read let's define a Reading protocol.

public protocol Reading {

// MARK: - Properties

/// (1)

var currentPacket: AVAudioPacketCount { get }

/// (2)

var parser: Parsing { get }

/// (3)

var readFormat: AVAudioFormat { get }

// MARK: - Initializers

/// (4)

init(parser: Parsing, readFormat: AVAudioFormat) throws

// MARK: - Methods

/// (5)

func read(_ frames: AVAudioFrameCount) throws -> AVAudioPCMBuffer

/// (6)

func seek(_ packet: AVAudioPacketCount) throws

}

In a Reading interface we'd expect the following properties:

- A currentPacket property representing the index of the next packet to read. All future reads start from here.
- A parser property representing a Parsing that should be used to read the source audio packets from.
- A readFormat property representing the LPCM audio format that the audio packets from a Parsing should be converted to. This LPCM format will be playable by the AVAudioEngine.

In addition, we specify an initializer:

- An init(parser:readFormat:) method that takes in a Parsing to provide the source audio packets as well as an AVAudioFormat that will be the format the source audio packets are converted to.

And finally, we define the two important methods:

- A read(_:) method that provides an AVAudioPCMBuffer containing the LPCM audio data corresponding to the number of frames specified. This data will be obtained by pulling the audio packets from the Parsing and converting them into the LPCM format specified by the readFormat property.
- A seek(_:) method that provides the ability to safely change the packet index specified by the currentPacket property. Specifically, when doing a seek operation we want to ensure we're not in the middle of a read operation.

Our Reader class is going to be a concrete implementation of the Reading protocol and use the Audio Converter Services API to convert the parsed audio packets into LPCM audio packets suitable for playback. Let's start by implementing the properties for the Reading protocol:

import AVFoundation

public class Reader: Reading {

public internal(set) var currentPacket: AVAudioPacketCount = 0

public let parser: Parsing

public let readFormat: AVAudioFormat

}

Next we're going to define our converter and a queue to make sure our operations are thread-safe.

public class Reader: Reading {

...Reading properties

/// An `AudioConverterRef` used to do the conversion from the source format of the `parser` (i.e. the `sourceFormat`) to the read destination (i.e. the `destinationFormat`). This is provided by the Audio Converter Services (I prefer it to the `AVAudioConverter`)

var converter: AudioConverterRef? = nil

/// A serial `DispatchQueue` used to ensure any operations that change the current packet index are thread-safe

private let queue = DispatchQueue(label: "com.fastlearner.streamer")

}

Next we're going to define the required initializer from the Reading protocol:

public class Reader: Reading {

...Properties

public required init(parser: Parsing, readFormat: AVAudioFormat) throws {

self.parser = parser

guard let dataFormat = parser.dataFormat else {

throw ReaderError.parserMissingDataFormat

}

let sourceFormat = dataFormat.streamDescription

let commonFormat = readFormat.streamDescription

let result = AudioConverterNew(sourceFormat, commonFormat, &converter)

guard result == noErr else {

throw ReaderError.unableToCreateConverter(result)

}

self.readFormat = readFormat

}

// Make sure we dispose the converter when this class is deallocated

deinit {

guard AudioConverterDispose(converter!) == noErr else {

return

}

}

}

Note that when we try to create a new converter using AudioConverterNew, we check that it was created successfully. If not, we throw an error to prevent a reader from being created without a proper converter. We'll define the ReaderError values below:

public enum ReaderError: LocalizedError {

case cannotLockQueue

case converterFailed(OSStatus)

case failedToCreateDestinationFormat

case failedToCreatePCMBuffer

case notEnoughData

case parserMissingDataFormat

case reachedEndOfFile

case unableToCreateConverter(OSStatus)

}

Now we're ready to define our read method:

public class Reader: Reading {

...Properties

...Initializer

public func read(_ frames: AVAudioFrameCount) throws -> AVAudioPCMBuffer {

let framesPerPacket = readFormat.streamDescription.pointee.mFramesPerPacket

var packets = frames / framesPerPacket

/// (1)

guard let buffer = AVAudioPCMBuffer(pcmFormat: readFormat, frameCapacity: frames) else {

throw ReaderError.failedToCreatePCMBuffer

}

buffer.frameLength = frames

// (2)

try queue.sync {

let context = unsafeBitCast(self, to: UnsafeMutableRawPointer.self)

let status = AudioConverterFillComplexBuffer(converter!, ReaderConverterCallback, context, &packets, buffer.mutableAudioBufferList, nil)

guard status == noErr else {

switch status {

case ReaderMissingSourceFormatError:

throw ReaderError.parserMissingDataFormat

case ReaderReachedEndOfDataError:

throw ReaderError.reachedEndOfFile

case ReaderNotEnoughDataError:

throw ReaderError.notEnoughData

default:

throw ReaderError.converterFailed(status)

}

}

}

return buffer

}

}

This may look like a lot, but let's break it down.

- First we allocate an AVAudioPCMBuffer to hold the target audio data in the read format.
- Next we use the AudioConverterFillComplexBuffer() method to fill the buffer allocated in step (1) with the requested number of frames. Similar to how we did with the Parser, we'll define a C callback function called ReaderConverterCallback for providing the source audio packets needed in the LPCM conversion. We'll define the converter callback soon, but note that we wrap the conversion operation in a synchronous call on our serial queue to ensure thread safety, since we will be modifying the currentPacket property within the converter callback.

Finally let's define the seek method:

public class Reader: Reading {

...Properties

...Initializer

...Read

public func seek(_ packet: AVAudioPacketCount) throws {

queue.sync {

currentPacket = packet

}

}

}

Short and sweet! All we do is set the current packet to the one specified, but wrap it in a synchronous queue call to make it thread-safe.
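The locking in read(_:) and seek(_:) boils down to one rule: anything that touches currentPacket runs on the same serial queue. Here's an audio-free sketch of that rule; PacketCursor is a hypothetical type, and its queue.sync calls play the role they do in the Reader.

```swift
import Dispatch

/// Hypothetical, audio-free model of the Reader's locking: every access to the
/// packet index goes through one serial queue, so a seek can never interleave
/// with a read that is advancing the index.
final class PacketCursor {
    private var packet = 0
    private let queue = DispatchQueue(label: "example.cursor") // serial by default

    var currentPacket: Int {
        return queue.sync { packet }
    }

    /// Consumes up to `count` packets (bounded by `available`) and returns their indices.
    func read(_ count: Int, available: Int) -> Range<Int> {
        return queue.sync {
            let start = min(packet, available)
            let end = min(start + count, available)
            packet = end
            return start ..< end
        }
    }

    func seek(to newPacket: Int) {
        queue.sync { packet = newPacket }
    }
}

let cursor = PacketCursor()
// 100 concurrent single-packet reads: without the queue, increments could be lost.
DispatchQueue.concurrentPerform(iterations: 100) { _ in
    _ = cursor.read(1, available: 1_000)
}
print(cursor.currentPacket)             // 100
cursor.seek(to: 10)
print(cursor.read(5, available: 1_000)) // 10..<15
```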

Now we're ready to define our converter callback ReaderConverterCallback:

func ReaderConverterCallback(_ converter: AudioConverterRef,

_ packetCount: UnsafeMutablePointer<UInt32>,

_ ioData: UnsafeMutablePointer<AudioBufferList>,

_ outPacketDescriptions: UnsafeMutablePointer<UnsafeMutablePointer<AudioStreamPacketDescription>?>?,

_ context: UnsafeMutableRawPointer?) -> OSStatus {

let reader = Unmanaged<Reader>.fromOpaque(context!).takeUnretainedValue()

// (1)

guard let sourceFormat = reader.parser.dataFormat else {

return ReaderMissingSourceFormatError

}

// (2)

let packetIndex = Int(reader.currentPacket)

let packets = reader.parser.packets

let isEndOfData = packetIndex >= packets.count // valid indices are 0..<packets.count

if isEndOfData {

if reader.parser.isParsingComplete {

packetCount.pointee = 0

return ReaderReachedEndOfDataError

} else {

return ReaderNotEnoughDataError

}

}

// (3)

let packet = packets[packetIndex]

var data = packet.0

let dataCount = data.count

ioData.pointee.mNumberBuffers = 1

ioData.pointee.mBuffers.mData = UnsafeMutableRawPointer.allocate(byteCount: dataCount, alignment: 0)

_ = data.withUnsafeMutableBytes { (bytes: UnsafeMutablePointer<UInt8>) in

memcpy((ioData.pointee.mBuffers.mData?.assumingMemoryBound(to: UInt8.self))!, bytes, dataCount)

}

ioData.pointee.mBuffers.mDataByteSize = UInt32(dataCount)

// (4)

let sourceFormatDescription = sourceFormat.streamDescription.pointee

if sourceFormatDescription.mFormatID != kAudioFormatLinearPCM {

if outPacketDescriptions?.pointee == nil {

outPacketDescriptions?.pointee = UnsafeMutablePointer<AudioStreamPacketDescription>.allocate(capacity: 1)

}

outPacketDescriptions?.pointee?.pointee.mDataByteSize = UInt32(dataCount)

outPacketDescriptions?.pointee?.pointee.mStartOffset = 0

outPacketDescriptions?.pointee?.pointee.mVariableFramesInPacket = 0

}

packetCount.pointee = 1

reader.currentPacket = reader.currentPacket + 1

return noErr

}

- Make sure we have a valid source format so we know the data format of the parser's audio packets.
- We check to make sure we haven't reached the end of the data we have available in the parser. The two scenarios where this could occur are if we've reached the end of the file, or if we've reached the end of the data we currently have downloaded but not the entire file.
- We grab the packet available at the current packet index and fill in the ioData object with the contents of that packet. Note that we're providing the packet data 1 packet at a time.
- If we're dealing with a compressed format then we also must provide the packet descriptions so the Audio Converter Services can use them to appropriately convert those packets to LPCM.
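The bounds check in step (2) chooses between two very different outcomes, so it's worth modeling on its own. Below is an audio-free sketch; PullResult and pullNextPacket are hypothetical, and the real callback reports these outcomes as the custom OSStatus values ReaderNotEnoughDataError and ReaderReachedEndOfDataError. Note the boundary: since the last valid index is count - 1, we only run out of data once the index reaches the number of available packets.

```swift
/// Hypothetical, audio-free model of the bounds check in ReaderConverterCallback.
enum PullResult: Equatable {
    case packet(index: Int)
    case notEnoughData     // we consumed everything downloaded so far, more is coming
    case reachedEndOfData  // parsing is complete and every packet has been consumed
}

func pullNextPacket(currentPacket: inout Int, packetsAvailable: Int, isParsingComplete: Bool) -> PullResult {
    // Valid indices are 0 ..< packetsAvailable.
    guard currentPacket < packetsAvailable else {
        return isParsingComplete ? .reachedEndOfData : .notEnoughData
    }
    // Hand out exactly one packet per call, like the real callback.
    let index = currentPacket
    currentPacket += 1
    return .packet(index: index)
}

var currentPacket = 0
var results: [PullResult] = []
// Two packets downloaded so far: the third pull has to wait for more data...
for _ in 0..<3 {
    results.append(pullNextPacket(currentPacket: &currentPacket, packetsAvailable: 2, isParsingComplete: false))
}
// ...and once parsing is complete, the same position means end of file.
results.append(pullNextPacket(currentPacket: &currentPacket, packetsAvailable: 2, isParsingComplete: true))
print(results)
```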

That wraps up our Reader implementation. At this point we've implemented the logic we need to download a file and get LPCM audio that we can feed into an AVAudioEngine. Let's move on to our Streaming interface.

The Streaming protocol will perform playback using an AVAudioEngine via an AVAudioPlayerNode, and handle the flow of data between a Downloading, Parsing, and Reading.

public protocol Streaming: class {

// MARK: - Properties

/// (1)

var currentTime: TimeInterval? { get }

/// (2)

var delegate: StreamingDelegate? { get set }

/// (3)

var duration: TimeInterval? { get }

/// (4)

var downloader: Downloading { get }

/// (5)

var parser: Parsing? { get }

/// (6)

var reader: Reading? { get }

/// (7)

var engine: AVAudioEngine { get }

/// (8)

var playerNode: AVAudioPlayerNode { get }

/// (9)

var readBufferSize: AVAudioFrameCount { get }

/// (10)

var readFormat: AVAudioFormat { get }

/// (11)

var state: StreamingState { get }

/// (12)

var url: URL? { get }

/// (13)

var volume: Float { get set }

// MARK: - Methods

/// (14)

func play()

/// (15)

func pause()

/// (16)

func stop()

/// (17)

func seek(to time: TimeInterval) throws

}

There's a lot going on above, so let's break it down, starting with the properties:

- A currentTime property representing the current play time in seconds.
- A delegate property that allows another class to respond to changes to the streamer. See the StreamingDelegate interface below.
- A duration property representing the current duration in seconds.
- A downloader property that represents the Downloading instance used to pull the binary audio data.
- A parser property that represents the Parsing instance used to convert the binary audio data from the downloader into audio packets.
- A reader property that represents the Reading instance used to convert the parsed audio packets from the parser into LPCM audio packets for playback.
- An engine property that represents the AVAudioEngine we're using to actually perform the playback.
- A playerNode property that represents the AVAudioPlayerNode that we will use to schedule the LPCM audio packets from the reader for playback into the engine.
- A readBufferSize property representing how many frames of LPCM audio each buffer scheduled onto the playerNode should contain.
- A readFormat property representing an LPCM audio format that will be used by the engine and playerNode. This is the target format the reader will convert the audio packets coming from the parser to.
- A state property that represents the current state of the streamer. The StreamingState is defined below.
- A url property representing the URL (i.e. internet link) of the current audio file being streamed.
- A volume property representing the current volume of the engine. Our demo app doesn't expose a UI for this, but if you wanted a user interface that allowed adjusting the volume you'd want this.

Phew! So those are all the properties we needed to define our Streaming protocol. Next we need to define the four most common audio player methods you're likely to find.

- A play() method that will begin audio playback.
- A pause() method that will be used to pause the audio playback.
- A stop() method that will be used to stop the audio playback (go back to the beginning and deallocate all scheduled buffers in the playerNode).
- A seek(to:) method that will allow us to seek to different portions of the audio file.

Let's quickly define the StreamingDelegate and the StreamingState we mentioned above.

public protocol StreamingDelegate: class {

func streamer(_ streamer: Streaming, failedDownloadWithError error: Error, forURL url: URL)

func streamer(_ streamer: Streaming, updatedDownloadProgress progress: Float, forURL url: URL)

func streamer(_ streamer: Streaming, changedState state: StreamingState)

func streamer(_ streamer: Streaming, updatedCurrentTime currentTime: TimeInterval)

func streamer(_ streamer: Streaming, updatedDuration duration: TimeInterval)

}

public enum StreamingState: String {

case stopped

case paused

case playing

}

Finally, we can create an extension on the Streaming protocol to define a default readBufferSize and readFormat that should work most of the time.

extension Streaming {

public var readBufferSize: AVAudioFrameCount {

return 8192

}

public var readFormat: AVAudioFormat {

return AVAudioFormat(commonFormat: .pcmFormatFloat32, sampleRate: 44100, channels: 2, interleaved: false)!

}

}

Now that we've defined the Streaming protocol, as well as concrete classes implementing the Downloading, Parsing, and Reading protocols (the Downloader, Parser, and Reader, respectively), we're now ready to implement our AVAudioEngine-based streamer! Like we've done before, let's start by defining the Streaming properties:

/// (1)

open class Streamer: Streaming {

/// (2)

public var currentTime: TimeInterval? {

guard let nodeTime = playerNode.lastRenderTime,

let playerTime = playerNode.playerTime(forNodeTime: nodeTime) else {

return nil

}

let currentTime = TimeInterval(playerTime.sampleTime) / playerTime.sampleRate

return currentTime + currentTimeOffset

}

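/// Weak, so a delegate that owns the streamer (e.g. a view controller) doesn't create a retain cycle

public weak var delegate: StreamingDelegate?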

public internal(set) var duration: TimeInterval?

public lazy var downloader: Downloading = {

let downloader = Downloader()

downloader.delegate = self

return downloader

}()

public internal(set) var parser: Parsing?

public internal(set) var reader: Reading?

public let engine = AVAudioEngine()

public let playerNode = AVAudioPlayerNode()

public internal(set) var state: StreamingState = .stopped {

didSet {

delegate?.streamer(self, changedState: state)

}

}

/// (3)

public var url: URL? {

didSet {

reset()

if let url = url {

downloader.url = url

downloader.start()

}

}

}

// (4)

public var volume: Float {

get {

return engine.mainMixerNode.outputVolume

}

set {

engine.mainMixerNode.outputVolume = newValue

}

}

}

Above we define quite a few properties. Specifically, I wanted to touch on a few things that are important to note at this point.

- Instead of being a public class, we're making the Streamer an open class. This is because we intend on subclassing it later and only want the base implementation to worry about setting up the essentials for our engine and coordinating the downloader, parser, and reader. In order to implement the time-pitch shifting (or any other combination of effects) streamer, we will later subclass the Streamer and override a few methods to attach and connect different effect nodes.
- The currentTime is calculated using the sampleTime of the playerNode. When a seek operation is performed, the player node's sample time actually gets reset to 0 because we call the stop() method on it, so we need to store another variable that holds our current time offset. We will define that offset as currentTimeOffset.
- Whenever a new url is set on the Streamer, we call a reset() method (which we'll define shortly) to reset the playback state and deallocate all resources relating to the current url.
- We provide get/set access to the volume by reading and writing the outputVolume property of the main mixer node of the AVAudioEngine.
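Here's a sketch of the currentTime arithmetic with the player node replaced by a plain sample count. PlaybackClock is hypothetical, and we're assuming seek(to:) (not shown yet) stores the requested time in currentTimeOffset before restarting the node.

```swift
import Foundation

/// Hypothetical model of Streamer.currentTime: the player node's sample clock
/// restarts from 0 whenever the node is stopped (as it is during a seek), so the
/// time we seeked to is kept separately and added back on.
struct PlaybackClock {
    let sampleRate: Double
    var currentTimeOffset: TimeInterval = 0

    /// Seconds rendered since the node last started, plus the seek offset.
    func currentTime(sampleTime: Int64) -> TimeInterval {
        return Double(sampleTime) / sampleRate + currentTimeOffset
    }

    /// Assumption: seek(to:) records the target time before restarting the node.
    mutating func didSeek(to time: TimeInterval) {
        currentTimeOffset = time
    }
}

var clock = PlaybackClock(sampleRate: 44_100)
print(clock.currentTime(sampleTime: 88_200)) // 2.0
clock.didSeek(to: 30)
// The node's sample time restarted from 0; one second of audio later:
print(clock.currentTime(sampleTime: 44_100)) // 31.0
```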

Now let's define the rest of the properties we will need inside the Streamer.

open class Streamer: Streaming {

...Streaming Properties

/// A `TimeInterval` used to calculate the current play time relative to a seek operation.

var currentTimeOffset: TimeInterval = 0

/// A `Bool` indicating whether the file has been completely scheduled into the player node.

var isFileSchedulingComplete = false

}

Before we implement the methods from the Streaming protocol, let's first define a default initializer as well as some helpful setup methods.

open class Streamer: Streaming {

...Properties

public init() {

setupAudioEngine()

}

func setupAudioEngine() {

// (1)

attachNodes()

// (2)

connectNodes()

// (3)

engine.prepare()

/// (4)

let interval = 1 / (readFormat.sampleRate / Double(readBufferSize))

Timer.scheduledTimer(withTimeInterval: interval / 2, repeats: true) {

[weak self] _ in

// (5)

self?.scheduleNextBuffer()

// (6)

self?.handleTimeUpdate()

// (7)

self?.notifyTimeUpdated()

}

}

open func attachNodes() {

engine.attach(playerNode)

}

open func connectNodes() {

engine.connect(playerNode, to: engine.mainMixerNode, format: readFormat)

}

func handleTimeUpdate() {

guard let currentTime = currentTime, let duration = duration else {

return

}

if currentTime >= duration {

try? seek(to: 0)

stop()

}

}

func notifyTimeUpdated() {

guard engine.isRunning, playerNode.isPlaying else {

return

}

guard let currentTime = currentTime else {

return

}

delegate?.streamer(self, updatedCurrentTime: currentTime)

}

}

When we initialize our Streamer we begin by attaching and connecting the nodes we need within the AVAudioEngine. Here's a breakdown of the steps:

- We attach the nodes we intend on using within the engine. In our basic Streamer this is just the playerNode that we will use to schedule the LPCM audio buffers from the reader. Since our time-pitch subclass will need to attach more nodes, we'll mark this method as open so our subclass can override it.
- We connect the nodes we've attached to the engine. Right now all we do is connect the playerNode to the main mixer node of the engine. Since our time-pitch subclass will need to connect the nodes a little differently, we'll also mark this method as open so our subclass can override it.
- We prepare the engine. This step preallocates many of the resources the engine needs so it can start playback immediately.
- We create a repeating timer on the current run loop that periodically schedules buffers onto the playerNode and updates the current time.
- Every time the timer fires we schedule a new buffer onto the playerNode. We will define this method in the next section after we implement the DownloadingDelegate methods.
- Every time the timer fires we check if the whole audio file has played by comparing the currentTime to the duration. If so, then we seek to the beginning of the data and stop playback.
- We notify the delegate that the current playback time has updated using the streamer(_:updatedCurrentTime:) method.
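To put numbers on step (4), here's the timer arithmetic evaluated with the defaults from the Streaming extension (a readBufferSize of 8192 frames and a 44.1 kHz readFormat). It's plain arithmetic, so nothing here depends on AVAudioEngine.

```swift
import Foundation

// Defaults from the Streaming protocol extension.
let readBufferSize: UInt32 = 8192
let sampleRate: Double = 44_100

// 1 / (sampleRate / frames) simplifies to frames / sampleRate:
// the number of seconds of audio one scheduled buffer holds.
let bufferDuration = 1 / (sampleRate / Double(readBufferSize))

// The timer fires at half that period, i.e. about twice per buffer of audio.
let timerInterval = bufferDuration / 2

print(String(format: "buffer: %.4f s, timer: %.4f s", bufferDuration, timerInterval))
// buffer: 0.1858 s, timer: 0.0929 s
```

Since each tick schedules at most one buffer, firing at half the buffer duration lets the streamer queue audio up to roughly twice as fast as it plays, provided enough data has been downloaded.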

Next we're going to define a reset() method to allow us to reset the state of the Streamer. We'll need this anytime we load a new url.

open class Streamer: Streaming {

...Properties

...Initializer + Setup

func reset() {

// (1)

stop()

// (2)

duration = nil

reader = nil

isFileSchedulingComplete = false

state = .stopped

// (3)

do {

parser = try Parser()

} catch {

print("Failed to create parser: \(error.localizedDescription)")

}

}

}

Here's a quick recap of what's happening here:

- We stop playback completely.
- We reset all values related to the current file.
- We create a new parser in anticipation of new audio data coming from the downloader. There is exactly one parser per audio file because it progressively produces audio packets that are related to the data format of the audio it initially started parsing.

Now that we have our setup and reset methods defined, let's go ahead and implement the required methods from the DownloadingDelegate protocol since the downloader property of the Streamer sets its delegate equal to the Streamer instance.

extension Streamer: DownloadingDelegate {

public func download(_ download: Downloading, completedWithError error: Error?) {

// (1)

if let error = error, let url = download.url {

delegate?.streamer(self, failedDownloadWithError: error, forURL: url)

}

}

public func download(_ download: Downloading, changedState downloadState: DownloadingState) {

// Nothing for now

}

public func download(_ download: Downloading, didReceiveData data: Data, progress: Float) {

// (2)

guard let parser = parser else {

return

}

// (3)

do {

try parser.parse(data: data)

} catch {

print("Parser failed to parse: \(error.localizedDescription)")

}

// (4)

if reader == nil, let _ = parser.dataFormat {

do {

reader = try Reader(parser: parser, readFormat: readFormat)

} catch {

print("Failed to create reader: \(error.localizedDescription)")

}

}

/// Update the progress UI

DispatchQueue.main.async {

[weak self] in

// (5)

self?.notifyDownloadProgress(progress)

// (6)

self?.handleDurationUpdate()

}

}

func notifyDownloadProgress(_ progress: Float) {

guard let url = url else {

return

}

delegate?.streamer(self, updatedDownloadProgress: progress, forURL: url)

}

func handleDurationUpdate() {

// (7)

if let newDuration = parser?.duration {

var shouldUpdate = false

if duration == nil {

shouldUpdate = true

} else if let oldDuration = duration, oldDuration < newDuration {

shouldUpdate = true

}

// (8)

if shouldUpdate {

self.duration = newDuration

notifyDurationUpdate(newDuration)

}

}

}

func notifyDurationUpdate(_ duration: TimeInterval) {

guard let _ = url else {

return

}

delegate?.streamer(self, updatedDuration: duration)

}

}

The majority of our focus in this section is on the download(_:didReceiveData:progress:) method, but let's do a quick recap of the main points above:

- When the download completes we check if it failed and, if so, we call the streamer(_:failedDownloadWithError:forURL:) method on the delegate property.
- As we're receiving data we first check if we have a non-nil parser. Note that every time we set a new url our reset() method gets called, which defines a new parser instance to use.
- We attempt to parse the binary audio data into audio packets using the parser.
- If the reader property is nil, we check if the parser has parsed enough data to have a dataFormat defined. Note that the Parser class we defined earlier uses the Audio File Stream Services, which progressively parses the binary audio data into properties first and then audio packets. Once we have a valid dataFormat on the parser, we can create an instance of the reader by passing in the parser and the readFormat we previously defined in the Streaming protocol. As mentioned before, the readFormat must be the LPCM format we expect to use in the playerNode.
- We notify the delegate that the download progress has updated using the streamer(_:updatedDownloadProgress:forURL:) method.
- We check if the value of the duration has changed. If so, we notify the delegate using the streamer(_:updatedDuration:) method.
- We check if the parser has its duration property defined. Since the parser is progressively parsing more and more audio data, its duration property may keep increasing (such as when we're dealing with live streams).
- If no duration was set yet, or the new duration value is greater than the previous one, we store it and notify the delegate of the Streamer using the streamer(_:updatedDuration:) method.
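The update rule in steps (7) and (8) is easier to reason about as a pure function. Here's a sketch; shouldUpdateDuration is a hypothetical extraction of the logic in handleDurationUpdate(), fed with made-up durations.

```swift
import Foundation

/// Hypothetical extraction of the rule in handleDurationUpdate():
/// accept the first duration, then only ever move it forward.
func shouldUpdateDuration(old: TimeInterval?, new: TimeInterval) -> Bool {
    guard let old = old else { return true }
    return old < new
}

var duration: TimeInterval? = nil
var notifications: [TimeInterval] = []
// Durations reported as more of the file is parsed (the third one repeats).
for reported in [12.5, 40.0, 40.0, 173.2] {
    if shouldUpdateDuration(old: duration, new: reported) {
        duration = reported
        notifications.append(reported) // stands in for notifyDurationUpdate(_:)
    }
}
print(notifications) // [12.5, 40.0, 173.2]
```

One consequence of this rule: if the parser's estimate ever shrinks, the Streamer keeps reporting the larger value.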

That completes our implementation of the DownloadingDelegate. Using our downloader we're able to pull the binary audio data corresponding to the url property and parse it using the parser. When our parser has enough data to define a dataFormat we create a reader we can then use for scheduling buffers onto the playerNode.

Let's go ahead and define the scheduleNextBuffer() method we used earlier in the Timer of the setupAudioEngine() method.

```swift
open class Streamer: Streaming {

    // ...Properties

    // ...Initializer + Setup

    // ...Reset

    func scheduleNextBuffer() {
        // (1)
        guard let reader = reader else {
            return
        }

        // (2)
        guard !isFileSchedulingComplete else {
            return
        }

        do {
            // (3)
            let nextScheduledBuffer = try reader.read(readBufferSize)

            // (4)
            playerNode.scheduleBuffer(nextScheduledBuffer)
        } catch ReaderError.reachedEndOfFile {
            // (5)
            isFileSchedulingComplete = true
        } catch {
            print("Reader failed to read: \(error.localizedDescription)")
        }
    }

}
```

Let's break this down:

- We first check that the `reader` is not `nil`. Remember the `reader` is only initialized when the `parser` has parsed enough of the downloaded audio data to have a valid `dataFormat` property.
- We check our `isFileSchedulingComplete` property to see if we've already scheduled the entire file. If so, all the buffers for the file have been scheduled onto the `playerNode` and our work is complete.
- We obtain the next buffer of LPCM audio from the `reader` by passing in the `readBufferSize` property we defined in the `Streaming` protocol. This is the step where the `reader` attempts to read the requested number of audio frames from the `parser`'s audio packets and convert them into LPCM audio, returning an `AVAudioPCMBuffer`.
- We schedule the next buffer of LPCM audio data (i.e. the `AVAudioPCMBuffer` returned from the `reader`'s `read(_:)` method) onto the `playerNode`.
- If the `reader` throws a `ReaderError.reachedEndOfFile` error then we set the `isFileSchedulingComplete` property to true so we know we shouldn't attempt to read any more buffers from the `reader`.
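To see the control flow of `scheduleNextBuffer()` in isolation, here's a sketch that swaps the real `Reader` and `AVAudioPlayerNode` for plain-Swift stand-ins. `MockReader` and `MockScheduler` are hypothetical types, the "buffers" are just frame ranges, the 4096-frame read size is an assumed value, and the sketch assumes the whole file has already been parsed, so the only error that matters is reaching the end of the file.

```swift
/// Only the end-of-file case is modeled in this sketch.
enum ReaderError: Error {
    case reachedEndOfFile
}

/// Hypothetical stand-in for the Reader: hands out consecutive ranges of
/// frames until the file is exhausted, then throws reachedEndOfFile.
final class MockReader {
    let totalFrames: Int
    private(set) var currentFrame = 0

    init(totalFrames: Int) {
        self.totalFrames = totalFrames
    }

    func read(_ frames: Int) throws -> Range<Int> {
        guard currentFrame < totalFrames else {
            throw ReaderError.reachedEndOfFile
        }
        let start = currentFrame
        currentFrame = min(start + frames, totalFrames)
        return start..<currentFrame
    }
}

/// Hypothetical scheduler that follows the same steps as scheduleNextBuffer().
final class MockScheduler {
    var reader: MockReader?
    var isFileSchedulingComplete = false
    var scheduled: [Range<Int>] = []   // stands in for playerNode.scheduleBuffer(_:)
    let readBufferSize = 4096          // assumed frames per read

    func scheduleNextBuffer() {
        guard let reader = reader else { return }          // (1) no reader yet
        guard !isFileSchedulingComplete else { return }    // (2) already done
        do {
            let next = try reader.read(readBufferSize)     // (3) read a chunk
            scheduled.append(next)                         // (4) "schedule" it
        } catch ReaderError.reachedEndOfFile {
            isFileSchedulingComplete = true                // (5) stop reading
        } catch {
            print("Reader failed to read: \(error)")
        }
    }
}

// Usage: the Streamer's Timer calls scheduleNextBuffer() repeatedly; here we loop.
let scheduler = MockScheduler()
scheduler.reader = MockReader(totalFrames: 10_000)
for _ in 0..<5 {
    scheduler.scheduleNextBuffer()
}
print(scheduler.scheduled.count, scheduler.isFileSchedulingComplete)
```

Because both guards make extra calls harmless, the timer can keep firing after the end of the file without any additional bookkeeping.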

Great! At this point we've implemented all the logic we need for scheduling the audio data specified by the `url` property onto our `playerNode` in the correct LPCM format. As a result, if the audio file at the specified `url` is in a compressed format such as MP3 or AAC, our `reader` will handle the format conversion required to read the compressed packets on the fly.

We're now ready to implement the playback methods from the Streaming protocol. As we implement these methods we'll go one-by-one to make sure we handle all edge cases. Let's start with play():

```swift
open class Streamer: Streaming {

    // ...Properties

    // ...Initializer + Setup

    // ...Reset

    // ...Schedule Buffers

    public func play() {
        // (1)
        guard !playerNode.isPlaying else {
            return
        }

        // (2)
        if !engine.isRunning {
            do {
                try engine.start()
            } catch {
                print("Engine failed to start: \(error.localizedDescription)")
                return
            }
        }

        // (3)
        playerNode.play()

        // (4)
        state = .playing
    }

}
```

Here's a recap of our `play()` method:

- We check whether the `playerNode` is already playing and, if so, we're already done.
- We check whether the `engine` is running and, if it's not, we start it up. Since we called `engine.prepare()` in our `setupAudioEngine()` method above, the engine's resources are already allocated, so this call should return quickly.
- We tell the `playerNode` to `play()`, which begins playing out any LPCM audio buffers that have been scheduled onto it.
- We update the state to `playing` (this will trigger the `streamer(_:changedState:)` method in the `delegate`).
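The note that updating the state "will trigger `streamer(_:changedState:)`" implies that `state` forwards every change to the delegate, most likely through a `didSet` observer. Here's a minimal, AVFoundation-free sketch of that assumed pattern together with the `play()`/`pause()` guards. `MockStreamer`, `StateDelegate`, and `StateLog` are hypothetical stand-ins, and the `isPlaying` flag replaces `playerNode.isPlaying`.

```swift
/// Playback states used in this sketch (mirroring .stopped/.paused/.playing).
enum StreamingState {
    case stopped
    case paused
    case playing
}

/// Hypothetical, trimmed-down delegate with only the state callback.
protocol StateDelegate: AnyObject {
    func streamer(_ streamer: MockStreamer, changedState state: StreamingState)
}

/// Hypothetical stand-in for the Streamer's playback bookkeeping.
final class MockStreamer {
    weak var delegate: StateDelegate?

    /// Replaces playerNode.isPlaying in this sketch.
    private(set) var isPlaying = false

    /// Every assignment forwards the new value to the delegate.
    var state: StreamingState = .stopped {
        didSet { delegate?.streamer(self, changedState: state) }
    }

    func play() {
        guard !isPlaying else { return }  // already playing: nothing to do
        isPlaying = true                  // stands in for playerNode.play()
        state = .playing
    }

    func pause() {
        guard isPlaying else { return }   // not playing: nothing to do
        isPlaying = false                 // stands in for playerNode.pause()
        state = .paused
    }
}

/// Usage: a delegate that records every state change it receives.
final class StateLog: StateDelegate {
    private(set) var states: [StreamingState] = []

    func streamer(_ streamer: MockStreamer, changedState state: StreamingState) {
        states.append(state)
    }
}

let stateLog = StateLog()
let mockStreamer = MockStreamer()
mockStreamer.delegate = stateLog
mockStreamer.play()
mockStreamer.play()   // ignored by the guard, so no second notification
mockStreamer.pause()
print(stateLog.states)
```

Putting the notification in the property observer means every code path that changes `state` informs the delegate, without each method having to remember to do so.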

Next we'll implement the pause method.

```swift
open class Streamer: Streaming {

    // ...Properties

    // ...Initializer + Setup

    // ...Reset

    // ...Schedule Buffers

    // ...Play

    public func pause() {
        // (1)
        guard playerNode.isPlaying else {
            return
        }

        // (2)
        playerNode.pause()
        engine.pause()

        // (3)
        state = .paused
    }

}
```

Nothing crazy here. Here's the recap:

- We check whether the `playerNode` is playing and, if it isn't, we're already done.
- We pause both the `playerNode` and the `engine`. When we pause the `playerNode` we're also pausing its `sampleTime`, which allows us to have an accurate `currentTime` property.
- We update the state to `paused` (this will trigger the `streamer(_:changedState:)` method in the `delegate`).
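Freezing `sampleTime` matters because the current time is derived from it. As a sketch, assuming `currentTime` is computed as the `currentTimeOffset` that `seek(to:)` stores (see below) plus the player's `sampleTime` divided by its sample rate, the arithmetic looks like this. `currentTime(sampleTime:sampleRate:timeOffset:)` is a hypothetical free function, not the framework's property.

```swift
/// Hypothetical helper: converts the player's sample clock into seconds
/// measured from the beginning of the file.
/// - sampleTime: frames on the player's timeline, or nil when no render time
///   is available yet. Pausing freezes this value; stopping resets it to 0.
/// - sampleRate: frames per second of the player's format.
/// - timeOffset: the position (in seconds) playback started from, e.g. after a seek.
func currentTime(sampleTime: Int64?, sampleRate: Double, timeOffset: Double) -> Double {
    // Without a valid clock reading, report the offset we started from.
    guard let sampleTime = sampleTime, sampleRate > 0 else {
        return timeOffset
    }
    return timeOffset + Double(sampleTime) / sampleRate
}

// Usage: 66,150 frames rendered at 44.1 kHz after seeking to 30 s.
let now = currentTime(sampleTime: 66_150, sampleRate: 44_100, timeOffset: 30)
print(now)
```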

Next let's implement the stop() method:

```swift
open class Streamer: Streaming {

    // ...Properties

    // ...Initializer + Setup

    // ...Reset

    // ...Schedule Buffers

    // ...Play

    // ...Pause

    public func stop() {
        // (1)
        downloader.stop()

        // (2)
        playerNode.stop()
        engine.stop()

        // (3)
        state = .stopped
    }

}
```

Again, we're not doing anything crazy here, but it's worth understanding why each step is necessary.

- We stop the `downloader`, which may currently be downloading audio data.
- We stop the `playerNode` and the `engine`. By doing this the `playerNode` will release all scheduled buffers and reset its `sampleTime` to 0. Calling `stop()` on the `engine` releases any resources allocated in the `engine.prepare()` method.
- We update the state to `stopped` (this will trigger the `streamer(_:changedState:)` method in the `delegate`).

Next let's implement our seek(to:) method. This will allow us to skip around to different parts of the file.

```swift
open class Streamer: Streaming {

    // ...Properties

    // ...Initializer + Setup

    // ...Reset

    // ...Schedule Buffers

    // ...Play

    // ...Pause

    // ...Stop

    public func seek(to time: TimeInterval) throws {
        // (1)
        guard let parser = parser, let reader = reader else {
            return
        }

        // (2)
        guard let frameOffset = parser.frameOffset(forTime: time),
            let packetOffset = parser.packetOffset(forFrame: frameOffset) else {
            return
        }

        // (3)
        currentTimeOffset = time

        // (4)
        isFileSchedulingComplete = false

        // (5)
        let isPlaying = playerNode.isPlaying

        // (6)
        playerNode.stop()

        // (7)
        do {
            try reader.seek(packetOffset)
        } catch {
            // Log error
            return
        }

        // (8)
        if isPlaying {
            playerNode.play()
        }

        // (9)
        delegate?.streamer(self, updatedCurrentTime: time)
    }

}
```

There's a little bit more going on here, but let's break it down:

- We make sure we have a `parser` and a `reader` because we'll need both to convert the new current time value into a packet offset and seek to it.
- We get the packet offset from the new current time value specified. We do this by first getting the frame offset using the `frameOffset(forTime:)` method on the `parser`. Then we use `packetOffset(forFrame:)` to get the packet from the frame offset. We could've created a `packetOffset(forTime:)` method in the `Parsing` protocol, but I wanted to use this as a chance to demonstrate the conversion to frames and packets we typically need to perform to seek to a time given in seconds.
- We store the new current time value as an offset to make sure our `currentTime` property has the proper offset from the beginning of the file. We do this because we're going to stop the `playerNode`, which causes its `sampleTime` to reset to 0, and we want to be sure we're reporting the `currentTime` after seek operations relative to the whole file.
- We reset the `isFileSchedulingComplete` property to false to make sure our `scheduleNextBuffer()` method starts scheduling audio buffers again relative to the new start position. Remember that when we call `stop()` on the `playerNode` it releases all internally scheduled buffers.
- We check whether the `playerNode` is currently playing so we can properly restart playback once the `seek` operation is complete.
- We call the `stop()` method on the `playerNode` to release all scheduled buffers and reset its `sampleTime`.
- We call the `seek(_:)` method on the `reader` so it sets its `currentPacket` property to the new packet offset. This ensures that all future calls to its `read(_:)` method start at the proper packet offset.
- If the `playerNode` was previously playing we immediately resume playback.
- We trigger the `streamer(_:updatedCurrentTime:)` method on the `delegate` to notify our receiver of the new current time value.
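The time, frame, and packet conversion in step 2 is simple arithmetic for formats where every packet holds the same number of frames. Here's a simplified sketch, assuming the frame offset is just time × sample rate and a constant `framesPerPacket` (1152 for MPEG-1 Layer III audio). The free functions below are hypothetical stand-ins, and the article's `Parser` may compute its offsets differently.

```swift
/// Hypothetical stand-in for frameOffset(forTime:): seconds -> audio frames.
func frameOffset(forTime time: Double, sampleRate: Double) -> Int64 {
    return Int64(time * sampleRate)
}

/// Hypothetical stand-in for packetOffset(forFrame:): audio frames -> packet
/// index. Integer division rounds down, so we land on the packet that
/// contains the requested frame. Returns nil when framesPerPacket is 0, which
/// in Core Audio signals a variable number of frames per packet.
func packetOffset(forFrame frame: Int64, framesPerPacket: Int64) -> Int64? {
    guard framesPerPacket > 0, frame >= 0 else { return nil }
    return frame / framesPerPacket
}

// Usage: seek to 10 s in a 44.1 kHz MP3.
let frame = frameOffset(forTime: 10, sampleRate: 44_100)
if let packet = packetOffset(forFrame: frame, framesPerPacket: 1152) {
    print("frame \(frame) -> packet \(packet)")
}
```

Because the division rounds down, playback in this example resumes at the start of packet 382 (frame 440,064, about 9.98 s), slightly before the requested 10 s. For most seeking UIs that small difference is inaudible.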

That completes our Streamer class! Click here to see the full source for the Streamer class and any extensions and custom enums we used above.

In the next section we're going to create a subclass of the Streamer that adds the time-pitch effect we promised in the example app.

In the previous section we demonstrated how to download, parse, and read back an audio file for playback in an AVAudioEngine. We created the Streamer class to coordinate the Downloader, Parser, and Reader classes so we could go from downloading binary audio data, to making audio packets from that data, to converting those audio packets into LPCM audio on the fly so we could schedule it onto an AVAudioPlayerNode. In general, those are the typical steps we'd have to implement to play back an audio file without any added effects.

Now, let's go ahead and take it one step further. Here's how we'd implement a subclass of the Streamer to include the time-pitch effect in our demo application.

```swift
// (1)
final class TimePitchStreamer: Streamer {

    /// (2)
    let timePitchNode = AVAudioUnitTimePitch()

    /// (3)
    var pitch: Float {
        get {
            return timePitchNode.pitch
        }
        set {
            timePitchNode.pitch = newValue
        }
    }

    /// (4)
    var rate: Float {
        get {
            return timePitchNode.rate
        }
        set {
            timePitchNode.rate = newValue
        }
    }

    // (5)
    override func attachNodes() {
        super.attachNodes()
        engine.attach(timePitchNode)
    }

    // (6)
    override func connectNodes() {
        engine.connect(playerNode, to: timePitchNode, format: readFormat)
        engine.connect(timePitchNode, to: engine.mainMixerNode, format: readFormat)
    }

}
```

Here's what we've done:

- First we create a `final` subclass of the `Streamer` called `TimePitchStreamer`. We mark the `TimePitchStreamer` as `final` because we don't want any other class to subclass it.
- To perform the time-pitch shifting effect we're going to use the `AVAudioUnitTimePitch` node. This effect node's role is analogous to that of the Audio Unit in the `AUGraph` we discussed earlier. As a matter of fact, the `AVAudioUnitTimePitch` node sounds exactly like the `kAudioUnitSubType_NewTimePitch` Audio Unit effect subtype.
- We expose a `pitch` property to provide a higher-level way of adjusting the pitch of the `timePitchNode`. This is optional since this value can be set directly on the `timePitchNode` instance, but it will be convenient in our UI.
- We expose a