Releases: huggingface/transformers

Safetensors serialization by default, DistilWhisper, Fuyu, Kosmos-2, SeamlessM4T, Owl-v2

New models

Distil-Whisper

Distil-Whisper is a distilled version of Whisper that is 6 times faster, 49% smaller, and performs within 1% word error rate (WER) on out-of-distribution data. It was proposed in the paper Robust Knowledge Distillation via Large-Scale Pseudo Labelling.

Distil-Whisper copies the entire encoder from Whisper, meaning it retains Whisper's robustness to different audio conditions. It only copies 2 decoder layers, which significantly reduces the time taken to auto-regressively generate text tokens:

Distil-Whisper is MIT licensed and directly available in the Transformers library with chunked long-form inference, Flash Attention 2 support, and Speculative Decoding. For details on using the model, refer to the following instructions.

Joint work from @sanchit-gandhi, @patrickvonplaten and @srush.

- [Assistant Generation] Improve Encoder Decoder by @patrickvonplaten in #26701

- [WhisperForCausalLM] Add WhisperForCausalLM for speculative decoding by @patrickvonplaten in #27195

- [Whisper, Bart, MBart] Add Flash Attention 2 by @patrickvonplaten in #27203

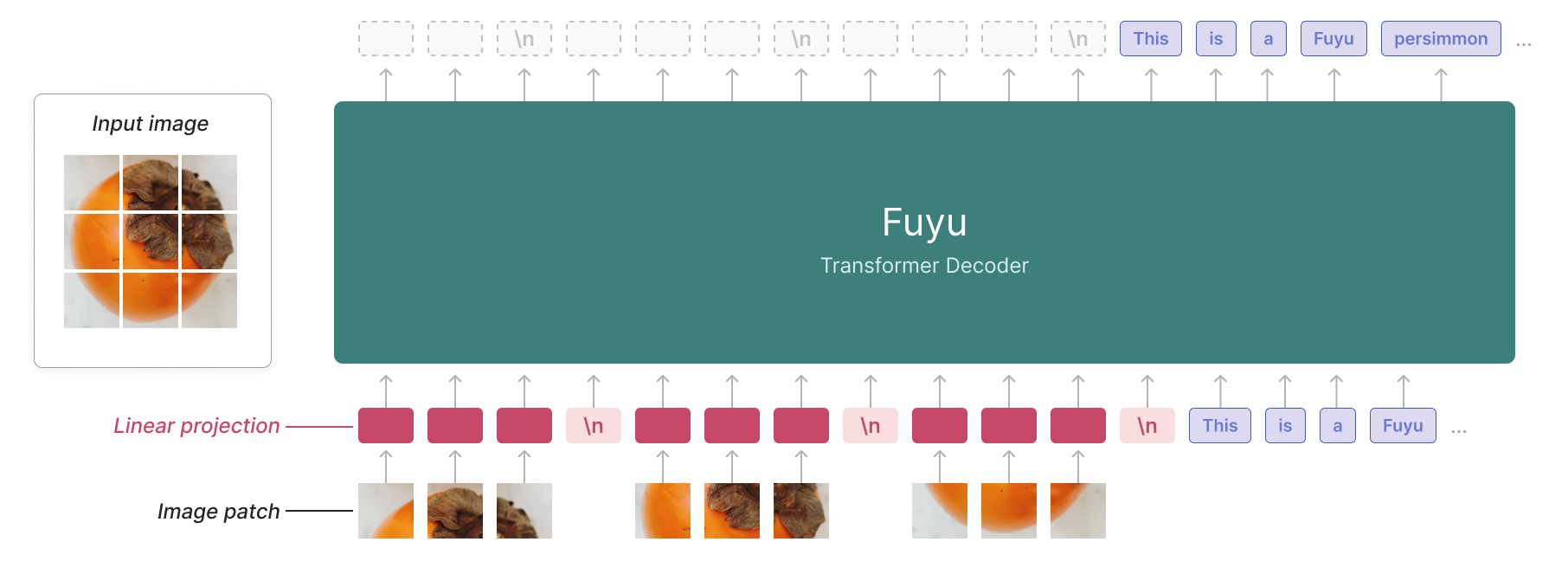

Fuyu

The Fuyu model was created by ADEPT, and authored by Rohan Bavishi, Erich Elsen, Curtis Hawthorne, Maxwell Nye, Augustus Odena, Arushi Somani, Sağnak Taşırlar.

The authors introduced Fuyu-8B, a decoder-only multimodal model based on the classic transformers architecture, with query and key normalization. A linear encoder is added to create multimodal embeddings from image inputs.

By treating image tokens like text tokens and using a special image-newline character, the model knows when an image line ends. Image positional embeddings are removed. This avoids the need for different training phases for various image resolutions. With 8 billion parameters and licensed under CC-BY-NC, Fuyu-8B is notable for its ability to handle both text and images, its impressive context size of 16K, and its overall performance.

Joint work from @molbap, @pcuenca, @amyeroberts, @ArthurZucker

SeamlessM4T

The SeamlessM4T model was proposed in SeamlessM4T — Massively Multilingual & Multimodal Machine Translation by the Seamless Communication team from Meta AI.

SeamlessM4T is a collection of models designed to provide high quality translation, allowing people from different linguistic communities to communicate effortlessly through speech and text.

SeamlessM4T enables multiple tasks without relying on separate models:

- Speech-to-speech translation (S2ST)

- Speech-to-text translation (S2TT)

- Text-to-speech translation (T2ST)

- Text-to-text translation (T2TT)

- Automatic speech recognition (ASR)

SeamlessM4TModel can perform all the above tasks, but each task also has its own dedicated sub-model.

Kosmos-2

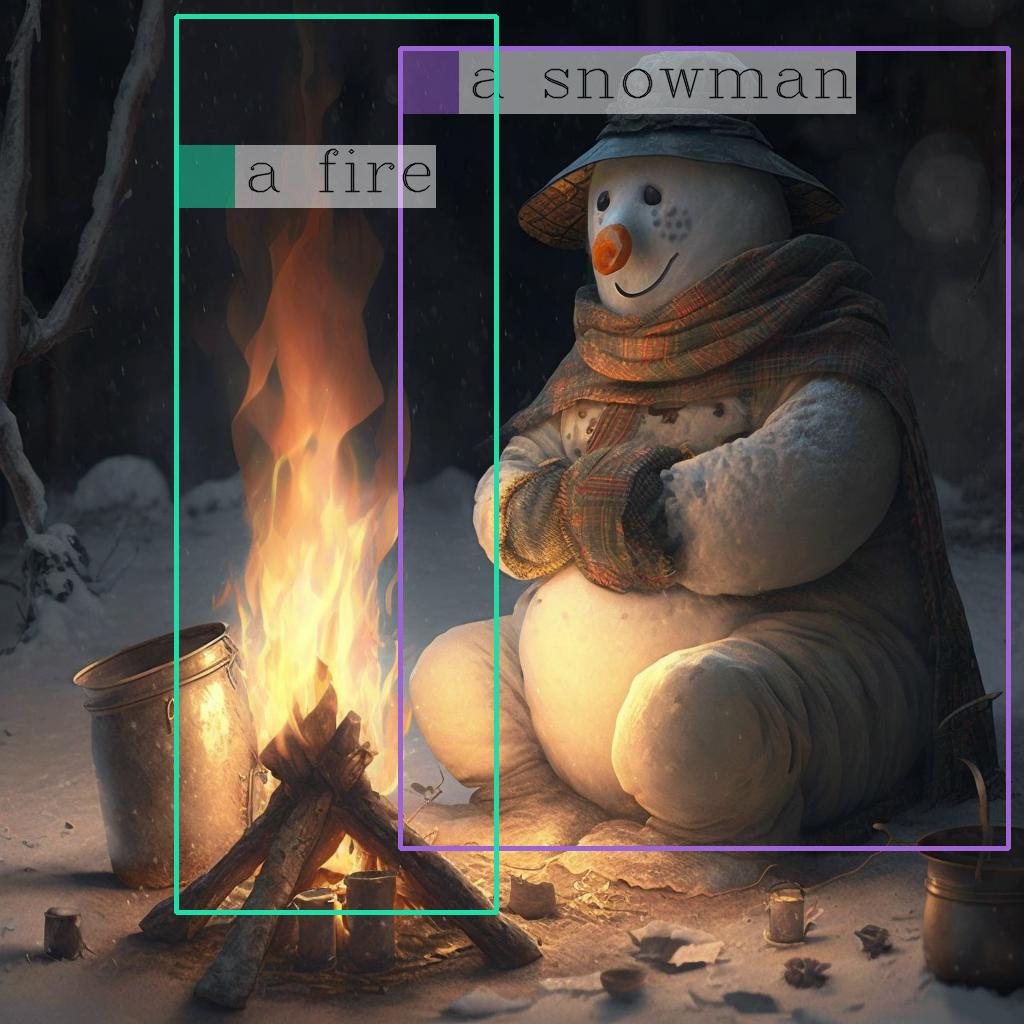

The KOSMOS-2 model was proposed in Kosmos-2: Grounding Multimodal Large Language Models to the World by Zhiliang Peng, Wenhui Wang, Li Dong, Yaru Hao, Shaohan Huang, Shuming Ma, Furu Wei.

KOSMOS-2 is a Transformer-based causal language model and is trained using the next-word prediction task on a web-scale dataset of grounded image-text pairs GRIT. The spatial coordinates of the bounding boxes in the dataset are converted to a sequence of location tokens, which are appended to their respective entity text spans (for example, a snowman followed by <patch_index_0044><patch_index_0863>). The data format is similar to “hyperlinks” that connect the object regions in an image to their text span in the corresponding caption.

Owl-v2

OWLv2 was proposed in Scaling Open-Vocabulary Object Detection by Matthias Minderer, Alexey Gritsenko, Neil Houlsby. OWLv2 scales up OWL-ViT using self-training, which uses an existing detector to generate pseudo-box annotations on image-text pairs. This results in large gains over the previous state-of-the-art for zero-shot object detection.

- Add OWLv2, bis by @NielsRogge in #26668

🚨🚨🚨 Safetensors by default for torch serialization 🚨🚨🚨

Version v4.35.0 now puts safetensors serialization by default. This is a significant change targeted at making users of the Hugging Face Hub, transformers, and any downstream library leveraging it safer.

The safetensors library is a safe serialization framework for machine learning tensors. It has been audited and will become the default serialization framework for several organizations (Hugging Face, EleutherAI, Stability AI).

It was already the default loading mechanism since v4.30.0 and would therefore already default to loading model.safetensors files instead of pytorch_model.bin if these were present in the repository.

With v4.35.0, any call to save_pretrained for torch models will now save a safetensors file. This safetensors file is in the PyTorch format, but can be loaded in TensorFlow and Flax models alike.

- Loading a

safetensorsfile and having a warning mentioning missing weights unexpectedly - Obtaining completely wrong/random results at inference after loading a pretrained model that you have saved in

safetensors

If you wish to continue saving files in the .bin format, you can do so by specifying safe_serialization=False in all your save_pretrained calls.

- Safetensors serialization by default by @LysandreJik in #27064

Chat templates

Chat templates have been expanded with the addition of the add_generation_prompt argument to apply_chat_template(). This has also enabled us to rework the ConversationalPipeline class to use chat templates. Any model with a chat template is now automatically usable through ConversationalPipeline.

- Add add_generation_prompt argument to apply_chat_template by @Rocketknight1 in #26573

- Conversation pipeline fixes by @Rocketknight1 in #26795

Guides

Two new guides on LLMs were added the library:

- [docs] LLM prompting guide by @MKhalusova in #26274

- [docs] Optimizing LLMs by @patrickvonplaten in #26058

Quantization

Exllama-v2 integration

Exllama-v2 provides better GPTQ kernel for higher throughput and lower latency for GPTQ models. The original code can be found here.

You will need the latest versions of optimum and auto-gptq. Read more about the integration here.

AWQ integration

AWQ is a new and popular quantization scheme, already used in various libraries such as TGI, vllm, etc. and known to be faster than GPTQ models according to some benchmarks. The original code can be found here and here you can read more about the original paper.

We support AWQ inference with original kernels as well as kernels provided through autoawq package that you can simply install with pip install autoawq.

- [

core/Quantization] AWQ integration by @younesbelkada in #27045

We also provide an example script on how to push quantized weights on the hub on the original repository.

Read more about the benchmarks and the integration here

GPTQ on CPU !

You can now run GPTQ models on CPU using the latest version of auto-gptq thanks to @vivekkhandelwal1 !

- Add support for loading GPTQ models on CPU by @vivekkhandelwal1 in #26719

Attention mask refactor

We refactored the attention mask logic for major models in transformers. For instance, we removed padding_mask argument which was ambiguous for some users

- Remove ambiguous

padding_maskand instead use a 2D->4D Attn Mask Mapper by @patrickvonplaten in #26792 - [Attention Mask] Refactor all encoder-decoder attention mask by @patrickvonplaten in #27086

Flash Attention 2 for more models + quantizat...

Patch release: v4.34.1

v4.34: Mistral, Persimmon, Prompt templating, Flash Attention 2, Tokenizer refactor

New models

Mistral

Mistral-7B-v0.1 is a decoder-based LM with the following architectural choices:

- Sliding Window Attention - Trained with 8k context length and fixed cache size, with a theoretical attention span of 128K tokens

- GQA (Grouped Query Attention) - allowing faster inference and lower cache size.

- Byte-fallback BPE tokenizer - ensures that characters are never mapped to out-of-vocabulary tokens.

Persimmon

The authors introduced Persimmon-8B, a decoder model based on the classic transformers architecture, with query and key normalization. Persimmon-8B is a fully permissively licensed model with approximately 8 billion parameters, released under the Apache license. Some of the key attributes of Persimmon-8B are long context size (16K), performance, and capabilities for multimodal extensions.

- [

Persimmon] Add support for persimmon by @ArthurZucker in #26042

BROS

BROS stands for BERT Relying On Spatiality. It is an encoder-only Transformer model that takes a sequence of tokens and their bounding boxes as inputs and outputs a sequence of hidden states. BROS encode relative spatial information instead of using absolute spatial information.

- Add BROS by @jinhopark8345 in #23190

ViTMatte

ViTMatte leverages plain Vision Transformers for the task of image matting, which is the process of accurately estimating the foreground object in images and videos.

- Add ViTMatte by @NielsRogge in #25843

Nougat

Nougat uses the same architecture as Donut, meaning an image Transformer encoder and an autoregressive text Transformer decoder to translate scientific PDFs to markdown, enabling easier access to them.

- Add Nougat by @NielsRogge and @molbap in #25942

Prompt templating

We've added a new template feature for chat models. This allows the formatting that a chat model was trained with to be saved with the model, ensuring that users can exactly reproduce that formatting when they want to fine-tune the model or use it for inference. For more information, see our template documentation.

- Overhaul Conversation class and prompt templating by @Rocketknight1 in #25323

🚨🚨 Tokenizer refactor

- [

Tokenizer] attemp to fix add_token issues by @ArthurZucker in #23909 - Nit-added-tokens by @ArthurZucker in #26538 adds some fix to #23909 .

🚨Workflow Changes 🚨:

These are not breaking changes per se but rather bugfixes. However, we understand that this may result in some workflow changes so we highlight them below.

- unique_no_split_tokens attribute removed and not used in the internal logic

- sanitize_special_tokens() follows a deprecation cycle and does nothing

- All attributes in SPECIAL_TOKENS_ATTRIBUTES are stored as AddedTokens and no strings.

- loading a slow from a fast or a fast from a slow will no longer raise and error if the tokens added don't have the correct index. This is because they will always be added following the order of the added_tokens but will correct mistakes in the saved vocabulary if there are any. (And there are a lot in old format tokenizers)

- the length of a tokenizer is now max(set(self.get_vocab().keys())) accounting for holes in the vocab. The vocab_size no longer takes into account the added vocab for most of the tokenizers (as it should not). Mostly breaking for T5

- Adding a token using tokenizer.add_tokens([AddedToken("hey", rstrip=False, normalized=True)]) now takes into account rstrip, lstrip, normalized information.

- added_tokens_decoder holds AddedToken, not strings.

- add_tokens() for both fast and slow will always be updated if the token is already part of the vocab, allowing for custom stripping.

- initializing a tokenizer form scratch will now add missing special tokens to the vocab.

- stripping is not always done for special tokens! 🚨 Only if the AddedToken has lstrip=True and rstrip=True

- fairseq_ids_to_tokens attribute removed for Barthez (was not used)

➕ Most visible features:

- printing a tokenizer now shows

tokenizer.added_tokens_decoderfor both fast and slow tokenizers. Moreover, additional tokens that were already part of the initial vocab are also found there. - faster

from_pretrained, fasteradd_tokensbecause special and non special can be mixed together and the trie is not always rebuilt. - faster encode/decode with caching mechanism for

added_tokens_decoder/encoder. - information is fully saved in the

tokenizer_config.json

For any issues relating to this, make sure to open a new issue and ping @ArthurZucker.

Flash Attention 2

FA2 support added to transformers for most popular architectures (llama, mistral, falcon) architectures actively being contributed in this issue (#26350). Simply pass use_flash_attention_2=True when calling from_pretrained

In the future, PyTorch will support Flash Attention 2 through torch.scaled_dot_product_attention, users would be able to benefit from both (transformers core & transformers + SDPA) implementations of Flash Attention-2 with simple changes (model.to_bettertransformer() and force-dispatch the SDPA kernel to FA-2 in the case of SDPA)

- [

core] Integrate Flash attention 2 in most used models by @younesbelkada in #25598

For our future plans regarding integrating F.sdpa from PyTorch in core transformers, see here: #26557

Lazy import structure

Support for lazy loading integration libraries has been added. This will drastically speed up importing transformers and related object from the library.

Example before this change:

2023-09-11 11:07:52.010179: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

python3 -c "from transformers import CLIPTextModel" 3.31s user 3.06s system 220% cpu 2.893 total

After this change:

python3 -c "from transformers import CLIPTextModel" 1.70s user 1.49s system 220% cpu 1.447 total

- [Core] Add lazy import structure to imports by @patrickvonplaten in #26090

Bugfixes and improvements

- Fix typo by @susnato in #25966

- Fix Detr CI by @ydshieh in #25972

- Fix

test_load_img_url_timeoutby @ydshieh in #25976 - nn.Identity is not required to be compatible with PyTorch < 1.1.0 as the minimum PyTorch version we currently support is 1.10.0 by @statelesshz in #25974

- Add

Pop2Pianospace demo. by @susnato in #25975 - fix typo by @kai01ai in #25981

- Use main in conversion script by @ydshieh in #25973

- [doc] Always call it Agents for consistency by @julien-c in #25958

- Update RAG README.md with correct path to examples/seq2seq by @tleyden in #25953

- Update training_args.py to remove the runtime error by @sahel-sh in #25920

- Trainer: delegate default generation values to

generation_configby @gante in #25987 - Show failed tests on CircleCI layout in a better way by @ydshieh in #25895

- Patch with accelerate xpu by @abhilash1910 in #25714

- PegasusX add _no_split_modules by @andreeahedes in #25933

- Add TFDebertaV2ForMultipleChoice by @raghavanone in #25932

- deepspeed resume from ckpt fixes and adding support for deepspeed optimizer and HF scheduler by @pacman100 in #25863

- [Wav2Vec2 Conformer] Fix inference float16 by @sanchit-gandhi in #25985

- Add LLaMA resources by @eenzeenee in #25859

- [

CI] Fix red CI and ERROR failed should show by @ArthurZucker in #25995 - [

VITS] tokenizer integration test: fix revision did not exist by @ArthurZucker in #25996 - Fix Mega chunking error when using decoder-only model by @tanaymeh in #25765

- save space when converting hf model to megatron model. by @flower-with-safe in #25950

- Update README.md by @NinoRisteski in #26003

- Falcon: fix revision propagation by @LysandreJik in #26006

- TF-OPT attention mask fixes by @Rocketknight1 in #25238

- Fix small typo README.md by @zspo in #25934

- 🌐[i18n-KO] Translated

llm_tutorial.mdto Korean by @harheem in #25791 - Remove Falcon from undocumented list by @Rocketknight1 in #26008

- modify context length for GPTQ + version bump by @SunMarc in #25899

- Fix err with FSDP by @muellerzr in #25991

- fix _resize_token_embeddings will set lm head size to 0 when enabled deepspeed zero3 by @kai01ai in #26024

- Fix CircleCI config by @ydshieh in #26023

- Add

tgsspeed metrics by @CokeDong in #25858 - [VITS] Fix nightly tests by @sanchit-gandhi in #25986

- Added HerBERT to README.md by @Muskan011 in #26020

- Fix vilt config docstring parameter to match value in init by @raghavanone in #26017

- Punctuation fix by @kwonmha in #26025

- Try to fix training Loss inconsistent after resume from old checkpoint by @dumpmemory in #25872

- Fix Dropout Implementation in Graphormer by @alexanderkrauck in #24817

- Update missing docs on

activation_dropoutand fix DropOut docs for SEW-D by @gau-nernst in #26031 - Skip warning if tracing with dynamo by @angelayi in #25581

- 🌐 [i18n-KO] Translated

llama.mdto Korean by @harheem in #26044 - [

CodeLlamaTokenizerFast] Fix fixset_infilling_processorto properly reset by @ArthurZucker in #26041 - [

CITests] skip failing tests until #26054 is merged by @ArthurZucker in #26063 - only main process should call _save on deepspeed zero3 by @zjjMaiMai in #25959

- docs: update link huggingface map by @pphuc25 in #26077

- docs: add space to docs by @pphuc25 in #26067

- [

core] Import tensorflow inside relevant methods...

Patch release: v4.33.3

Patch release: v4.33.2

Falcon, Code Llama, ViTDet, DINO v2, VITS

Falcon

Falcon is a class of causal decoder-only models built by TII. The largest Falcon checkpoints have been trained on >=1T tokens of text, with a particular emphasis on the RefinedWeb corpus. They are made available under the Apache 2.0 license.

Falcon’s architecture is modern and optimized for inference, with multi-query attention and support for efficient attention variants like FlashAttention. Both ‘base’ models trained only as causal language models as well as ‘instruct’ models that have received further fine-tuning are available.

- Falcon port #24523 by @Rocketknight1

- Falcon: Add RoPE scaling by @gante in #25878

- Add proper Falcon docs and conversion script by @Rocketknight1 in #25954

- Put Falcon back by @LysandreJik in #25960

- [

Falcon] Remove SDPA for falcon to support earlier versions of PyTorch (< 2.0) by @younesbelkada in #25947

Code Llama

Code Llama, is a family of large language models for code based on Llama 2, providing state-of-the-art performance among open models, infilling capabilities, support for large input contexts, and zero-shot instruction following ability for programming tasks.

- [

CodeLlama] Add support forCodeLlamaby @ArthurZucker in #25740 - [

CodeLlama] Fix CI by @ArthurZucker in #25890

ViTDet

ViTDet reuses the ViT model architecture, adapted to object detection.

- Add ViTDet by @NielsRogge in #25524

DINO v2

DINO v2 is the next iteration of the DINO model. It is added as a backbone class, allowing it to be re-used in downstream models.

- [DINOv2] Add backbone class by @NielsRogge in #25520

VITS

VITS (Variational Inference with adversarial learning for end-to-end Text-to-Speech) is an end-to-end speech synthesis model that predicts a speech waveform conditional on an input text sequence. It is a conditional variational autoencoder (VAE) comprised of a posterior encoder, decoder, and conditional prior.

Breaking changes:

- 🚨🚨🚨 [

Refactor] Move third-party related utility files intointegrations/folder 🚨🚨🚨 by @younesbelkada in #25599

Moves all third party libs (outside HF ecosystem) related utility files inside integrations/ instead of having them in transformers directly.

In order to get the previous usage you should be changing your call to the following:

- from transformers.deepspeed import HfDeepSpeedConfig

+ from transformers.integrations import HfDeepSpeedConfigBugfixes and improvements

- [DOCS] MusicGen Docs Update by @xNul in #25510

- [MINOR:TYPO] by @cakiki in #25646

- Pass the proper token to PEFT integration in auto classes by @sgugger in #25649

- Put IDEFICS in the right section of the doc by @sgugger in #25650

- TF 2.14 compatibility by @Rocketknight1 in #25630

- Fix bloom add prefix space by @ArthurZucker in #25652

- removing unnecesssary extra parameter by @rafaelpadilla in #25643

- Adds

TRANSFORMERS_TEST_BACKENDby @vvvm23 in #25655 - stringify config by @AleksanderWWW in #25637

- Add input_embeds functionality to gpt_neo Causal LM by @gaasher in #25659

- Update doc toctree by @ydshieh in #25661

- Add Llama2 resources by @wonhyeongseo in #25531

- [

SPM] PatchspmLlama and T5 by @ArthurZucker in #25656 - [

GPTNeo] Add input_embeds functionality to gpt_neo Causal LM by @ArthurZucker in #25664 - fix wrong path in some doc by @ydshieh in #25658

- Remove

utils/documentation_tests.txtby @ydshieh in #25680 - Prevent Dynamo graph fragmentation in GPTNeoX with torch.baddbmm fix by @norabelrose in #24941

⚠️ [CLAP] Fix dtype of logit scales in init by @sanchit-gandhi in #25682- Sets the stalebot to 10 AM CEST by @LysandreJik in #25678

- Fix

pad_tokencheck condition by @ydshieh in #25685 - [DOCS] Added docstring example for EpsilonLogitsWarper #24783 by @sanjeevk-os in #25378

- correct resume training steps number in progress bar by @pphuc25 in #25691

- Generate: general test for decoder-only generation from

inputs_embedsby @gante in #25687 - Fix typo in

configuration_gpt2.pyby @susnato in #25676 - fix ram efficient fsdp init by @pacman100 in #25686

- [

LlamaTokenizer] make unk_token_length a property by @ArthurZucker in #25689 - Update list of persons to tag by @sgugger in #25708

- docs: Resolve typos in warning text by @tomaarsen in #25711

- Fix failing

test_batch_generationfor bloom by @ydshieh in #25718 - [

PEFT] Fix peft version by @younesbelkada in #25710 - Fix number of minimal calls to the Hub with peft integration by @sgugger in #25715

- [

AutoGPTQ] Add correct installation of GPTQ library + fix slow tests by @younesbelkada in #25713 - Generate: nudge towards

do_sample=Falsewhentemperature=0.0by @gante in #25722 - [

from_pretrained] Simpler code for peft by @ArthurZucker in #25726 - [idefics] idefics-9b test use 4bit quant by @stas00 in #25734

- ImageProcessor - check if input pixel values between 0-255 by @amyeroberts in #25688

- [

from_pretrained] Fix failing PEFT tests by @younesbelkada in #25733 - [ASR Pipe Test] Fix CTC timestamps error message by @sanchit-gandhi in #25727

- 🌐 [i18n-KO] Translated

visual_question_answering.mdto Korean by @wonhyeongseo in #25679 - [

PEFT] Fix PeftConfig save pretrained when callingadd_adapterby @younesbelkada in #25738 - fixed typo in speech encoder decoder doc by @asusevski in #25745

- Add FlaxCLIPTextModelWithProjection by @pcuenca in #25254

- Generate: add missing logits processors docs by @gante in #25653

- [DOCS] Add example for HammingDiversityLogitsProcessor by @jessthebp in #25481

- Generate: logits processors are doctested and fix broken doctests by @gante in #25692

- [CLAP] Fix logit scales dtype for fp16 by @sanchit-gandhi in #25754

- [

Sentencepiece] make surelegacydo not requireprotobufby @ArthurZucker in #25684 - fix encoder hook by @SunMarc in #25735

- Docs: fix indentation in

HammingDiversityLogitsProcessorby @gante in #25756 - Add type hints for several pytorch models (batch-3) by @nablabits in #25705

- Correct attention mask dtype for Flax GPT2 by @liutianlin0121 in #25636

- fix a typo in docsting by @statelesshz in #25759

- [idefics] small fixes by @stas00 in #25764

- Add docstrings and fix VIVIT examples by @Geometrein in #25628

- [

LlamaFamiliy] add a tip about dtype by @ArthurZucker in #25794 - Add type hints for several pytorch models (batch-2) by @nablabits in #25557

- Add type hints for pytorch models (final batch) by @nablabits in #25750

- Add type hints for several pytorch models (batch-4) by @nablabits in #25749

- [idefics] fix vision's

hidden_actby @stas00 in #25787 - Arde/fsdp activation checkpointing by @arde171 in #25771

- Fix incorrect Boolean value in deepspeed example by @tmm1 in #25788

- fixing name position_embeddings to object_queries by @Lorenzobattistela in #24652

- Resolving Attribute error when using the FSDP ram efficient feature by @pacman100 in #25820

- [

Docs] More clarifications on BT + FA by @younesbelkada in #25823 - fix register by @zspo in #25779

- Minor wording changes for Code Llama by @osanseviero in #25815

- [

LlamaTokenizer]tokenizenits. by @ArthurZucker in #25793 - fix warning trigger for embed_positions when loading xglm by @MattYoon in #25798

- 🌐 [i18n-KO] Translated peft.md to Korean by @nuatmochoi in #25706

- 🌐 [i18n-KO]

model_memory_anatomy.mdto Korean by @mjk0618 in #25755 - Error with checking args.eval_accumulation_steps to gather tensors by @chaumng in #25819

- Tests: detect lines removed from "utils/not_doctested.txt" and doctest ALL generation files by @gante in #25763

- 🌐 [i18n-KO] Translated

add_new_pipeline.mdto Korean by @heuristicwave in #25498 - 🌐 [i18n-KO] Translated

community.mdto Korean by @sim-so in #25674 - 🤦update warning to If you want to use the new behaviour, set `legacy=… by @ArthurZucker in #25833

- update remaining

Pop2Pianocheckpoints by @susnato in #25827 - [AutoTokenizer] Add data2vec to mapping by @sanchit-gandhi in #25835

- MaskFormer,Mask2former - reduce memory load by @amyeroberts in #25741

- Support loading base64 images in pipelines by @InventivetalentDev in #25633

- Update README.md by @NinoRisteski in #25834

- Generate: models with custom

generate()returnTrueincan_generate()by @gante in #25838 - Update README.md by @NinoRisteski in #25832

- minor typo fix in PeftAdapterMixin docs by @tmm1 in #25829

- Add flax installation in daily doctest workflow by @ydshieh in #25860

- Add Blip2 model in VQA pipeline by @jpizarrom in #25532

- Remote tools are turned off by @LysandreJik in #25867

- Fix imports by @ydshieh in #25869

- fix max_memory for bnb by @SunMarc in #25842

- Docs: fix example failing doctest in

generation_strategies.mdby @gante in #25874 - pin pandas==2.0.3 by @ydshieh in #25875

- Reduce CI output by @ydshieh in #25876

- [ViTDet] Fix doc tests by @NielsRogge in #25880

- For xla tensors, use an alternative way to get a unique id by @qihqi in #25802

- fix ds z3 checkpointing when

stage3_gather_16bit_weights_on_model_save=Falseby @pacman100 in #25817 - Modify efficient GPU training doc with now-available adamw_bnb_8bit optimizer by @veezbo in #25807

- [

TokenizerFast]can_save_slow_tokenizeras a property for whenvocab_file's folder was removed by @ArthurZucker in #25626 - Save image_processor while saving pipeline (ImageSegmentationPipeline) by @raghavanone in #25884

- [

InstructBlip] FINAL Fix instructblip test by @younesbelkada in #25887 - Add type hints for tf models batch 1 by @nablabits in #25853

- Update

setup.pyby @ydshieh in #25893 - Smarter check for

is_tensorby @sgugger in #25871 - remove torch_dtype override by @SunMarc in #25894

- fix FSDP model resume optimizer & schedu...

Patch release: v4.32.1

Patch release including several patches from v4.31.0, listed below:

IDEFICS, GPTQ Quantization

IDEFICS

The IDEFICS model was proposed in OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents by Hugo Laurençon, Lucile Saulnier, Léo Tronchon, Stas Bekman, Amanpreet Singh, Anton Lozhkov, Thomas Wang, Siddharth Karamcheti, Alexander M. Rush, Douwe Kiela, Matthieu Cord, Victor Sanh

IDEFICS is the first open state-of-the-art visual language model at the 80B scale!

The model accepts arbitrary sequences of image and text and produces text, similarly to a multimodal ChatGPT.

Blogpost: hf.co/blog/idefics

Playground: HuggingFaceM4/idefics_playground

MPT

MPT has been added and is now officially supported within Transformers. The repositories from MosaicML have been updated to work best with the model integration within Transformers.

- [

MPT] Add MosaicML'sMPTmodel to transformers by @ArthurZucker & @younesbelkada in #24629

GPTQ Integration

GPTQ quantization is now supported in Transformers, through the optimum library. The backend relies on the auto_gptq library, from which we use the GPTQ and QuantLinear classes.

See below for an example of the API, quantizing a model using the new GPTQConfig configuration utility.

from transformers import AutoModelForCausalLM, AutoTokenizer, GPTQConfig

model_name = "facebook/opt-125m"

tokenizer = AutoTokenizer.from_pretrained(model_name)

config = GPTQConfig(bits=4, dataset = "c4", tokenizer=tokenizer, group_size=128, desc_act=False)

# works also with device_map (cpu offload works but not disk offload)

model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float16, quantization_config=config)Most models under TheBloke namespace with the suffix GPTQ should be supported, for example, to load a GPTQ quantized model on TheBloke/Llama-2-13B-chat-GPTQ simply run (after installing latest optimum and auto-gptq libraries):

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "TheBloke/Llama-2-13B-chat-GPTQ"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)For more information about this feature, we recommend taking a look at the following announcement blogpost: https://huggingface.co/blog/gptq-integration

Pipelines

A new pipeline, dedicated to text-to-audio and text-to-speech models, has been added to Transformers. It currently supports the 3 text-to-audio models integrated into transformers: SpeechT5ForTextToSpeech, MusicGen and Bark.

See below for an example:

from transformers import pipeline

classifier = pipeline(model="suno/bark")

output = pipeline("Hey it's HuggingFace on the phone!")

audio = output["audio"]

sampling_rate = output["sampling_rate"]Classifier-Free Guidance decoding

Classifier-Free Guidance decoding is a text generation technique developed by EleutherAI, announced in this paper. With this technique, you can increase prompt adherence in generation. You can also set it up with negative prompts, ensuring your generation doesn't go in specific directions. See its docs for usage instructions.

- add CFG for .generate() by @Vermeille in #24654

Task guides

A new task guide going into Visual Question Answering has been added to Transformers.

- VQA task guide by @MKhalusova in #25244

Model deprecation

We continue the deprecation of models that was introduced in #24787.

By deprecating, we indicate that we will stop maintaining such models, but there is no intention of actually removing those models and breaking support for them (they might one day move into a separate repo/on the Hub, but we would still add the necessary imports to make sure backward compatibility stays). The main point is that we stop testing those models. The usage of the models drives this choice and aims to ease the burden on our CI so that it may be used to focus on more critical aspects of the library.

- Deprecate unused OpenLlama architecture by @tomaarsen in #24922

Translation Efforts

There are ongoing efforts to translate the transformers' documentation in other languages. These efforts are driven by groups independent to Hugging Face, and their work is greatly appreciated further to lower the barrier of entry to ML and Transformers.

If you'd like to kickstart such an effort or help out on an existing one, please feel free to reach out by opening an issue.

- 🌐 [i18n-KO] Translated

tasks/document_question_answering.mdto Korean by @jungnerd in #24588 - 🌐 [i18n-KO] Fixed Korean and English

quicktour.mdby @wonhyeongseo in #24664 - 🌐 [i18n-KO] Updated Korean

serialization.mdby @wonhyeongseo in #24686 - 🌐 [i18n-KO] Translated performance.md to Korean by @augustinLib in #24883

- 🌐 [i18n-KO] Translated

testing.mdto Korean by @Sunmin0520 in #24900 - 🌐 [i18n-KO] Translated

perf_train_cpu.mdto Korean by @seank021 in #24911 - 🌐 [i18n-KO] Translated

<tf_xla>.mdto Korean by @54data in #24904 - 🌐 [i18n-KO] Translated

perf_hardware.mdto Korean by @augustinLib in #24966 - 🌐 [i18n-KO] Translated

hpo_train.mdto Korean by @harheem in #24968 - 🌐 [i18n-KO] Translated

perf_infer_cpu.mdto Korean by @junejae in #24920 - 🌐 [i18n-KO] Translated pipeline_webserver.md to Korean by @kihoon71 in #24828

- 🌐 [i18n-KO] Translated

transformers_agents.mdto Korean by @sim-so in #24881 - 🌐 [i18n-KO] Translated

perf_infer_gpu_many.mdto Korean by @heuristicwave in #24943 - 🌐 [i18n-KO] Translated

perf_infer_gpu_one.mdto Korean by @eenzeenee in #24978 - 🌐 [i18n-KO] Translated

add_tensorflow_model.mdto Korean by @keonju2 in #25017 - 🌐 [i18n-KO] Translated

perf_train_cpu_many.mdto Korean by @nuatmochoi in #24923 - 🌐 [i18n-KO] Translated

add_new_model.mdto Korean by @mjk0618 in #24957 - 🌐 [i18n-KO] Translated

model_summary.mdto Korean by @0525hhgus in #24625 - 🌐 [i18n-KO] Translated

philosophy.mdto Korean by @TaeYupNoh in #25010 - 🌐 [i18n-KO] Translated

perf_train_tpu_tf.mdto Korean by @0525hhgus in #25433 - 🌐 [i18n-KO] Translated docs: ko: pr_checks.md to Korean by @sronger in #24987

Explicit input data format for image processing

Addition of input_data_format argument to image transforms and ImageProcessor methods, allowing the user to explicitly set the data format of the images being processed. This enables processing of images with non-standard number of channels e.g. 4 or removes error which occur when the data format was inferred but the channel dimension was ambiguous.

import numpy as np

from transformers import ViTImageProcessor

img = np.random.randint(0, 256, (4, 6, 3))

image_processor = ViTImageProcessor()

inputs = image_processor(img, image_mean=0, image_std=1, input_data_format="channels_first")- Input data format by @amyeroberts in #25464

- Add input_data_format argument, image transforms by @amyeroberts in #25462

Documentation clarification about efficient inference through torch.scaled_dot_product_attention & Flash Attention

Users are not aware that it is possible to force dispatch torch.scaled_dot_product_attention method from torch to use Flash Attention kernels. This leads to considerable speedup and memory saving, and is also compatible with quantized models. We decided to make this explicit to users in the documentation.

- [Docs / BetterTransformer ] Added more details about flash attention + SDPA : #25265

In a nutshell, one can just run:

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m")

model = AutoModelForCausalLM.from_pretrained("facebook/opt-350m").to("cuda")

# convert the model to BetterTransformer

model.to_bettertransformer()

input_text = "Hello my dog is cute and"

inputs = tokenizer(input_text, return_tensors="pt").to("cuda")

+ with torch.backends.cuda.sdp_kernel(enable_flash=True, enable_math=False, enable_mem_efficient=False):

outputs = model.generate(**inputs)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))to enable Flash-attenion in their model. However, this feature does not support padding yet.

FSDP and DeepSpeed Changes

Users will no longer encounter CPU RAM OOM when using FSDP to train very large models in multi-gpu or multi-node multi-gpu setting.

Users no longer have to pass fsdp_transformer_layer_cls_to_wrap as the code now use _no_split_modules by default which is available for most of the popular models. DeepSpeed Z3 init now works properly with Accelerate Launcher + Trainer.

- add util for ram efficient loading of model when using fsdp by @pacman100 in #25107

- fix fsdp checkpointing issues by @pacman100 in #24926

- fsdp fixes and enhancements by @pacman100 in #24980

- fix deepspeed load best model at end when the model gets sharded by @pacman100 in #25057

- resolving zero3 init when using accelerate config with Trainer by @pacman100 in #25227

- fix z3 init when using accelerate launcher by @pacman100 in #25589

Breaking changes

Default optimizer in the Trainer class

The defaul...

v4.31.0: Llama v2, MusicGen, Bark, MMS, EnCodec, InstructBLIP, Umt5, MRa, vIvIt

New models

Llama v2

Llama 2 was proposed in LLaMA: Open Foundation and Fine-Tuned Chat Models by Hugo Touvron et al. It builds upon the Llama architecture adding Grouped Query Attention for efficient inference.

- Add support for Llama 2 by @ArthurZucker in #24891

Musicgen

The MusicGen model was proposed in the paper Simple and Controllable Music Generation by Jade Copet, Felix Kreuk, Itai Gat, Tal Remez, David Kant, Gabriel Synnaeve, Yossi Adi and Alexandre Défossez.

MusicGen is a single stage auto-regressive Transformer model capable of generating high-quality music samples conditioned on text descriptions or audio prompts. The text descriptions are passed through a frozen text encoder model to obtain a sequence of hidden-state representations. MusicGen is then trained to predict discrete audio tokens, or audio codes, conditioned on these hidden-states. These audio tokens are then decoded using an audio compression model, such as EnCodec, to recover the audio waveform.

Through an efficient token interleaving pattern, MusicGen does not require a self-supervised semantic representation of the text/audio prompts, thus eliminating the need to cascade multiple models to predict a set of codebooks (e.g. hierarchically or upsampling). Instead, it is able to generate all the codebooks in a single forward pass.

- Add Musicgen by @sanchit-gandhi in #24109

Bark

Bark is a transformer-based text-to-speech model proposed by Suno AI in suno-ai/bark.

MMS

The MMS model was proposed in Scaling Speech Technology to 1,000+ Languages by Vineel Pratap, Andros Tjandra, Bowen Shi, Paden Tomasello, Arun Babu, Sayani Kundu, Ali Elkahky, Zhaoheng Ni, Apoorv Vyas, Maryam Fazel-Zarandi, Alexei Baevski, Yossi Adi, Xiaohui Zhang, Wei-Ning Hsu, Alexis Conneau, Michael Auli

- Add MMS CTC Fine-Tuning by @patrickvonplaten in #24281

EnCodec

The EnCodec neural codec model was proposed in High Fidelity Neural Audio Compression by Alexandre Défossez, Jade Copet, Gabriel Synnaeve, Yossi Adi.

InstructBLIP

The InstructBLIP model was proposed in InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning by Wenliang Dai, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Junqi Zhao, Weisheng Wang, Boyang Li, Pascale Fung, Steven Hoi. InstructBLIP leverages the BLIP-2 architecture for visual instruction tuning.

- Add InstructBLIP by @NielsRogge in #23460

Umt5

The UMT5 model was proposed in UniMax: Fairer and More Effective Language Sampling for Large-Scale Multilingual Pretraining by Hyung Won Chung, Xavier Garcia, Adam Roberts, Yi Tay, Orhan Firat, Sharan Narang, Noah Constant.

- [

Umt5] Add google's umt5 totransformersby @ArthurZucker in #24477

MRA

The MRA model was proposed in Multi Resolution Analysis (MRA) for Approximate Self-Attention by Zhanpeng Zeng, Sourav Pal, Jeffery Kline, Glenn M Fung, and Vikas Singh.

ViViT

The Vivit model was proposed in ViViT: A Video Vision Transformer by Anurag Arnab, Mostafa Dehghani, Georg Heigold, Chen Sun, Mario Lučić, Cordelia Schmid. The paper proposes one of the first successful pure-transformer based set of models for video understanding.

Python 3.7

The last version to support Python 3.7 was 4.30.x, as it reached end-of-life on June 27, 2023 and is no longer supported by the Python Software Foundation.

PyTorch 1.9

The last version to support PyTorch 1.9 was 4.30.x. As it has been more than 2 years, and we're looking forward to using features available in PyTorch 1.10 and up, we do not support PyTorch 1.9 for v4.31 and up.

RoPE scaling

This PR adds RoPE scaling to the LLaMa and GPTNeoX families of models. It allows us to extrapolate and go beyond the original maximum sequence length (e.g. 2048 tokens on LLaMA), without fine-tuning. It offers two strategies:

- Linear scaling

- Dynamic NTK scaling

Agents

Tools now return a type that is specific to agents. This type can return a serialized version of itself (a string), that either points to a file on-disk or to the object's content. This should make interaction with text-based systems much simpler.

- Tool types by @LysandreJik in #24032

Tied weights load

Models with potentially tied weights dropped off some keys from the state dict even when the weights were not tied. This has now been fixed and more generally, the whole experience of loading a model with state dict that don't match exactly should be improved in this release.

Whisper word-level timestamps

This PR adds a method of predicting timestamps at the word (or even token) level, by analyzing the cross-attentions and applying dynamic time warping.

Auto model addition

A new auto model is added, AutoModelForTextEncoding. It is to be used when you want to extract the text encoder from an encoder-decoder architecture.

- [AutoModel] Add AutoModelForTextEncoding by @sanchit-gandhi in #24305

Model deprecation

Transformers is growing a lot and to ease a bit the burden of maintenance on our side, we have taken the decision to deprecate models that are not used a lot. Those models will never actually disappear from the library, but we will stop testing them or accepting PRs modifying them.

(enfin ça

The criteria to identify models to deprecate was less than 1,000 unique downloads in the last 30 days for models that are at least one year old. The list of deprecated models is:

- BORT

- M-CTC-T

- MMBT

- RetriBERT

- TAPEX

- Trajectory Transformer

- VAN

Breaking changes

Fixes an issue with stripped spaces for the T5 family tokenizers. If this impacts negatively inference/training with your models, please let us know by opening an issue.

⚠️ ⚠️ [T5Tokenize] Fix T5 family tokenizers⚠️ ⚠️ by @ArthurZucker in #24565

Bugfixes and improvements

- add trust_remote_code option to CLI download cmd by @radames in #24097

- Fix typo in Llama docstrings by @Kh4L in #24020

- Avoid

GPT-2daily CI job OOM (in TF tests) by @ydshieh in #24106 - [Lllama] Update tokenization code to ensure parsing of the special tokens [core] by @ArthurZucker in #24042

- PLAM => PaLM by @xingener in #24129

- [

bnb] Fix bnb config json serialization by @younesbelkada in #24137 - Correctly build models and import call_context for older TF versions by @Rocketknight1 in #24138

- Generate: PT's

top_penforcesmin_tokens_to_keepwhen it is1by @gante in #24111 - fix bugs with trainer by @pacman100 in #24134

- Fix TF Rag OOM issue by @ydshieh in #24122

- Fix SAM OOM issue on CI by @ydshieh in #24125

- Fix XGLM OOM on CI by @ydshieh in #24123

- [

SAM] Fix sam slow test by @younesbelkada in #24140 - [lamaTokenizerFast] Update documentation by @ArthurZucker in #24132

- [BlenderBotSmall] Update doc example by @ArthurZucker in #24092

- Fix Pipeline CI OOM issue by @ydshieh in #24124

- [documentation] grammatical fixes in image_classification.mdx by @LiamSwayne in #24141

- Fix typo in streamers.py by @freddiev4 in #24144

- [tests] fix bitsandbytes import issue by @stas00 in #24151

- Avoid OOM in doctest CI by @ydshieh in #24139

- Fix

Wav2Vec2CI OOM by @ydshieh in #24190 - Fix push to hub by @NielsRogge in #24187

- Change ProgressCallback to use dynamic_ncols=True by @gmlwns2000 in #24101

- [i18n]Translated "attention.mdx" to korean by @kihoon71 in #23878

- Generate: force caching on the main model, in assisted generation by @gante in #24177

- Fix device issue in

OpenLlamaModelTest::test_model_parallelismby @ydshieh in #24195 - Update

GPTNeoXLanguageGenerationTestby @ydshieh in #24193 - typo: fix typos in CONTRIBUTING.md and deepspeed.mdx by @zsj9509 in #24184

- Generate: detect special architectures when loaded from PEFT by @gante in #24198

- 🌐 [i18n-KO] Translated tasks_summary.mdx to Korean by @kihoon71 in #23977

- 🚨🚨🚨 Replace DataLoader logic for Accelerate in Trainer, remove unneeded tests 🚨🚨🚨 by @muellerzr in #24028

- Fix

_load_pretrained_modelby @SunMarc in #24200 - Fix steps bugs in no trainer examples by @Ethan-yt in #24197

- Skip RWKV test in past CI by @ydshieh in #24204

- Remove unnecessary aten::to overhead in llama by @fxmarty in #24203

- Update

WhisperForAudioClassificationdoc example by @ydshieh in #24188 - Finish dataloader integration by @muellerzr in #24201

- Add the number of

modeltest failures to slack CI report by @ydshieh in #24207 - fix: TextIteratorStreamer cannot work with pipeline by @yuanwu2017 in #23641

- Update

(TF)SamModelIntegrationTestby @ydshieh in #24199 - Improving error message when using

use_safetensors=True. by @Narsil in #24232 - Safely import pytest in testing_utils.py by @amyeroberts in #24241

- fix overflow when training mDeberta in fp16 by @sjrl in #24116

- deprecate

use_mps_deviceby @pacman100 in #24239 - Tied params cleanup by @sgugger in #24211

...

v4.30.2: Patch release

- Fix push to hubby @NielsRogge in #24187

- Fix how we detect the TF package by @Rocketknight1 in #24255